FreeNAS 11 introduces a GUI for FreeBSD’s bhyve hypervisor. This is a potential replacement for the ESXi + FreeNAS All-in-One “hyper-converged storage” design.

Hardware

The hardware is my Supermicro Microserver Build:

- Xeon D-1518 (4 physical cores, 8 threads) @ 2.2GHz

- 16GB DDR4 ECC memory

- 4 x 2TB HGST drives in RAID-Z, plus 100GB Intel DC S3700s for the ZIL (over-provisioned to 8GB), all on an M1015. In environments 1 and 2 the M1015 was passed through to the FreeNAS VM via VT-d.

- 2 x Samsung FIT USB drives for booting the OS (either ESXi or FreeNAS)

- 1 x extra DC S3700 used as the ESXi datastore that the FreeNAS VM is installed on in environments 1 and 2 (not used in environment 3).

Environments

E1. ESXi + FreeNAS 11 All-in-one.

E2. Nested bhyve + ESXi + FreeNAS 11 All-in-one.

Nested virtualization test. An Ubuntu VM with VirtIO is installed as a bhyve guest on FreeNAS, which itself runs under the ESXi hypervisor with raw access to the disks. FreeNAS is given 4 cores and 12GB of memory. The guest gets 1GB of memory and was tested with 1 core (1C) and 2 cores (2C). What is neat about this environment is that it could be used as a stepping stone when migrating from environment 1 to environment 3 or vice versa (I actually tested migrating, and it worked).

E3. bhyve + FreeNAS 11

An Ubuntu VM with VirtIO is installed as a bhyve guest on FreeNAS running on bare metal. The guest gets 1GB of memory and is backed by a ZVOL, since that was the only option. Tested with 1C and 2C.

All environments used FreeNAS 11; E1 and E2 also used VMware ESXi 6.5.

Testing Notes

The guest and FreeNAS were rebooted between tests to clear ZFS’s ARC (in-memory read cache). The sysbench test files were recreated at the start of each test. The script I used for testing is https://github.com/ahnooie/meta-vps-bench with the networking tests removed.

No tuning was attempted in any environment; I just used the sensible defaults.
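The procedure above (reboot to clear the ARC, recreate the sysbench test files, then run) can be sketched as a small Python driver. This is a hypothetical illustration, not the meta-vps-bench script: it assumes sysbench 1.0-style syntax (`sysbench fileio --threads=N --file-total-size=... prepare|run|cleanup`), and the file size, test mode, thread count per core, and function names are placeholders. It only builds command lines, so nothing executes until you hand them to something like `subprocess.run`.

```python
from itertools import product

# Hypothetical test matrix based on the environments and core counts above.
ENVIRONMENTS = ["E1", "E2", "E3"]
GUEST_CORES = [1, 2]

# Assumed sysbench 1.0-style fileio options; size and mode are placeholders.
FILEIO_OPTS = ["--file-total-size=4G", "--file-test-mode=rndrw"]


def fileio_commands(threads):
    """Return prepare/run/cleanup command lines for one fileio test.

    Running 'prepare' at the start of every test recreates the test files,
    mirroring the procedure described in the text.
    """
    base = ["sysbench", "fileio", f"--threads={threads}", *FILEIO_OPTS]
    return [base + ["prepare"], base + ["run"], base + ["cleanup"]]


def test_plan():
    """Yield (environment, cores, commands) for every combination.

    A real driver would reboot the guest and FreeNAS (to clear the ARC)
    before each combination; that step is only noted here, not automated.
    """
    for env, cores in product(ENVIRONMENTS, GUEST_CORES):
        yield env, cores, fileio_commands(threads=cores)


if __name__ == "__main__":
    for env, cores, commands in test_plan():
        print(f"# {env}, {cores}C: reboot guest and FreeNAS first")
        for cmd in commands:
            print(" ".join(cmd))
```

Keeping the reboot as a manual step reflects that it spans two machines (guest and host), which a script inside the guest cannot easily orchestrate.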

Disclaimer on comparing Apples to Oranges

This is not a business- or enterprise-level comparison. The test is meant to show how an Ubuntu guest performs in various configurations on the same hardware, within the constraints of a typical budget home server running a free “hyperconverged” solution: a hypervisor and FreeNAS storage on the same physical box. Not all environments are expected to perform identically; my goal is just to see whether each one performs “good enough” for home use. For example, environments using NFS-backed storage are going to be slower than environments with local storage, but they should still at the very least be able to max out a 1Gbps Ethernet link. This set of tests is designed to benchmark how I would set up each environment given the constraint of one physical box running both the hypervisor and FreeNAS + ZFS as the storage backend. The test is limited to a single guest VM. In the real world, dozens, if not hundreds or even thousands, of VMs run simultaneously, so advanced hypervisor features like memory deduplication make a big difference; this test made no attempt to benchmark those. This is not an apples-to-apples test, so be careful what conclusions you draw from it.
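For reading the MBps charts against the “max out a 1Gbps Ethernet” bar, it helps to convert link speed to megabytes per second. The helper below is a hypothetical sketch using decimal units (1 MB = 10^6 bytes); it gives the raw line rate only, and real NFS throughput over such a link will be somewhat lower once protocol overhead is accounted for.

```python
def gbps_to_mb_per_s(gbps):
    """Convert a link speed in gigabits/s to megabytes/s (decimal units).

    This is the raw line rate; it ignores Ethernet, TCP and NFS overhead.
    """
    bits_per_s = gbps * 1e9
    return bits_per_s / 8 / 1e6  # 8 bits per byte, 10^6 bytes per MB


print(gbps_to_mb_per_s(1))  # prints 125.0
```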

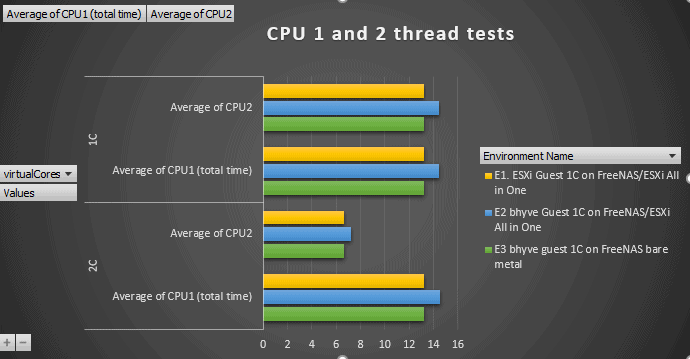

CPU 1 and 2 threaded test

I’d say these are equivalent, which probably shows how little overhead hypervisors add these days, though nested virtualization is a bit slower.
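One way to put a number on “little overhead” is to express each virtualized result as a percentage slowdown relative to a baseline. The helper below is a hypothetical sketch: the function name and the sample scores are made up for illustration and are not measurements from these charts. It assumes higher scores are better, as with sysbench events per second.

```python
def overhead_pct(baseline, measured):
    """Percent slowdown of `measured` relative to `baseline`.

    Assumes higher is better (e.g. events per second).
    A negative result means `measured` was faster than the baseline.
    """
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return 100.0 * (baseline - measured) / baseline


# Hypothetical scores only, not results from this benchmark.
print(overhead_pct(100.0, 95.0))  # prints 5.0
```

Normalizing against a single baseline makes environments with very different absolute scores comparable on one scale.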

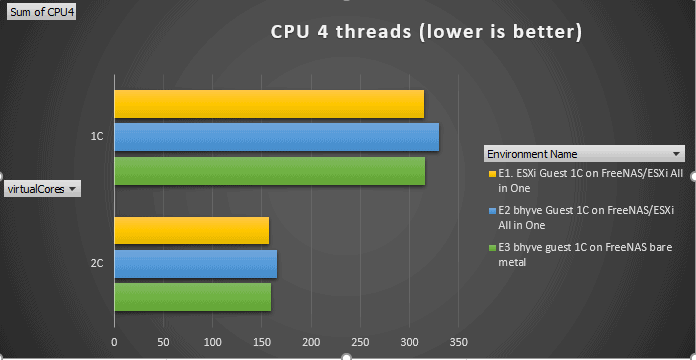

CPU 4 threaded test

Good to see that 2 cores actually perform faster than 1 core on a 4-threaded test. Nothing to see here…

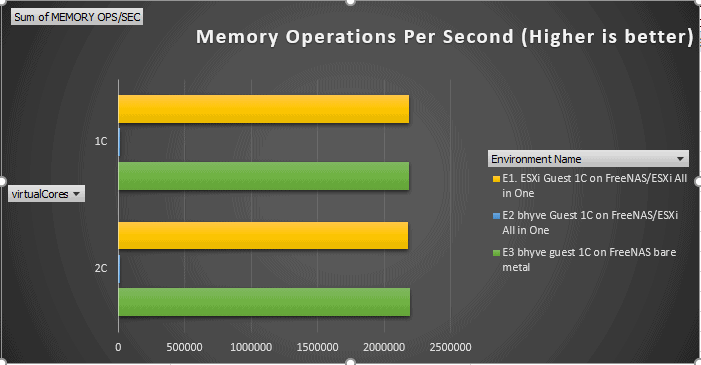

Memory Operations Per Second

Horrible performance with nested virtualization, but with the hypervisor on bare metal, ESXi and bhyve performed identically.

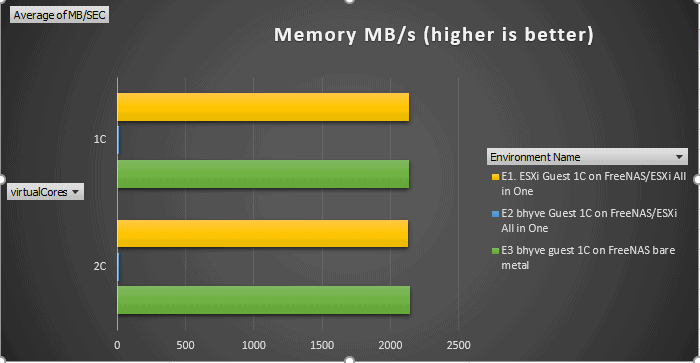

Memory MB/s

Once again nested virtualization was slow; other than that, performance was neck and neck.

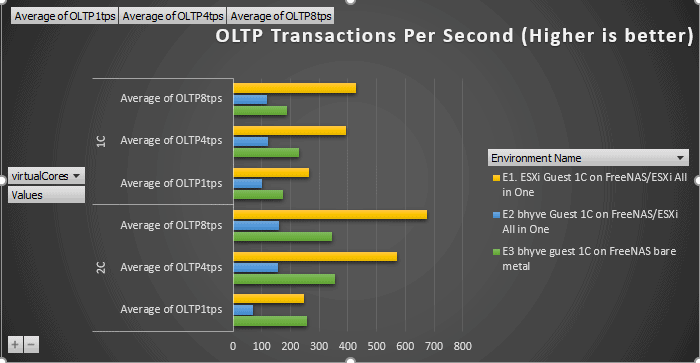

OLTP Transactions Per Second

The ESXi environment clearly takes the lead over bhyve, especially as the number of cores/threads increases. This is interesting because ESXi outperforms despite the I/O penalty from using NFS, so ESXi must be more than making up for that somewhere else.

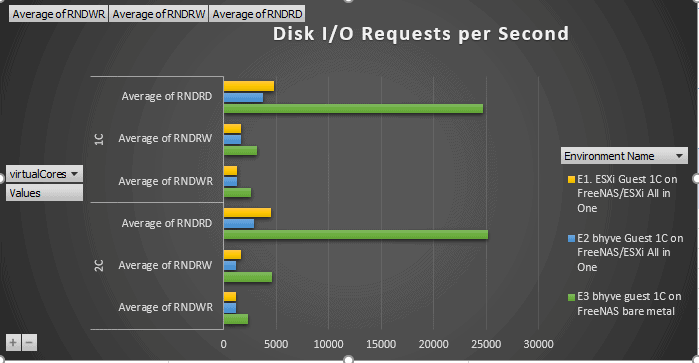

Disk I/O Requests per Second

Clearly there’s an advantage to using local ZFS storage vs NFS. I’m a bit disappointed in the nested virtualization performance, since from a storage standpoint it should be equivalent to bare-metal FreeNAS; the gap may be due to the slow memory performance in that environment.

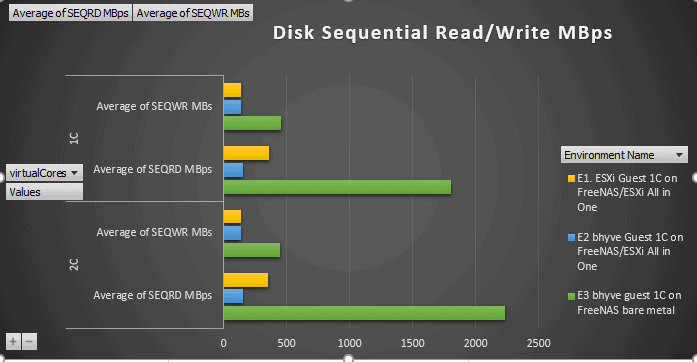

Disk Sequential Read/Write MBps

No surprises here: local ZFS storage is going to outperform NFS.