I messed up my zpool with a stuck log device, and rather than try to fix it, I decided to wipe out the zpool and restore the ZFS datasets using CrashPlan.

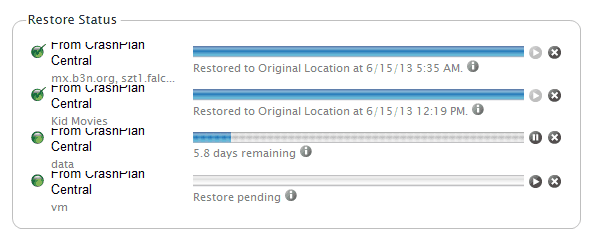

It took CrashPlan roughly a week to restore all my data (~1TB), which is making me consider local backups. All permissions came back intact. Dirty VM backups seemed to boot just fine (I also have daily clean backups using GhettoVCB just in case, but I didn't need them). One nice feature is that I could prioritize certain files or folders during the restore by creating multiple restore jobs. I had a couple of high-priority VMs first, then wanted the kids' movies to come in next, and then the rest of the data and VMs.

Hi! Thanks for blogging about your restore experience. Smart call backing up rather than depending solely on the data protection features of ZFS.

About a week is making good time for restoring ≈1TB over the internet. Even so, you’ll definitely get a faster restore from a local backup, and have extra redundancy with that, so it’d be a good call to do local backups as well.

-Ryan at Code 42 (makers of CrashPlan)

Thanks for the comment, Ryan. It’s great to have a robust backup solution for home servers.

Ben, awesome! I didn't know CrashPlan would work on an illumos distro (e.g. https://b3n.org/installing-crashplan-on-omnios/), and it's great to see a real-world restore.

Ryan, I think I'm sold on CrashPlan. Looking at your site, the option to back up to local drives and computers, offsite drives and computers, or online to CrashPlan Central seems not only flexible but perfect.

Hi, Jon. CrashPlan has unfortunately dropped Solaris support. The preferred way to back up an illumos system with CrashPlan is to share the data via NFS and have a Linux machine running CrashPlan mount the share and back it up from there.

I'm in the process of building a FreeNAS server with about 16TB of capacity and two redundant disks. Your post about a 'stuck log device' makes me concerned about the possible fragility of ZFS. Can you make any recommendations on how to avoid this and make my zpool as reliable as possible? I think Comcast would s**t themselves if I tried to download 15TB worth of data from my CrashPlan account (which I just renewed today for another 4 years)!! :)

Hi, Dave. I still run ZFS and don't have any concerns about its reliability. I got the stuck log device while performance testing different operating systems and ZIL configurations: I was importing and exporting the zpool across different OSes, adding and removing log devices to test the ZIL random write performance of different SSDs, and also testing VMDK vs. VMDirect IO performance, etc. I don't think those are normal circumstances. My best suggestion for avoiding the problem is not to remove the log device unless you really need to. When the log device became stuck, my zpool was still online and usable (although write performance suffered greatly). I probably could have gotten help on some forums, but I decided to just destroy and rebuild the zpool, since I had created it a long time ago (I think on Nexenta) and wanted to move to a newer compression algorithm with OmniOS anyway. I only have about 1TB of data, so I can back up to an external HD in addition to CrashPlan online. Since your data set is so large, you would probably need to set up an additional server to back up to if you wanted a local backup.