TrueNAS Scale and Proxmox VE are my two favorite appliances in my homelab. TrueNAS is primarily a NAS/SAN appliance, and it excels at storage; it is the most widely deployed storage platform in the world. Proxmox, on the other hand, is a virtualization environment, so it excels at VMs and software-defined networking. I agree with T-Bone’s assessment that TrueNAS is great at storage, but if you want versatility, Proxmox is the better option.

I consider TrueNAS a Network Appliance: it’s not just a NAS, it also provides a platform for utilities, services, and apps (via KVM, Kubernetes, and Docker) on your network. I consider Proxmox an Infrastructure Appliance: it provides compute, networking, and storage, and you build your services and applications on top of the VMs and containers it provides.

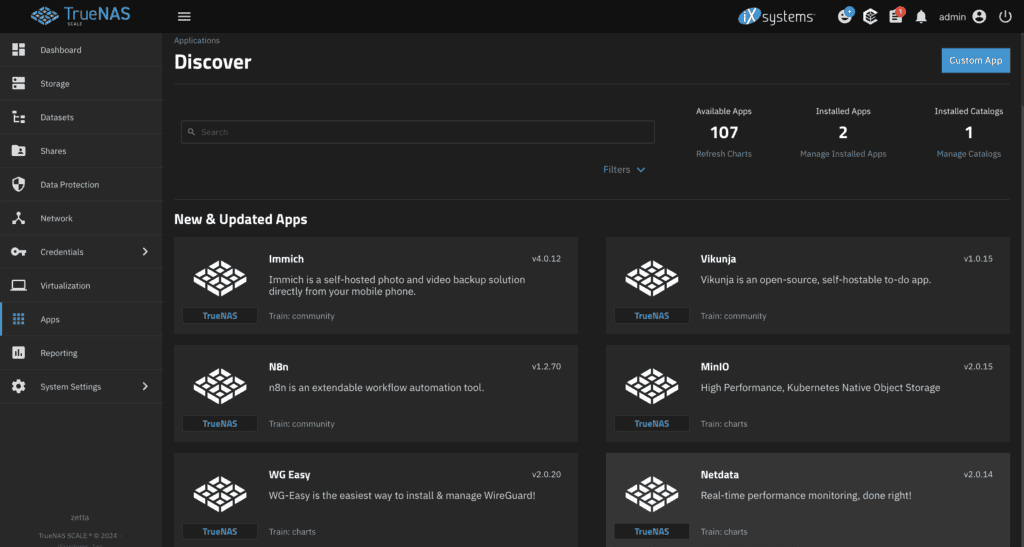

TrueNAS Scale – The Swiss Army Knife of the LAN. TrueNAS offers a lot of network services beyond a NAS, and it’s the first thing I’d put on my network, even if I didn’t need it for storage. All the random things you need can be deployed from the GUI in seconds: Cloudflare tunnels, WireGuard, a TFTP server, an S3 server, SMB, iSCSI, a DDNS updater, Emby, Plex, etc.

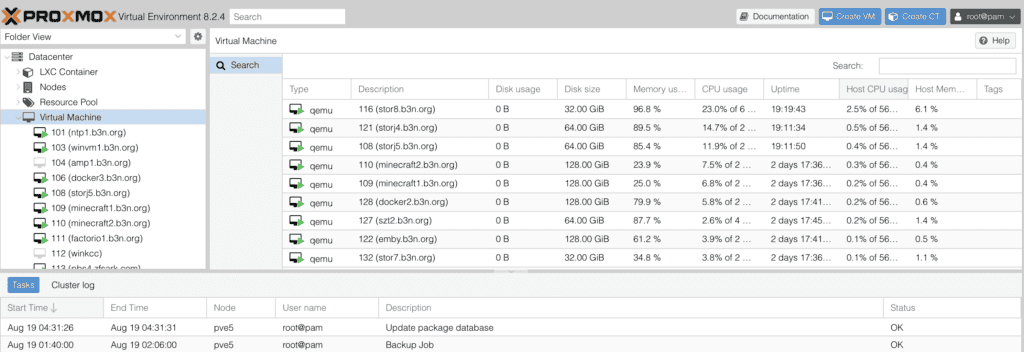

Proxmox VE – Robust Infrastructure Platform. Proxmox is a virtualization platform providing KVM virtual machines and LXC containers, and you deploy your applications on top of those. Building out a network on Proxmox takes more work since it doesn’t provide anything at the service or application level.

The two systems have different primary focuses, with some overlap.

The simplest way I can put this: if Proxmox were a cloud provider, it would be IaaS. That’s all it does, but it does it well. If TrueNAS were a cloud provider, it would span IaaS and SaaS.

Rather than start from a feature list, I’m going to start from a few of my homelab services and consider how I’d implement them on each platform. This will be relevant if you’re running a homelab since you probably run similar services. Then I’ll finish up with my thoughts on the feature capabilities of each platform.

Homelab Service Requirements – TrueNAS vs Proxmox

Samba Share

I have several terabytes of SMB shares on my network:

- Emby media.

- A Data share with lots of miscellaneous files and folders.

- Scan destinations for my ScanSnap and Canon scanners.

- A few TB of archived files and backups from the last three decades.

- Time Machine backups for our Macs.

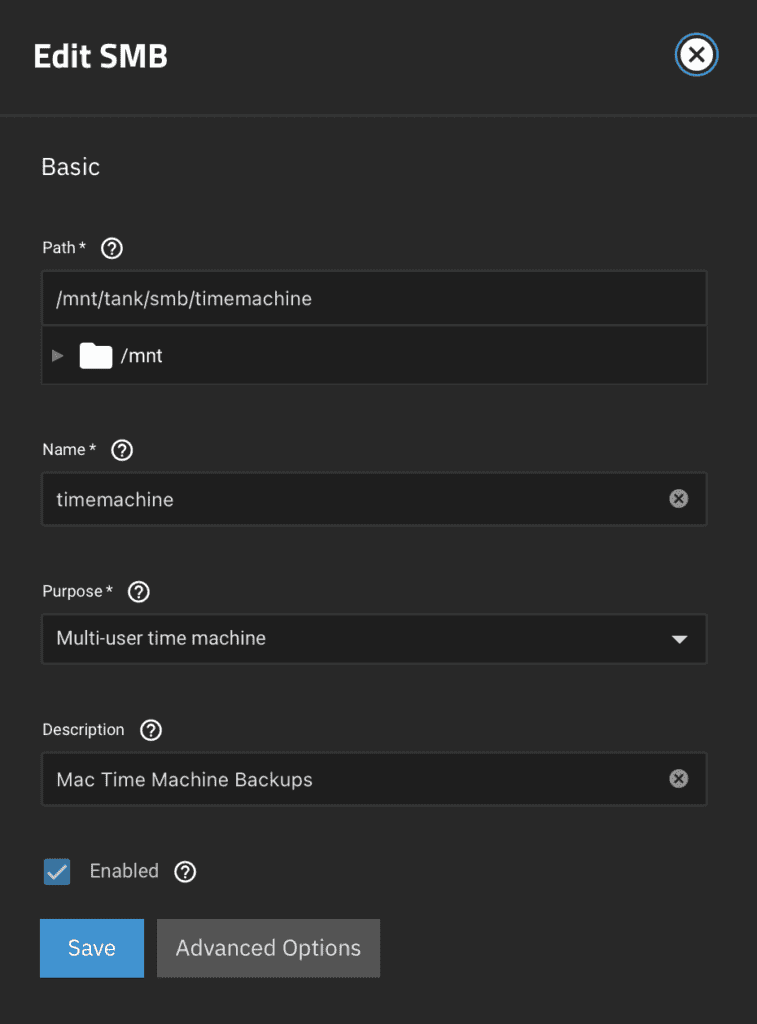

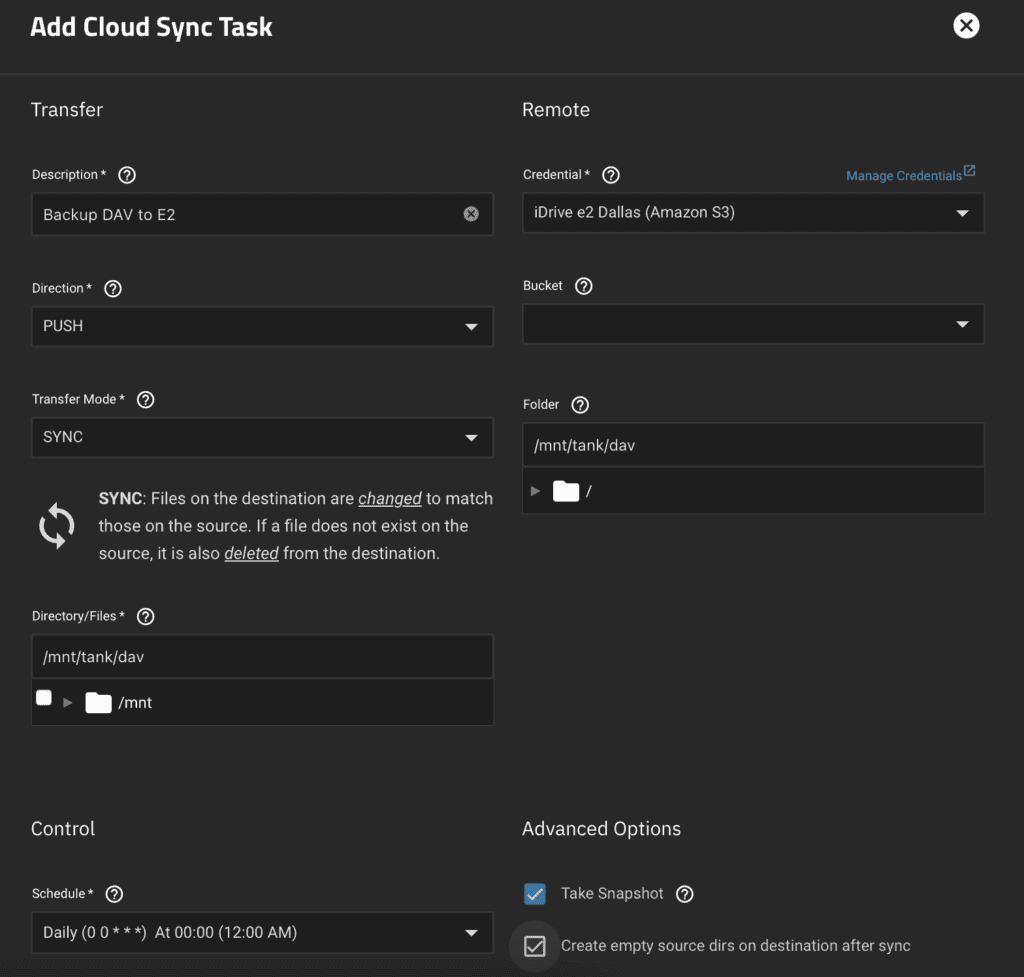

Samba – TrueNAS is built for this. Just create the ZFS datasets and Samba shares, all from the GUI. To configure backups, go to Data Protection and set up a Cloud Sync task pointed at any S3-compatible cloud provider (Amazon S3, Backblaze B2, iDrive e2, etc.). ZFS snapshots are fully integrated with Windows File Explorer, so users can restore files or folders from a previous snapshot themselves by right-clicking and choosing Restore previous versions. You can also expose the .zfs folder so Mac and Linux clients can access snapshots. Even specialized SMB shares like Time Machine backups are easy to set up, and you can deploy a WORM (write once, read many) share, which is great for archiving data you want to become immutable. All of this can be deployed in a matter of seconds.

Samba – Proxmox is better thought of as infrastructure. It has zero NAS capability. In the Proxmox philosophy, a NAS runs separately from Proxmox, or on top of the PVE (Proxmox Virtual Environment) infrastructure, not on the Proxmox host itself; the Proxmox way is separation of duties. Since Proxmox is just Debian, you /could/ apt-get install samba on the host. That’s of course not a best practice, but you could do it. A slightly better (though still not best-practice) idea is to create a ZFS dataset on the Proxmox host, bind-mount it into an LXC container, and run Samba in the container. This will work, but for configuration and backups you’re on your own. And I can’t stress backups enough: if you put SMB on the host, Proxmox won’t back it up. You’ll likely forget about it until you need to restore your data and realize the backup scripts you put in place have been failing unnoticed for the last eleven months.

In the Proxmox paradigm, you install your NAS filer in a VM. Proxmox’s ZFS or Ceph storage handles the VM disks, so the NAS only has to serve files, which requires much less I/O. This gives your NAS all the benefits of being abstracted by a VM: Proxmox can back it up, replicate it, and snapshot the entire thing before an upgrade, and you can migrate it from host to host in the Proxmox VE cluster.

You could install a VM with Ubuntu or Debian and run a Samba server there; in a production environment, separation of duties is essential. You could also install TrueNAS as a VM. I wouldn’t even bother with the complexity of passing drives through to it as I did with my FreeNAS VMware setup. Just give it storage from Proxmox and use the TrueNAS VM as a NAS: let Proxmox manage the disks and TrueNAS manage the SMB, NFS, and iSCSI shares.

Between running Ubuntu/Debian or TrueNAS in a VM on Proxmox, I think TrueNAS has the advantage for GUI configuration and alerting.

Score: TrueNAS is a better NAS. TrueNAS 1, Proxmox 0.

Emby Server

I run an Emby server (similar to Plex) which mounts SMB storage to serve video files to our TV.

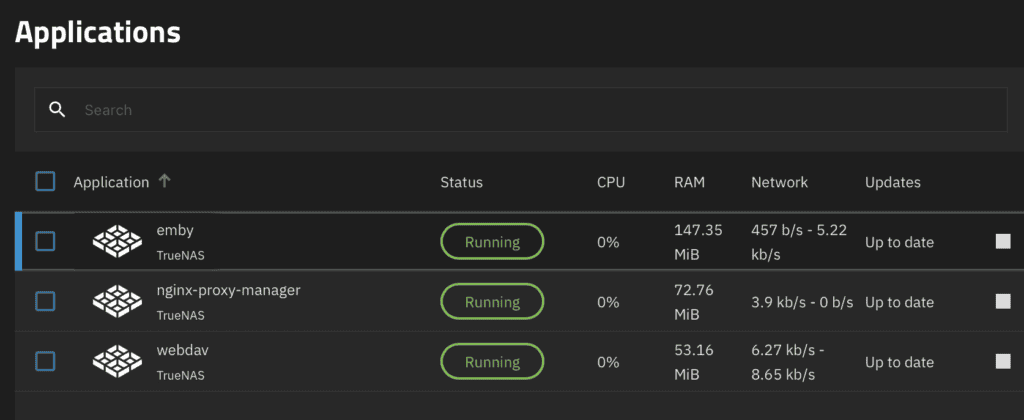

Emby on TrueNAS. TrueNAS has a built-in Emby application powered by Docker; just install it and you’re good. It creates a local dataset for your Emby data, which you can also share via SMB right from TrueNAS to add or remove media.

Emby on Proxmox. The Proxmox model would be to run the Emby server in a VM or LXC container.

Score: Simpler & faster setup with TrueNAS. TrueNAS 1, Proxmox 0.

Windows VM

Windows VM – this would be a KVM VM on either platform.

Windows VM – TrueNAS. A KVM VM works, but getting to the console is a bit tricky: it requires VNC, and I’m not sure that’s very secure.

Windows VM – Proxmox. A KVM VM with a full range of options and nice, secure, web-based console access.

Score: Both will work, but Proxmox is a better hypervisor for things like this. TrueNAS 0, Proxmox 1.

Minecraft Servers

I run a few Java Minecraft servers.

Minecraft – TrueNAS. There is a built-in Minecraft app based on Docker, but this is where things get tricky. Here I have a Java service exposed to the world. I might be a little too cautious, but this seems risky to run in Docker. I know containerization has come a long way, but containers share the host kernel, so a kernel-level compromise or crash from inside a Docker container is a compromise or crash of the TrueNAS host. And while I could put the application itself on a VLAN in my DMZ, the TrueNAS host (sharing the same kernel) is on my LAN. For a service like Minecraft, I think it’s more secure to run it in KVM, so even though TrueNAS can run Minecraft as an app, I would use a VM. If I were only running Minecraft for my LAN, though, a TrueNAS Docker application would be an option. I’ve also found that any GUI that tries to configure a Minecraft server is more difficult than the CLI. Because I’m already familiar with the command line, it’s simpler for me to manage Minecraft in a VM than to figure out how to finagle the GUI into writing the ‘server.properties’ file just right.

Minecraft – Proxmox. I would also run it in a VM. Having worked with both systems, I find Proxmox has better networking tools and virtual switches, which make it easier to isolate my DMZ from my LAN. That said, I think I could get it all working in a TrueNAS VM.

Score: TrueNAS gives you both options. TrueNAS 1, Proxmox 0.

Other Services…

Storj – same as Minecraft. TrueNAS has a built-in Storj app, but again, for the sake of security, I’d run it in a VM on TrueNAS (and it would be a VM on Proxmox).

Factorio – there doesn’t seem to be a TrueNAS app for it, so it looks like a VM on either platform (though it could run as a custom Docker app on TrueNAS).

Plausible (Website Analytics). I run this in a Docker container. It could run in a VM on Proxmox or directly as a Docker app on TrueNAS.

CyberPanel + OpenLiteSpeed + PHP + MySQL web server. This would run in a VM on either platform.

TrueNAS Scale vs Proxmox VE Features

Moving from services to features.

Hypervisor

Proxmox outshines TrueNAS as a hypervisor. While both use KVM behind the scenes, Proxmox’s hypervisor options are unmatched: more storage options (Ceph or ZFS), more machine settings, and better web console access to VMs and containers. If you cluster multiple Proxmox servers, you can live-migrate VMs between them.

Containerization

Containers let the host share its kernel with isolated container environments. If I were running only LAN services, I’d probably use containers a lot more. I love the density you can get with containers, but I’m not yet comfortable with their level of security. Proxmox also has some limitations with LXC containers: you can’t live-migrate them (which makes sense, because they share the host kernel), so moving one from host to host requires downtime.

Proxmox supports LXC containers. Containers can be deployed instantly, and you get your distribution of choice (I prefer Ubuntu, but you can just as easily deploy Debian or most other Linux distributions). A container is like a VM, but it shares the kernel with the host and is a lot more efficient, since no machine is being emulated. You will notice that just as Proxmox has no NAS capability, it also has no Docker management capability. I think the lack of a GUI for Docker management is a huge opportunity for Proxmox. But again, Proxmox is infrastructure: you run Docker in a VM or LXC container on top of Proxmox, not on Proxmox directly.

TrueNAS supports Apps, which are iX- or community-maintained configuration templates for Docker images that can be set up and deployed from the GUI. If the app you’re looking for isn’t available, you can deploy any normal Docker image using the “Custom App” option.

TrueNAS 1/2, Proxmox 1/2.

Storage

Proxmox supports ZFS and Ceph. It can also use network storage from a NAS, such as NFS (which can even be served from a TrueNAS server). TrueNAS is focused on ZFS, and high-availability storage is only available on Enterprise appliances.

TrueNAS 0, Proxmox 1.

High Availability

The Emby server must not go down. TrueNAS Scale High Availability is limited to iX Systems hardware licensed for Enterprise, and as far as I know it covers storage only (I don’t believe it includes VM failover). Proxmox has high availability at both the storage and hypervisor levels, and you can live-migrate VMs from one host to another in a cluster.

TrueNAS: 0, Proxmox 1.

Performance

In my experience, both are fast enough that I wouldn’t pick one over the other for performance. But if you are interested in performance, take a look at Robert Patridge’s Proxmox vs TrueNAS performance tests (Tech Addressed), where Proxmox smokes TrueNAS.

TrueNAS 0, Proxmox 1.

Networking

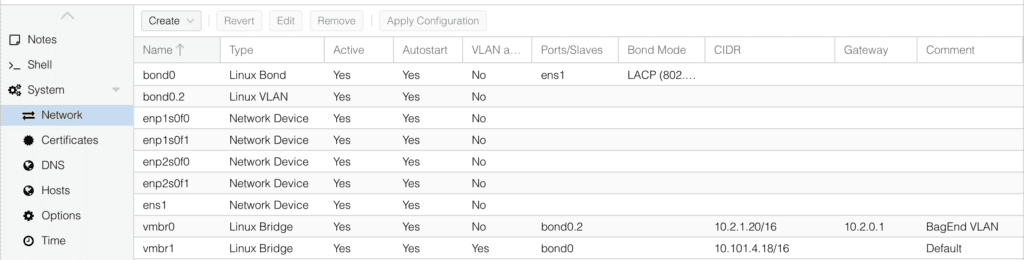

Proxmox has robust SDN (Software Defined Networking) tools and virtual switches (including Open vSwitch if you need advanced networking). TrueNAS is a little more difficult to work with, especially when using Docker applications. I agree with Tim Kye’s networking assessment (T++) of the platforms: sometimes I feel like I’m fighting TrueNAS because of certain decisions iX made, while Proxmox just works.

TrueNAS 0, Proxmox 1.

Backups

TrueNAS Backups – Backing up ZFS datasets on TrueNAS is very easy. You can back up at the file level to cloud storage (Amazon S3, Backblaze B2, iDrive e2, or any S3-compatible storage), to another ZFS host using ZFS replication, or with rsync. TrueNAS has no ZVOL (block device) backup capability other than ZFS replication, so for a VM or iSCSI device you need third-party backup software to get file-level backups. If you do things the TrueNAS way and run all your services as the built-in Docker-based applications, this isn’t a problem, because all the configuration is stored on ZFS datasets (not ZVOLs). You simply restore your TrueNAS config file, restore all the datasets from Cloud Sync, point the applications at the restored datasets, and you’re good to go (in theory; sometimes permissions…).

S3-compatible object storage for backing up ZFS datasets is inexpensive. Backblaze B2 is $6/TB, and iDrive e2 is $5/TB, but you can prepay for e2 to bring the price to around $3.50/TB.

However, if you have any VMs or use iSCSI, you’re still going to need block storage to back up ZVOLs via ZFS replication. You can use rsync.net, which starts around $12/TB/month, or set up a VM with block storage formatted as ZFS (see the block storage options under Proxmox Backups below).
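To put the per-TB prices above side by side, here is a minimal Python sketch. It assumes every price is per TB per month (the rsync.net figure is stated that way; I’m assuming the same for B2 and e2), uses simple linear pricing, and ignores minimum charges, egress, and API-call fees, so treat the results as ballpark figures. The provider labels and the `PRICE_PER_TB_MONTH` table are just illustrative inputs.

```python
from typing import Dict, List, Tuple

# Approximate prices quoted in this post, ASSUMED to be USD per TB per month.
# Prices change; check each provider before relying on these numbers.
PRICE_PER_TB_MONTH: Dict[str, float] = {
    "Backblaze B2": 6.00,
    "iDrive e2": 5.00,
    "iDrive e2 (prepaid, approx.)": 3.50,
    "rsync.net (starting price)": 12.00,
}


def monthly_cost(tb: float, price_per_tb: float) -> float:
    """Linear pricing: stored capacity (TB) times the per-TB monthly price."""
    return round(tb * price_per_tb, 2)


def compare(tb: float) -> List[Tuple[str, float, float]]:
    """Return (provider, monthly, annual) rows sorted from cheapest to most expensive."""
    rows = []
    for name, price in PRICE_PER_TB_MONTH.items():
        monthly = monthly_cost(tb, price)
        rows.append((name, monthly, round(monthly * 12, 2)))
    return sorted(rows, key=lambda row: row[1])


if __name__ == "__main__":
    # Example: 10 TB of datasets and replicated ZVOLs.
    for name, monthly, annual in compare(10):
        print(f"{name:32} ${monthly:>8.2f}/month  ${annual:>9.2f}/year")
```

Sorting the rows makes the trade-off visible: object storage is the cheap option for datasets, while ZVOLs need a ZFS-capable target, which is where the higher rsync.net price comes in.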

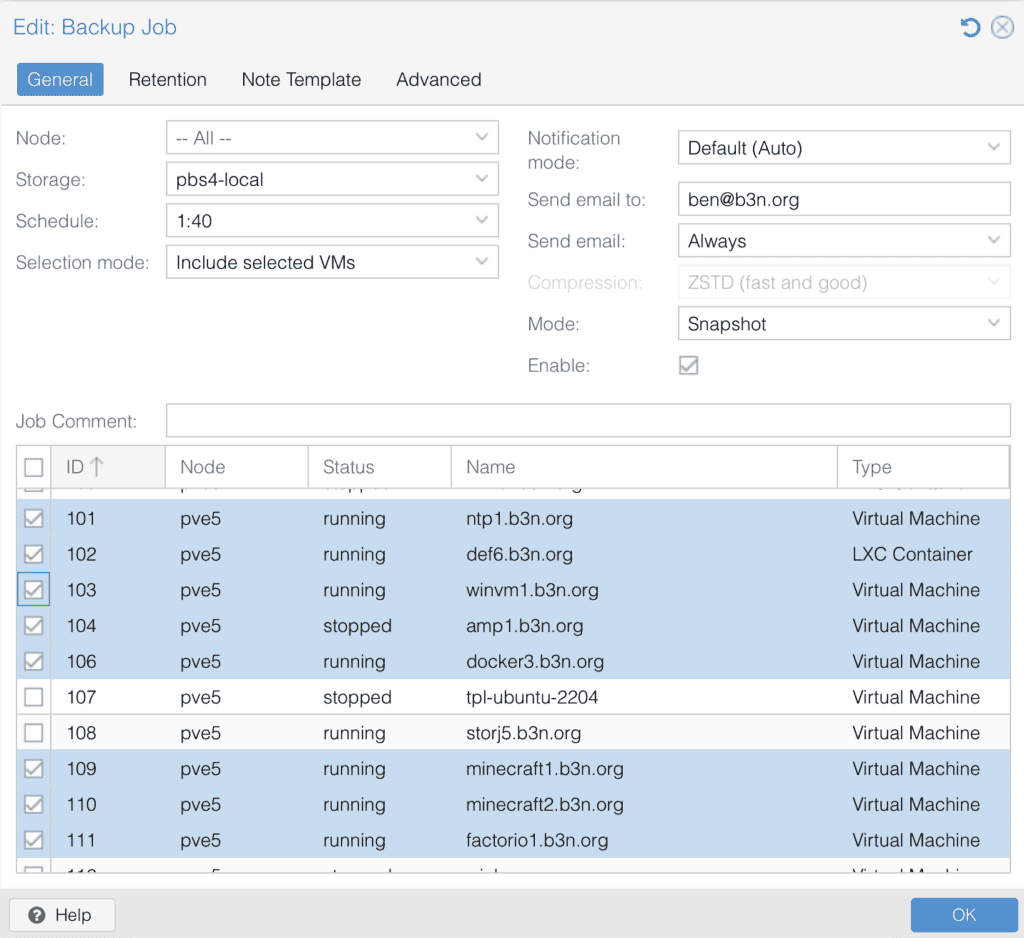

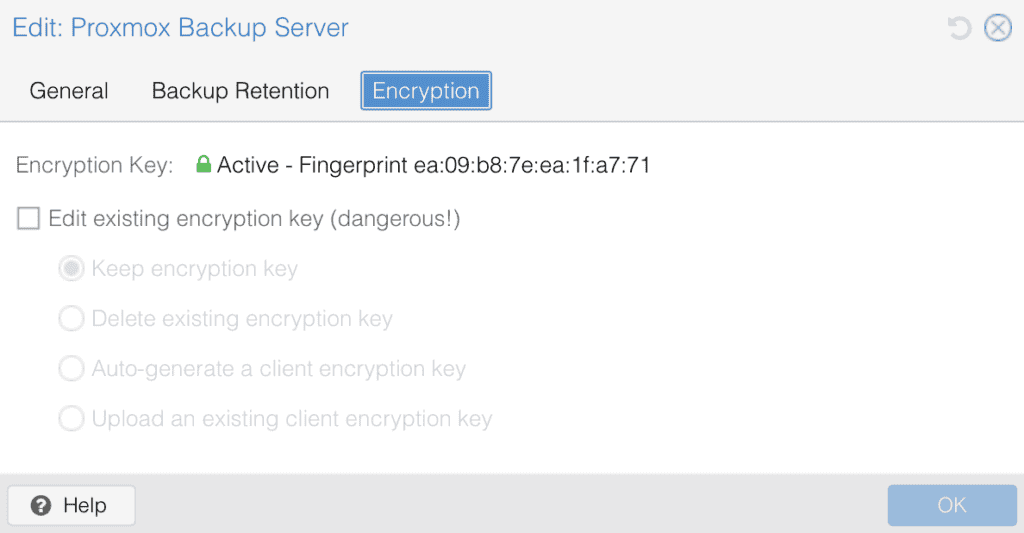

Proxmox Backups. The best backup solution for Proxmox is Proxmox Backup Server (PBS). You’ll need a local PBS server (it can run in a VM; just make sure to exclude it from backup jobs so you don’t get into an infinite loop) and a remote one.

Update 2025-11-15 – PBS 4.0 added S3 support as a technology preview, so backing up to services like S3 or B2 is coming soon!

The best approach is to find a VPS provider that offers block storage, install Proxmox Backup Server there, and replicate your backups from your local PBS to the remote PBS. BuyVM and SmartHost offer VPSes with block storage in the $5/TB range. With either service, you can upload the PBS ISO, or provision a KVM VPS with Debian and install PBS on top of it.

One other advantage of Proxmox Backup Server is deduplication. When you back up multiple VMs, data they share is stored only once, and each new backup version only takes up the space of the delta.
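To make the deduplication idea concrete, here is a toy Python sketch of chunk-level, content-addressed storage: data is split into chunks, each chunk is keyed by its SHA-256 digest, and a chunk that is already in the store is never written twice. This illustrates the general technique only; it is not PBS’s actual code or on-disk format, and the `ChunkStore` class and its 4-byte chunk size are made up for readability (real systems use chunks measured in MiB).

```python
import hashlib
from typing import Dict, List


class ChunkStore:
    """Toy content-addressed store: every unique chunk is stored once, keyed by SHA-256."""

    def __init__(self, chunk_size: int = 4) -> None:
        self.chunk_size = chunk_size
        self.chunks: Dict[str, bytes] = {}

    def backup(self, data: bytes) -> List[str]:
        """Split data into fixed-size chunks, store unseen ones, return the chunk index."""
        index = []
        for start in range(0, len(data), self.chunk_size):
            chunk = data[start:start + self.chunk_size]
            digest = hashlib.sha256(chunk).hexdigest()
            self.chunks.setdefault(digest, chunk)  # no-op if this chunk already exists
            index.append(digest)
        return index

    def restore(self, index: List[str]) -> bytes:
        """Reassemble a backup from its ordered list of chunk digests."""
        return b"".join(self.chunks[digest] for digest in index)

    def stored_bytes(self) -> int:
        return sum(len(chunk) for chunk in self.chunks.values())


if __name__ == "__main__":
    store = ChunkStore()
    store.backup(b"BASEBASEAAAA")           # VM 1, cloned from a shared base image
    store.backup(b"BASEBASEBBBB")           # VM 2, same base image
    latest = store.backup(b"BASEBASEAAAC")  # a later backup of VM 1 with a small change
    print(f"logical bytes: {3 * 12}, stored bytes: {store.stored_bytes()}")
    print(store.restore(latest))
```

Thirty-six logical bytes shrink to sixteen stored bytes: the shared base chunks are kept once, and the second backup of VM 1 only adds the one chunk that changed.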

Data Encryption

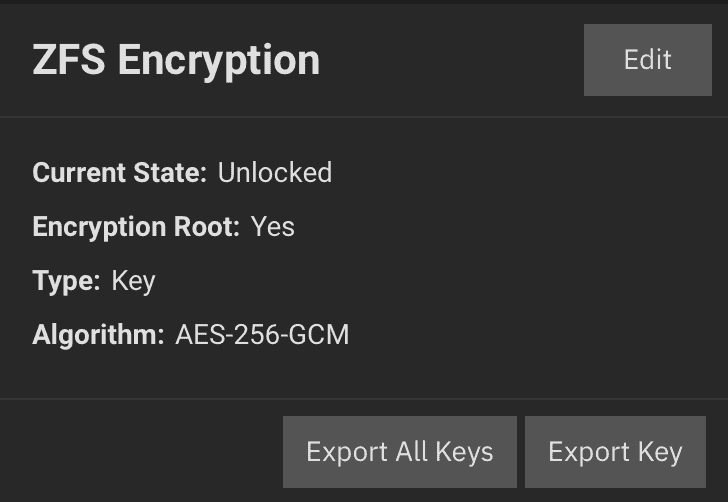

TrueNAS Encryption. TrueNAS can encrypt the entire pool (from the pool creation wizard), which is useful when sending failed disks back for warranty service. When you replicate an encrypted pool, the encryption is preserved, so the destination server has zero ability to see your data (make sure you keep a backup of your encryption keys offsite).

Backup Encryption: TrueNAS Cloud Sync can encrypt files and filenames when syncing to an S3-like cloud service. I should note that TrueNAS has an option to back up the configuration file, which includes all the encryption keys, so it’s worth backing it up periodically and storing it in a password manager.

Proxmox Encryption. Proxmox does not support pool-level encryption from the GUI, but you can easily set it up yourself with LUKS, unlocking either with a passphrase prompt at boot or from the TPM.

Backup Encryption: When backing up, Proxmox can encrypt the data in such a way that the destination server (a remote Proxmox Backup Server) can’t read the data at all without the key.

TrueNAS 1, Proxmox 0. (no GUI for LUKS in Proxmox)

Disaster Recovery

I have done test disaster recoveries on both systems. I prefer a DR with Proxmox because you can restore high-priority VMs first then the rest. I just find the process a lot simpler. PBS also allows you to restore individual files from backups of Linux VMs. I’ve found TrueNAS a bit slower at restoring (especially if restoring from ZFS replication), and from Cloud Sync the permissions aren’t restored (one reason to virtualize your NAS is if you restore it at the block level there’s no chance you’ll miss permissions).

TrueNAS may have an advantage in that you can restore specific files if you need a couple of files after a disaster, but Proxmox Backup Server understands the Linux filesystem so it allows you to restore specific files from a VM as well.

That said, with both systems, I’ve been able to restore from scratch with success.

TrueNAS 1, Proxmox 1.

Storage Management

TrueNAS excels here when it comes to ZFS management. ZFS can be managed fully from the GUI in TrueNAS, including scheduling scrubs and replacing failed drives. In Proxmox you will need to drop into the command line. This isn’t a huge deal for me since I know the CLI well. Proxmox may have a better GUI for Ceph storage management but I haven’t used it.

TrueNAS 1, Proxmox 0.

Licensing

The single greatest cause of downtime is licensing failure. However, both Proxmox and TrueNAS are open source, so you won’t have any downtime caused by licensing issues, and won’t need to waste time going back and forth with sales or negotiating contracts.

TrueNAS licensing. The TrueNAS Scale edition is free and open source, requiring no keys. Generally speaking, if you’re sticking with open-source technology the Scale edition is all you need. It’s when you start needing integration with other proprietary systems such as vCenter, Veeam, or Citrix that you’ll pay for it. High availability clustering and support will require Enterprise. For any business, you should get an Enterprise edition from iX Systems.

Proxmox licensing. Proxmox VE is free and open source. However, you won’t get updates between releases unless you enable the Enterprise or No-Subscription repository. The lowest-tier Enterprise subscription is a reasonable €110/year per CPU socket. For a business, or anything important, you should pay for Enterprise, but for a poor man’s homelab you have two options:

Option 1: Only upgrade twice a year, when new releases come out. You might be able to get away without interim updates at all. Proxmox VE is released twice a year, and a consistent twice-a-year update schedule for your homelab is not unreasonable. Since Proxmox is based on Debian, you still get Debian’s OS updates; the only updates you’re missing are for Proxmox itself (including the Proxmox kernel).

Option 2: Enable the No-Subscription repository. You’ll get faster updates, but it’s not intended for production. If I were running LXC containers with services exposed to the internet, I’d want to do this (or just pay for Enterprise) to get security fixes fast.

And of course, there is a nag screen if you don’t have an Enterprise subscription. You can find scripts to disable it, but there’s still a nag, and there’s no way to turn it off in the GUI.

TrueNAS 1, Proxmox 1.

Monitoring

The other day, as I walked into my garage, I saw red lights on several of my hard drives. The hard drives were too hot! I should have been using Stefano’s fork of my Supermicro fan speed control script, which raises the fan RPMs when drive temperatures climb.
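The core of a temperature-driven fan script is a curve that maps drive temperature to fan duty. Below is a minimal Python sketch of that mapping only. It is not Stefano’s fork or my original script, and the thresholds (35 °C to 45 °C, 30% to 100% duty) are hypothetical values you would tune for your own drives and chassis. Actually setting the fans on a Supermicro board is done through IPMI and varies by board, so that part is deliberately left out.

```python
from typing import Iterable


def fan_duty_for_temp(
    temp_c: float,
    min_temp: float = 35.0,  # hypothetical: at or below this, run fans at the floor
    max_temp: float = 45.0,  # hypothetical: at or above this, run fans flat out
    min_duty: int = 30,
    max_duty: int = 100,
) -> int:
    """Linearly interpolate a fan duty cycle (percent) from a drive temperature."""
    if temp_c <= min_temp:
        return min_duty
    if temp_c >= max_temp:
        return max_duty
    fraction = (temp_c - min_temp) / (max_temp - min_temp)
    return round(min_duty + fraction * (max_duty - min_duty))


def duty_for_drives(temps_c: Iterable[float]) -> int:
    """Drive the fans off the hottest disk so one overheating drive isn't averaged away."""
    return fan_duty_for_temp(max(temps_c))


if __name__ == "__main__":
    print(duty_for_drives([33, 40, 38]))
```

Keying off the hottest drive rather than the average is a deliberate choice: a single cooking disk in the corner of the chassis is exactly the case you want the fans to react to.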

Proxmox didn’t alert me at all. Proxmox has some basic monitoring, covering things like SMART failures, drive failures, and drives corrupting data, but TrueNAS goes a step further and monitors hardware proactively.

TrueNAS servers alert me to high drive temperatures, and in general I’d say their alerting is more proactive and comprehensive than Proxmox’s. Sometimes you have to dig to find problems in Proxmox, but in TrueNAS you can check the alert notifications in the top right of the GUI. Some examples of things you may get alerts for: a drive failure, high CPU temperature, high HDD temperature, a failed Cloud Sync, low storage space, a failed ZFS replication, an available IPMI firmware update, an expiring SSL certificate, a crashed or failed service, etc. From a hardware and software monitoring and alerting perspective, TrueNAS is a lot more capable.

I think Proxmox is better suited to environments where it’s monitored by something like Zabbix. TrueNAS can be monitored with Zabbix too, but it doesn’t need it.
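On Proxmox, a small cron job can cover the drive-temperature gap until something like Zabbix is in place. Here is a minimal Python sketch. It assumes smartmontools 7.0 or newer is installed (for `smartctl --json`) and that the drive reports a `temperature.current` field in that JSON, which not every device does. The device list, the 45 °C threshold, and how you deliver the alert (email, a push service, etc.) are placeholders.

```python
import json
import subprocess
from typing import Dict, List, Optional


def temp_from_smartctl_json(raw: str) -> Optional[int]:
    """Extract the current temperature (°C) from `smartctl --json` output, if present."""
    data = json.loads(raw)
    return data.get("temperature", {}).get("current")


def read_drive_temp(device: str) -> Optional[int]:
    # smartctl's exit status is a bit mask that can be non-zero even when the JSON is
    # usable, so a non-zero return code is deliberately not treated as fatal here.
    result = subprocess.run(
        ["smartctl", "--json", "-a", device], capture_output=True, text=True
    )
    return temp_from_smartctl_json(result.stdout)


def hot_drive_alerts(temps: Dict[str, Optional[int]], threshold_c: int = 45) -> List[str]:
    """Return one alert message per drive at or above the (hypothetical) threshold."""
    return [
        f"{dev}: {temp} °C (threshold {threshold_c} °C)"
        for dev, temp in sorted(temps.items())
        if temp is not None and temp >= threshold_c
    ]


if __name__ == "__main__":
    devices = ["/dev/sda", "/dev/sdb"]  # placeholder device list
    for line in hot_drive_alerts({dev: read_drive_temp(dev) for dev in devices}):
        print(line)  # replace with your notification method of choice
```

Parsing is kept separate from the `smartctl` call so the threshold logic can be checked without touching real hardware.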

TrueNAS 1, Proxmox 0.

GUI Completeness

Proxmox can do the initial setup, and you can do most things (probably 95%) through the GUI. But when you have to replace a failed drive in your pool or perform an upgrade, you’ll have to go to the CLI. I’m comfortable with this, but some people aren’t. TrueNAS, by contrast, can pretty much be run without ever using the CLI.

Fleet Management

TrueNAS Scale fleets can be managed with TrueCommand (allows management of systems up to 50 drives total for free).

With Proxmox each node can manage the entire cluster without the need for a central management service. No limits on the free version. But Proxmox can’t centrally manage a cluster of clusters (yet).

Platform Stability & Innovation

One area where Proxmox excels is stability. The team is deliberate, makes well-thought-out changes, and iterates slowly on its roadmap. The upgrade process is thorough and well documented, and I’ve never had anything break. By contrast, since I’ve been on TrueNAS, iX has switched the OS from FreeBSD to Linux, apps from Kubernetes to Docker, and VM management from libvirt to Incus. In general, I’ve found that with TrueNAS it’s best to stay on the Mission Critical or Conservative track unless there’s a new feature I want sooner. On the other hand, TrueNAS innovates faster, so you’ll see newer technologies and features sooner, along with more things you can do in the GUI. Proxmox is simple and stable, while TrueNAS is broad (the Swiss Army Knife of the network) and innovative.

TrueNAS vs Proxmox Comparison Table

| Feature | TrueNAS Scale | Proxmox VE |
|---|---|---|
| NAS / SAN (SMB, NFS, iSCSI Shares) | ✅ | ❌ |
| Applications (Emby, Minecraft, S3 Server, TFTP server, DDNS updater, Tailscale, VPN, etc.) | ✅ | ❌ |
| HA Clustering | ❌ Only Enterprise | ✅ |
| VMs | ✅ | ✅ |
| Advanced VM Management | ❌ | ✅ |
| LXC Containers | ❌ | ✅ |
| Docker Containers | ✅ | ❌ |
| Storage – ZFS | ✅ | ✅ |
| Storage – Ceph | ❌ | ✅ |
| Storage Management | ✅ | ❌ |
| Networking | ✅ | ✅ |
| Advanced Networking | ❌ | ✅ |
| ZFS Encryption | ✅ | ❌ |
| Backup Datasets | ✅ | N/A |
| Backup ZVOLs | ✅ | N/A |
| Backup VMs & Containers | ✅ | ✅ |
| Deduplicated Backups | ❌ | ✅ |
| Zero Knowledge Encrypted Backups | ✅ | ✅ |
| Basic Monitoring/Alerting | ✅ | ✅ |
| Comprehensive Monitoring/Alerting | ✅ | ❌ |
| Audit Logs | ✅ | ❌ |
| Hardware Management & Monitoring | ✅ | ❌ |
| Fleet / Cluster Management | ✅ | ✅ |

How Should Each Platform Improve?

In my opinion, these are the top two improvements each platform needs:

- TrueNAS needs to add better KVM management

- TrueNAS needs to add better networking (maybe Open vSwitch?)

- Proxmox needs to add Docker management alongside VM and LXC management.

- Proxmox needs to add GUI management of ZFS storage (replacing failed drives and such).

Survivability

One area that pertains to home labs is survivability in the event of your death. ☠️

Would your wife be able to get help maintaining or replacing what your system does? I think you’re more likely to find help with TrueNAS than Proxmox. Even someone only slightly technical could see, after a few minutes of going through the menu options, which services and applications are being used. Because TrueNAS is fairly opinionated and configured via the GUI, things are done consistently, and anyone who uses TrueNAS will feel right at home. Proxmox, on the other hand, lets you do whatever you want: you may have Red Hat, Ubuntu, Windows, or Docker images running in a VM. Who knows what insanity someone helping your family will discover? In both cases, keep good notes.

What do I run in my homelab?

Honestly, I would be happy with either solution; I’m grateful that both iX and Proxmox provide a free solution. I have configured both systems for organizations.

When I talk to car guys, they’re quick to recommend something overly exotic for my needs. I’ve had car guys tell me I should get a BMW, or a Corvette, etc. But I’m not a car guy; what I really want is to get from A to B reliably in the snow with as little maintenance and tinkering as possible. Because I love computers, I have to be careful not to do the same thing to others. There’s a certain joy in building out your servers with Ansible on Proxmox, and the more complex your needs, the more I’ll lean toward Proxmox over TrueNAS. But it’s not the right solution for everyone.

What prompted me to write this: I had two physical servers, one running TrueNAS Scale and one running Proxmox VE, and I decided to simplify and pare down to one system to make more room in my server rack. That forced me to think it through. Ultimately, I decided Proxmox was better for a lot of the virtualization needs in my homelab. Part of my decision is that I already have a lot built on Proxmox, and re-implementing it on TrueNAS would take time I don’t have, for what I would consider a lateral move with no real benefits.

But I like the NAS offering of TrueNAS and use a lot of its out-of-the-box features. So I run Proxmox on bare metal as my core infrastructure, with a TrueNAS VM under Proxmox acting as my NAS. I feel the NAS should sit under the hypervisor; in a small environment, that seems like where it belongs.

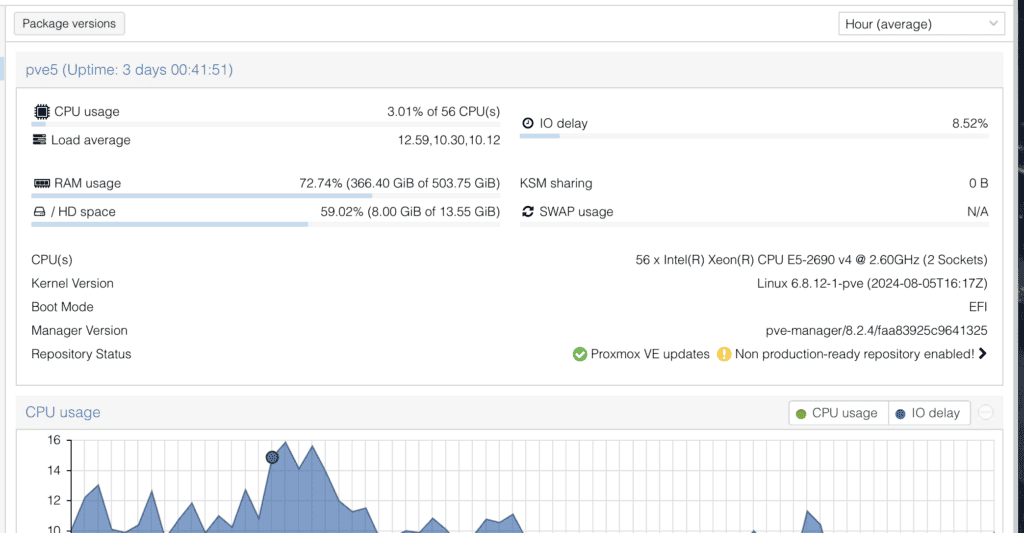

For those of you who want to know, the bare metal server is a Supermicro 2U with a pair of Xeon E5-2690 v4 CPUs (28 cores, 56 threads), 512GB of memory, one ZFS pool with 2 x Intel DC S3700 800GB SSDs mirrored, and another pool with 9 x 20TB Seagate Exos X20 drives in RAID-Z2 plus 1 x DC S3700 as a SLOG (ZIL) device. The TrueNAS Scale VM is given 6 cores, 32GB of memory, and 16TB of storage. You can often find 3-year-old decommissioned servers like this on eBay; search for “2U Supermicro FreeNAS” or “2U Supermicro IT mode” or similar. I’ve seen the one I have for as low as $300 (with a bit less memory). All you need to do is add drives and install TrueNAS or Proxmox.

For me, a rough (emphasis on rough) rule of thumb for Proxmox vs TrueNAS is this: if I’m providing primarily NAS and a few services for a home or office, I’ll likely deploy TrueNAS. If I’m deploying in a complex environment or planning to host applications to the world, I’d probably use Proxmox.

Hi Benjamin,

Thanks for the “advertisement” for my (forked) script ;). And thanks again for your original version, which is where I started! That was a huge help!

Please bear in mind that I’m aware of some issues that can cause it to crash (systemd will restart it). It’s not the end of the world if the initial fan speed is sufficient (I’m currently starting at 50%, hardcoded for now; I’m using 10000 rpm ARCTIC fans, and these can be tuned down A LOT. I’ll try to make it a variable soon).

So make 100% sure you also install https://github.com/luckylinux/cooling-failure-protection for redundancy in case all else fails. I’m not sure whether I will migrate cooling-failure-protection to the same (although “minimalist”) Python stack or keep it in Bash. For now, it’s the KISS philosophy for that one.

One issue occurs if there are events logged in the IPMI event log. Depending on the event type (e.g. “Intrusion Switch” or “Power ON”), the script might crash because the field it expects doesn’t exist in ipmitool’s CSV output. I need to fix this (I’ll try ASAP) and put try/catch blocks around most things, but please make 100% sure you get the other script installed as well!

I also observed on one system that if a device gets removed, the script can crash (I’m currently playing around with loop devices because of a very tricky/“special” ZFS-on-LUKS bug).

I’ll list those two issues on my GitHub issue tracker and try to address them ASAP.

I don’t want to scare you (or other people) away; I just want to make sure you have a “Plan B” in place **if** you are hit by these kinds of issues. I’m NOT suggesting they happen all the time. It strongly depends on the system.

The script is tested to the best of my abilities, but whenever you try new things on your own system, something unexpected tends to happen. And Murphy’s Law always applies, unfortunately…

About the servers: that’s a very nice price. I’m currently looking at an SC216 with 24 × 2.5” SSD bays, a single-socket 2011-3 (Xeon E5 v3/v4) board, and a very nice “barebone” price for chassis + motherboard, possibly with a 2697 v4, since I think I have enough 2U servers for HDD storage (SC825/SC826). But yeah, add 256GB or 512GB of RAM and the price shoots up quite easily… I’m not in the US, and duties/taxes/shipping basically double the price :(.

FYI, I added a basic try/except so reading the IPMI event log should NOT cause the script to crash anymore.

It’s in the current “main” (and “devel”) branch.

As always, Ben, lots of really good data around these technologies.

One point I thought I would add concerns evaluating the viability of container-based apps in general, especially on various NAS platforms. These apps are generally quicker and easier to deploy and run with a smaller overall footprint than full guest VMs in a virtual environment. In some cases, however, there is a high risk of the app itself not performing correctly and/or seriously impacting the NAS operations the device is primarily intended for.

In your examples, you call out Emby as one app you prefer to run on the NAS rather than giving it its own VM. If you do not require transcoding in any form (including recording live OTA TV), this can be a very viable option. If the content you are watching is of higher quality than your playback devices support, ESPECIALLY if you have 4K content you are trying to watch on devices like tablets and PCs, the server may need to transcode that content on the fly. This is a CPU-intensive operation that can bring file serving (or other apps) on a NAS to a halt.

The impact is likely higher on a commercially bought system, given that their CPUs are quite meager and they lack any meaningful RAM expansion options, but it should be considered case by case. For me, I would never be able to run either my Plex -or- my Emby server on any sort of NAS because of the amount of OTA recording I do and the on-the-fly transcoding it requires. I need to run each of them as a VM guest with dedicated CPU and RAM to ensure they have the necessary resources.

If one is only “file serving” that content and the remote client device has all of the capability to direct play what it’s retrieving, apps like Plex and Emby can run in a container quite nicely.

Hi, Mark! I agree, the NAS platforms can be configured quickly and easily. TrueNAS makes it quick and easy to get small, lightly used apps running. In the enterprise version of TrueNAS, the apps are disabled and it’s just a storage platform.

I’m actually not sure whether my Emby server ever transcodes. I only gave it 2 cores in Proxmox, and it’s never had any problems streaming to our TV and laptops (we never watch videos on our phones or tablets). Maybe that’s enough, or more likely it’s using the direct play you mentioned. Good point on storage contending with apps for CPU: especially when you’re running a low-powered 4- or 8-core CPU, I can see how it would get overwhelmed (and that’s probably why a lot of enterprise environments still separate hypervisor and storage).

On Proxmox, there are options to throttle VM CPU, memory, and disk I/O and throughput (and to allow temporary bursting, sort of like what AWS does). In TrueNAS, you can give apps a higher or lower proportion of CPU “shares,” so if a certain application takes too much CPU time from storage, you might be able to limit it. I haven’t run into that problem, but I think having 28 cores and a fairly light load helps!
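CPU shares (Proxmox’s per-VM setting is `cpuunits`) are relative weights, and they only matter when the CPUs are actually contended. A rough way to picture that is weighted max-min fairness: each busy group gets CPU in proportion to its weight, and any group that needs less than its slice hands the leftover to the others. The Python sketch below is a simplified model of that idea, not how the Linux scheduler is implemented, and the weights and demands in it are hypothetical.

```python
from typing import Dict


def weighted_fair_share(
    weights: Dict[str, float], demand: Dict[str, float], cores: float
) -> Dict[str, float]:
    """Weighted max-min fair allocation of `cores` among groups with given demands."""
    allocation: Dict[str, float] = {}
    pending = set(weights)
    capacity = cores
    while pending:
        total_weight = sum(weights[name] for name in pending)
        fair = {name: capacity * weights[name] / total_weight for name in pending}
        satisfied = [name for name in pending if demand[name] <= fair[name]]
        if not satisfied:
            # Everyone wants more than their slice: split what's left by weight.
            allocation.update(fair)
            break
        for name in satisfied:
            # Groups needing less than their slice get exactly what they need,
            # and the loop re-divides the leftover among the still-hungry groups.
            allocation[name] = demand[name]
            capacity -= demand[name]
            pending.remove(name)
    return allocation


if __name__ == "__main__":
    weights = {"storage": 2048, "emby": 1024, "minecraft": 1024}  # hypothetical weights
    demand = {"storage": 2, "emby": 28, "minecraft": 28}          # cores each would use
    print(weighted_fair_share(weights, demand, cores=28))
```

In the example, storage only needs 2 of its 14-core slice, so Emby and Minecraft split the remaining 26 cores evenly; if all three were saturated, storage’s double weight would guarantee it 14 cores.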

My main bottleneck is storage IOPS. Even with a ZIL SSD and a huge amount of memory for ARC, I frequently see 5% I/O delay in Proxmox (CPU waiting on storage). I configured my pool with 9 x 20TB disks in RAID-Z2, with a cold spare on the shelf. In hindsight, I would have done 4 mirrored pairs with a hot spare. But I do have a separate 2 x 800GB SSD mirror ZFS pool for things where I need guaranteed high IOPS (such as the web server VM running this site).
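To put rough numbers on the RAID-Z2 versus mirrors trade-off, here’s a small Python sketch. It uses the common rule of thumb that random write IOPS scale with the number of vdevs (about one disk’s worth per vdev), and it ignores RAID-Z padding, metadata, slop space, and TB versus TiB, so the capacities are upper bounds. The 200 IOPS per disk figure is a hypothetical placeholder for a 7200 rpm drive.

```python
from dataclasses import dataclass


@dataclass
class PoolLayout:
    name: str
    vdevs: int
    data_disks_per_vdev: int  # disks holding data, i.e. excluding parity or mirror copies
    disk_tb: float

    @property
    def usable_tb(self) -> float:
        # Upper bound: ignores ZFS metadata, RAID-Z padding, and slop space.
        return self.vdevs * self.data_disks_per_vdev * self.disk_tb

    def random_write_iops(self, per_disk_iops: float) -> float:
        # Rule of thumb: roughly one disk's worth of random write IOPS per vdev.
        return self.vdevs * per_disk_iops


if __name__ == "__main__":
    layouts = [
        PoolLayout("9 x 20TB RAID-Z2", vdevs=1, data_disks_per_vdev=7, disk_tb=20),
        PoolLayout("4 mirrored pairs + hot spare", vdevs=4, data_disks_per_vdev=1, disk_tb=20),
    ]
    for layout in layouts:
        print(f"{layout.name:30} ~{layout.usable_tb:.0f} TB usable, "
              f"~{layout.random_write_iops(200):.0f} random write IOPS")
```

Under these assumptions, the mirror layout trades roughly 60 TB of capacity (about 140 TB versus 80 TB) for about four times the random write IOPS, which is the trade-off behind the hindsight above.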

A few words concerning containers and ZFS…

About containers: you could install Docker on top of Proxmox VE, I guess (maybe even Portainer if you need it); people did that in the past. Since I wanted more isolation, I decided to create a "PodmanServer" KVM VM instead, so I could better control resources (CPU, RAM). At first it was running "Frankendebian" (Debian 12 Bookworm plus APT pinning for Podman from Testing, before the libc6 / 64-bit time_t migration that occurred on Debian Testing). Now I'm slowly converting those VMs to Fedora 40, since Podman is much better supported there (although SELinux is a different beast to handle).

On another point: nothing prevents you from doing PCIe passthrough to a TrueNAS VM if you prefer that (you'll likely need 2x LSI 9211-8i or 9207-8i HBAs, etc., for up to 12 drives, plus 1 or 2 boot drives for the Proxmox host itself), then using NFS storage (with a dedicated Linux bridge and firewall rules) for the Proxmox VE VMs as well. I did that early in my homelab experience with napp-it and OmniOS on ESXi 5.5 back then.

About IOWait, on the other hand: 5% IOWait isn't so bad. I was getting up to 70-80% IOWait at times, particularly on systems using ZFS on top of a ZVOL (notably my Podman [Docker] container VM). It would then take 30 s just to save a 1 kB file with nano…

https://forum.proxmox.com/threads/proxmox-ve-8-2-2-high-io-delay.147987/

Some people blame it on my use of consumer Crucial MX500 SSDs for root storage, but I think there is more to it. On a side note, I thought it had "basic" power loss protection, but it actually only has "at-rest power loss protection"… Thanks for nothing, Crucial!

Be aware that there is huge ZFS write amplification (10-40x, IIRC), especially for VMs/ZVOLs; there are several documented tests on the Proxmox VE forums. Adding LUKS into the mix increases that even more, let alone using ZFS on top of a ZVOL like I was doing for my Podman container VM (sigh). I recently migrated that to EXT4 on top of a ZVOL for that reason. If you do use ZFS on top of a ZVOL, make sure you only snapshot on one side, only compress on one side, AND either enable autotrim in the guest VM or trim the guest VM manually on a regular schedule.
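To put a 10-40x factor in perspective, here is a back-of-the-envelope Python sketch. It treats write amplification as a single end-to-end multiplier from guest writes to bytes the SSD actually writes (in reality ZFS, the ZVOL block size, LUKS, and the SSD's own firmware each contribute differently), and the 10 GB/day guest write rate is purely hypothetical.

```python
def ssd_tb_written_per_year(guest_gb_per_day: float, amplification: float) -> float:
    """TB the SSD actually writes per year, given guest writes and an
    end-to-end write-amplification factor (assumed to be one multiplier)."""
    return guest_gb_per_day * amplification * 365 / 1000


if __name__ == "__main__":
    guest_gb_per_day = 10.0  # hypothetical write rate inside the VMs
    for factor in (1, 10, 40):  # 1x baseline vs. the 10-40x range quoted above
        tb = ssd_tb_written_per_year(guest_gb_per_day, factor)
        print(f"{factor:>2}x amplification -> {tb:.1f} TB/year")
```

At 40x, a modest 10 GB/day inside the guests becomes about 146 TB/year on the drive, so an SSD rated for a few hundred TBW would use up its rating within a few years.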

Your Intel DC S3700 is quite old and relatively slow in terms of MB/s, but being MLC (as opposed to TLC, let alone QLC) it should still handle lots of IOPS. Write endurance is also top-notch. I was looking at it too, but I thought "it's 10 years old, calendar aging might be more of a factor than the drive's TBW rating". Some slightly more recent options might be the Intel S3610, the Intel S3510/S3520 (if you don't need as high a TBW endurance rating), the Micron 7300 PRO/MAX if you have a few PCIe slots to spare for M.2/U.2 with an adapter, or maybe the Samsung PM9A3. Anecdotally, I heard good things about the Kingston DC600M on the OpenZFS IRC channel, I think, although there isn't much of a track record there ("it worked for a few years with no issues so far" isn't really long-term).

About ZFS on LUKS, here is my bug analysis (partly LUKS to blame, partly systemd/udevd, I think, disconnecting the LUKS device during boot while it's in use): https://github.com/luckylinux/workaround-zfs-on-luks-bug

No action needed if it "works for you", but if you suddenly start getting a barrage of "zio" errors in the middle of the night and cannot SSH into your server or access the web GUI (which is how I discovered it), then you might be affected. It's still pretty rare, so do NOT be paranoid about it; just know that there is a known issue with a known workaround (add a LOOP device in the middle of the ZFS-LUKS "stack"). For me, the challenge was a systemd/udev race condition, since I use it as my root pool (rpool). Just reach out on the OpenZFS IRC channel if you have weird issues there; they have been a huge help. I'm just a homelabber, not an IT expert, so there was a lot of learning along the way in setting up that initramfs script (and mainly the debug tool I used to troubleshoot why my server kept throwing errors, which sent everything via netcat to my desktop for log analysis; I hate the BusyBox shell).

I do NOT know whether FreeBSD is affected too, should you go with the other TrueNAS offering (commercial?) using GELI+ZFS. Usually the grass is NOT greener on the other side :D.

Hi, Stefano! I watch the SMART status on those SSDs and they claim 50% life left (I'm guessing age is not a factor in that), so we'll see… Either way, there are two of them and it's backed up. |:-)

At-rest power loss protection? 🤡 I can't think of a time my drives are at rest!

On your ZFS LUKS issue, is that only a problem for the rpool? I suppose you could leave the rpool unencrypted and just encrypt the main pool. I wasn't aware of the write amplification with LUKS, but I think native ZFS encryption is the best option (it's what TrueNAS supports out of the box, and it looks like on Proxmox it can be enabled via the CLI at pool creation time, but you'll lose a few VM migration options, so I probably won't do it on mine).

Hi Benjamin,

Whatever SMART tells me… I don't trust it :D. With several PB of endurance on those Intel S3700s, I'm more concerned about calendar age (10+ years), especially for the controller, capacitors, etc., than about the endurance itself, given the meager < 100 TBW/year I write on a 1 TB drive. I don't think you'll have any problem with that :).
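A quick way to see why calendar age dominates here is to turn an endurance rating into years. The Python sketch below uses example inputs only: an 800 GB drive rated at 10 drive writes per day (DWPD) over a 5-year warranty, 50% of rated life remaining, and 100 TB written per year. Check the actual datasheet and your own SMART data before relying on numbers like these.

```python
def rated_endurance_tb(capacity_tb: float, dwpd: float, warranty_years: float) -> float:
    """Convert a drive-writes-per-day rating into total terabytes written (TBW)."""
    return capacity_tb * dwpd * 365 * warranty_years


def years_left(endurance_tb: float, fraction_remaining: float, tb_per_year: float) -> float:
    """Years until the remaining rated endurance is used up at a steady write rate."""
    return endurance_tb * fraction_remaining / tb_per_year


if __name__ == "__main__":
    # Example inputs, not vendor-confirmed figures for any specific drive.
    tbw = rated_endurance_tb(capacity_tb=0.8, dwpd=10, warranty_years=5)
    print(f"rated endurance: {tbw:.0f} TB")
    print(f"years left at 100 TB/year: {years_left(tbw, 0.5, 100):.0f}")
```

With those inputs the rated endurance works out to about 14,600 TB, and the remaining half would last roughly 73 years at 100 TB/year, far longer than the capacitors or controller are likely to.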

ZFS native encryption is so-so, and there are bugs as well, e.g. when doing `zfs send | ssh zfs receive`.

ZFS encryption may have some advantages, but it also changes the way some things work underneath, including security. Less (or no) support for Clevis+Tang auto-unlock was another point that originally made me choose LUKS.

The original ZFS LUKS issue was reported on a "data" pool, and the quick fix of introducing a "LOOP" middle-man device seemed to resolve it by "standardizing" the way sectors/blocks are written.

In my case, applying that fix from a LiveUSB chroot AND issuing `zpool reopen` (or `zpool reopen rpool`) from the LiveUSB/chroot environment fixed the issue. That was a relatively quick fix. Previously, a single `apt update && apt dist-upgrade` would start a barrage of zio errors (looking at them showed only about 2-4 seconds/blocks of errors per device, but according to my tests ZFS "keeps trying" non-stop most of the time). You could see iostat (trying to) write at, say, 150 MB/s and (trying to) read at 150 MB/s as well. Non-stop. Drive LEDs blinked non-stop and the system couldn't get anything done…

As I said, disabling some systemd services AND switching back to the Intel onboard SATA controller finally allowed the system to start working again after roughly 1-2 weeks of troubleshooting and several tests.

So in short, it's:

a) (root pool and data pools) a LUKS/ZFS interfacing issue (solved via a "LOOP" middle-man device)

b) (root pool only) **PLUS** an issue with the systemd-cryptsetup / systemd-cryptsetup-generator service

c) (root pool only) **PLUS** a race condition and timing issue, I guess, with systemd-udevd (doing a partition rescan at the wrong moment and kicking the LUKS device off while it's in use… SMART :D).

Good to hear. Thanks for working to make it stable!

Great article, definitely something to think about as I get rid of my Synology.

One question: how can I get those title pictures as canvas wall art, without the wording? Those are awesome!

Hi, Sean! This was created by ChatGPT. Here's the original before I overlaid the logos.

Proxmox has smartd, so I get an email when a drive is alerting (I always set my email address in the install wizard). It works on any drive on an HBA. I usually run Dell PERC (MegaRAID) cards, which send email alerts via the megaclisas-status package. TurnKey Linux containers offer some easy options for Samba on Proxmox.
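If you want to script the same overall health check yourself (for example, to feed a dashboard), here is a minimal Python sketch that reads smartctl's JSON output. It assumes smartmontools 7.0 or newer for the `-j` (JSON) flag and relies on the `smart_status.passed` field; it only looks at the overall verdict and is not a replacement for smartd's continuous monitoring and email alerts. Drives behind a MegaRAID controller typically need smartctl's `-d megaraid,N` option, which this sketch does not handle.

```python
import json
import subprocess
from typing import Optional


def health_passed(smartctl_json: str) -> Optional[bool]:
    """Return smart_status.passed from smartctl JSON output, or None if absent."""
    data = json.loads(smartctl_json)
    status = data.get("smart_status")
    if not isinstance(status, dict) or "passed" not in status:
        return None
    return bool(status["passed"])


def check_device(device: str) -> Optional[bool]:
    """Run `smartctl -j -H` on a device (usually needs root).

    smartctl's exit status is a bitmask that can be non-zero even when the
    JSON is usable, so this does not raise on a non-zero return code.
    """
    result = subprocess.run(
        ["smartctl", "-j", "-H", device], capture_output=True, text=True
    )
    if not result.stdout.strip():
        return None  # no JSON at all, e.g. unsupported device
    return health_passed(result.stdout)


if __name__ == "__main__":
    # Trimmed, hand-written sample shaped like smartctl's JSON output.
    sample = '{"device": {"name": "/dev/sda"}, "smart_status": {"passed": true}}'
    print(health_passed(sample))
```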

Great article. I'm about to go TrueNAS with Jellyfin, Prometheus, and 2 VMs. I plan to put the VMs in a DMZ, isolated from the rest of my network.

“Backup” is not a verb. :-(

I don’t like to verb nouns, but sometimes it slips my mind!