Amazon Lightsail has entered the VPS market, competing directly with DigitalOcean and Vultr. I, for one, welcome more competition in the $5 cloud server space. I wanted to see how they perform, so I spun up 24 cloud servers (8 for each provider) and ran some benchmarks.

$5 Cloud Server Providers Compared

DigitalOcean, Vultr, and Amazon Lightsail also offer more expensive plans, but this post deals only with the low-end $5 plans. Here's how they compare:

DigitalOcean

- 512MB Memory

- 20GB SSD (extra block storage @ $0.10/GB/month)

- 1TB Bandwidth ($0.02/GB overage fee in U.S.)

- Free DNS

- Best team management – DigitalOcean lets you create multiple teams and add or remove users from each team.

- 99.99% SLA

- Floating IPs

- Ubuntu, FreeBSD, Fedora, Debian, CoreOS, CentOS

Vultr

- 768MB Memory

- 15GB SSD (extra block storage @ $0.10/GB/month)

- 1TB Bandwidth ($0.02/GB overage fee in U.S.)

- Free DNS

- Account sharing – lets you set up multi-user access.

- 100% SLA

- Floating IPs (currently can't be set up automatically; requires a support request)

- Ubuntu, FreeBSD, Fedora, Debian, CoreOS, CentOS, Windows, or any OS from your own custom ISO.

Amazon Lightsail

- 512MB Memory

- 20GB SSD (block storage not available)

- 1TB Bandwidth ($0.09/GB overage fee in U.S.)

- 3 free DNS zones (with name-server redundancy across TLDs)

- 99.95% SLA

- Amazon Linux or Ubuntu
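To put the SLA percentages above in perspective, here is a rough conversion from an uptime percentage to the downtime it allows per month. It assumes a 30-day month; each provider defines its own measurement window and credit terms, and an SLA is a promise of service credits rather than a guarantee of uptime, so treat the output as a ballpark figure.

```sh
#!/bin/sh
# Convert an SLA uptime percentage into the downtime it allows per month.
# Assumes a 30-day month (43,200 minutes); real SLAs define their own windows.
sla_downtime_minutes() {
  awk -v p="$1" 'BEGIN { printf "%.2f\n", (100 - p) / 100 * 30 * 24 * 60 }'
}

for sla in 100 99.99 99.95; do
  echo "$sla% SLA -> $(sla_downtime_minutes "$sla") minutes of downtime per month"
done
# 100% SLA -> 0.00 minutes of downtime per month
# 99.99% SLA -> 4.32 minutes of downtime per month
# 99.95% SLA -> 21.60 minutes of downtime per month
```

Under that assumption, the gap between 99.99% and 99.95% is roughly 4 minutes versus 22 minutes a month.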

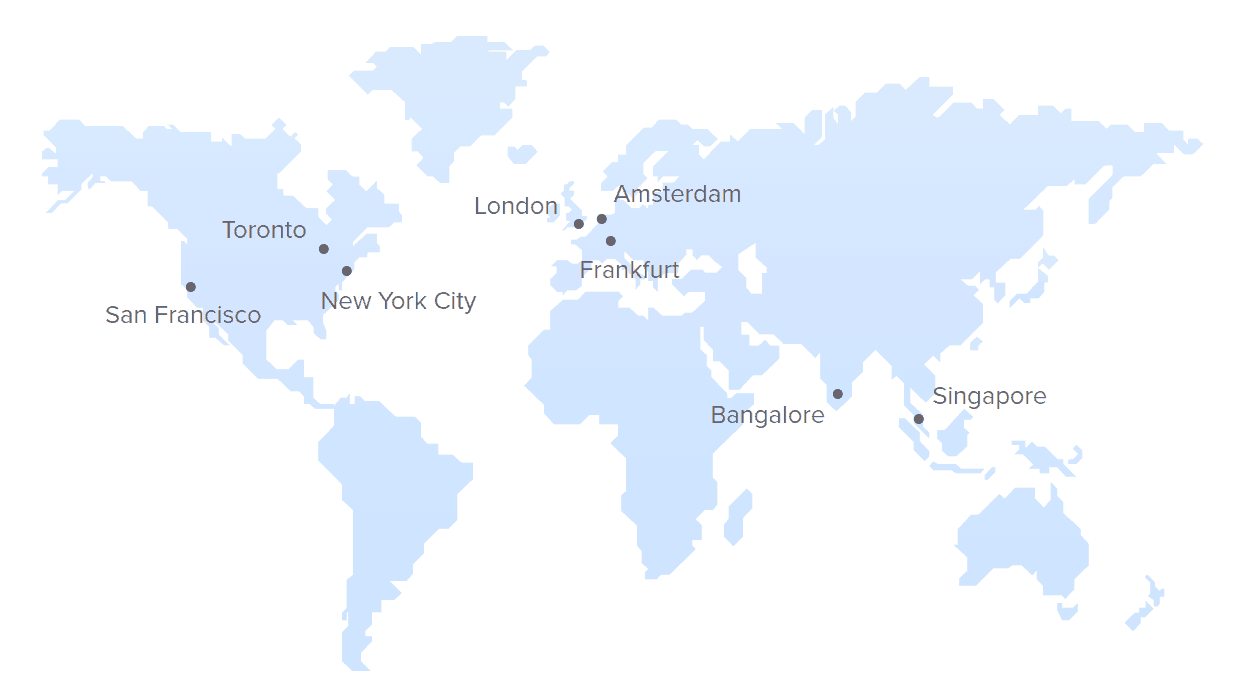

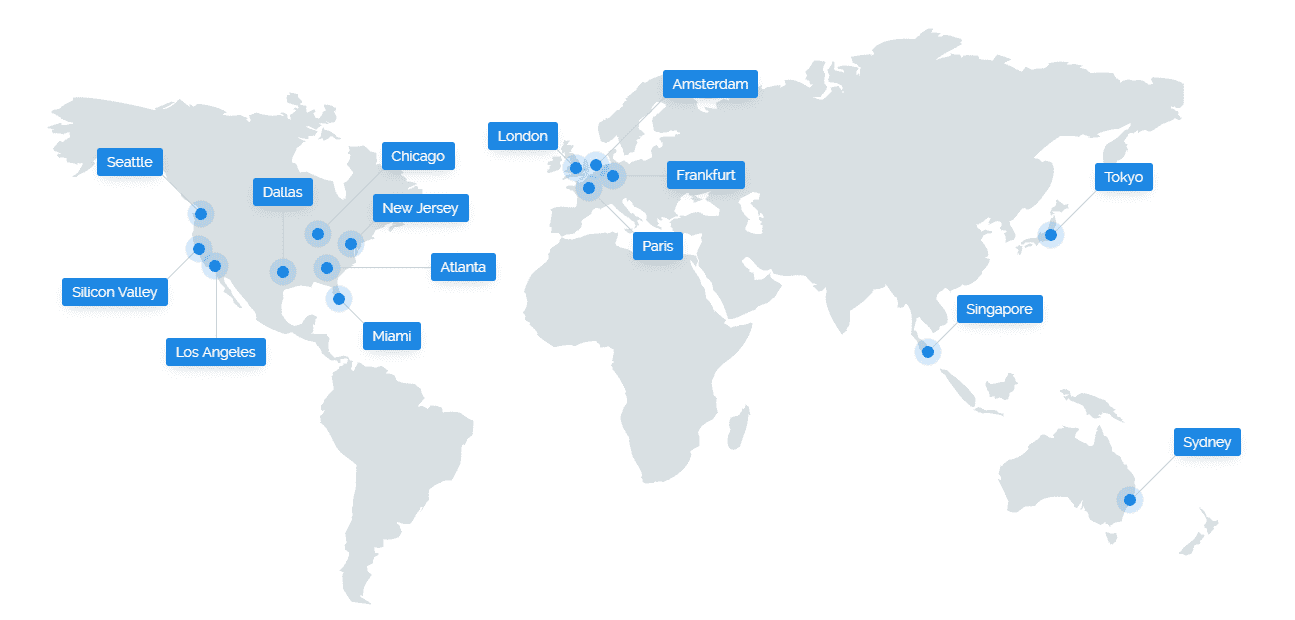

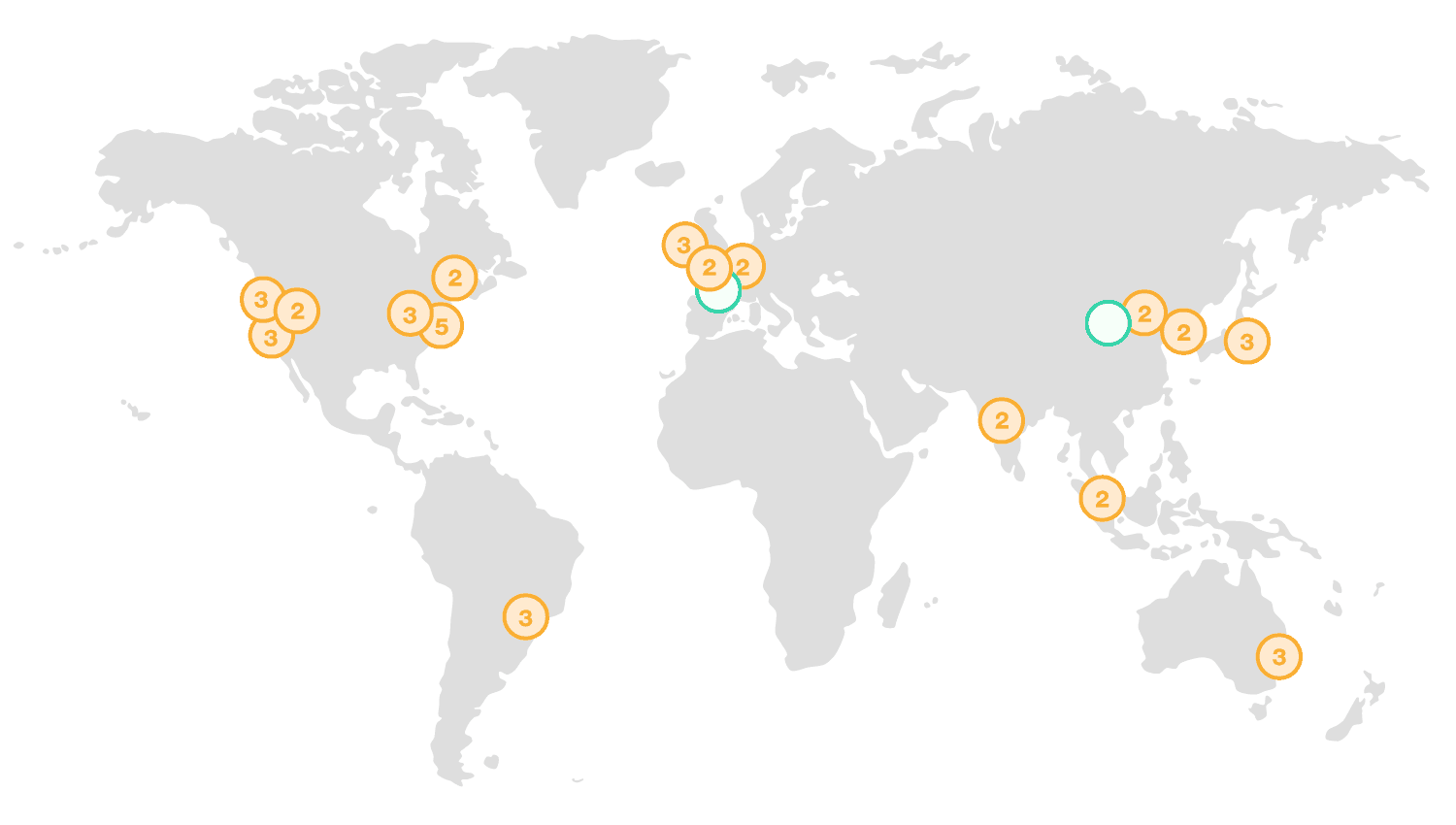
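The overage rates differ far more than the base plans do. A minimal sketch of the arithmetic, using the per-GB rates from the lists above and treating the 1TB allowance as 1,000GB (whether a provider counts a TB as 1,000 or 1,024GB is an assumption here); `overage_cost` is a hypothetical helper name:

```sh
#!/bin/sh
# Estimate bandwidth overage cost in US dollars.
# Args: GB transferred this month, GB included in the plan, price per extra GB.
overage_cost() {
  awk -v used="$1" -v included="$2" -v rate="$3" 'BEGIN {
    extra = used - included
    if (extra < 0) extra = 0   # staying under the allowance costs nothing extra
    printf "%.2f\n", extra * rate
  }'
}

# Example: 1,500GB transferred on a plan that includes 1,000GB.
echo "DigitalOcean or Vultr: \$$(overage_cost 1500 1000 0.02)"  # $10.00
echo "Lightsail:             \$$(overage_cost 1500 1000 0.09)"  # $45.00
```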

Geographic Locations

All three providers have multiple geographic locations worldwide. Vultr has the most locations in the United States, while Amazon has more locations worldwide (although only Virginia was available to Lightsail at the time of writing).

DigitalOcean Locations

Vultr Global Locations

Amazon Lightsail Global Infrastructure

API Automation

All three providers offer an API. In practice, DigitalOcean has been around the longest, so its API is the most likely to be supported by automation tools such as Ansible. I expect support for the other APIs to catch up soon.
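As an illustration of what API automation looks like, here is a hedged sketch that creates a DigitalOcean server (droplet) through its v2 REST API with curl. The `create_droplet` helper, the `DO_TOKEN` variable name, and the `512mb` / `ubuntu-16-04-x64` slugs are assumptions from the era of the $5 plan; slugs change over time, so check the current ones with `GET /v2/sizes` and `GET /v2/images` before relying on them.

```sh
#!/bin/sh
# Hypothetical helper: create a small DigitalOcean droplet via the v2 API.
# The size and image slugs are assumptions and may have been retired since.
# Needs DO_TOKEN set to an API token. Set DRY_RUN=1 to print the request
# instead of sending it.
create_droplet() {
  name="$1"
  region="${2:-nyc3}"
  body=$(printf '{"name":"%s","region":"%s","size":"512mb","image":"ubuntu-16-04-x64"}' \
    "$name" "$region")
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "POST https://api.digitalocean.com/v2/droplets $body"
    return 0
  fi
  curl -sS -X POST "https://api.digitalocean.com/v2/droplets" \
    -H "Authorization: Bearer ${DO_TOKEN:?DO_TOKEN is not set}" \
    -H "Content-Type: application/json" \
    -d "$body"
}

# Usage: DO_TOKEN=your-api-token create_droplet bench-do-1 nyc3
```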

And finally…

Benchmarks

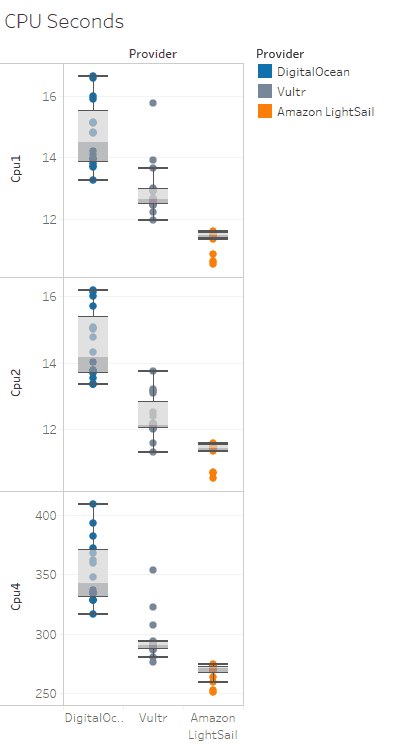

CPU Test – Calculating Primes

This test measures the number of seconds needed to compute prime numbers. Amazon Lightsail consistently outperformed on the CPU test, with Vultr coming in second and DigitalOcean last. CPU1 and CPU2 calculate primes up to 10,000 using 1 and 2 threads respectively; CPU4 uses 4 threads to calculate primes up to 100,000.

Lower is better.
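The CPU tests above map naturally onto sysbench's cpu test. This is a sketch of how the three variants could be run, not a copy of the meta-vps-bench scripts. It assumes the older sysbench 0.4.x option syntax, which reports elapsed time for a fixed amount of work; sysbench 1.0+ renamed the options and runs for a fixed time by default. `run_or_print` and `run_cpu_tests` are hypothetical helper names.

```sh
#!/bin/sh
# Hypothetical sketch of the CPU1/CPU2/CPU4 prime tests with sysbench 0.4.x.
# Set DRY_RUN=1 to print the commands rather than run them.
run_or_print() {
  if [ "${DRY_RUN:-0}" = "1" ]; then echo "$*"; else "$@"; fi
}

run_cpu_tests() {
  # Each line: <threads> <highest number to test for primality>
  while read -r threads max_prime; do
    # sysbench prints the elapsed time on its "total time:" line
    run_or_print sysbench --test=cpu --cpu-max-prime="$max_prime" \
      --num-threads="$threads" run </dev/null
  done <<EOF
1 10000
2 10000
4 100000
EOF
}

# Usage: run_cpu_tests          (or DRY_RUN=1 run_cpu_tests to preview)
```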

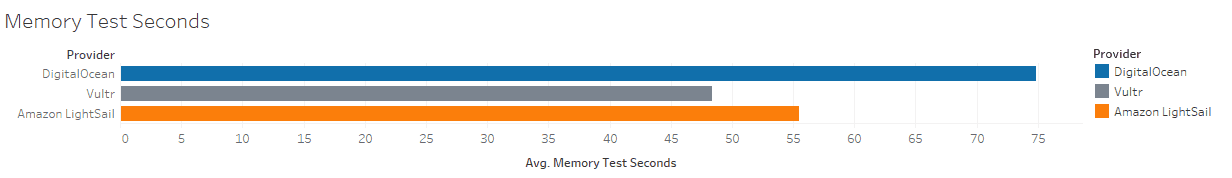

Memory

Lower is better.

(I accidentally left the memory test out of my parser script and didn't realize it until the last test had run, so these are averages of 4 results per provider.)
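Averaging raw results per provider, as done for the memory chart, is a short awk job. This sketch assumes a hypothetical two-column input format (provider name, then value); it is not what the repository's parse.sh does, and `mean_by_provider` is an illustrative name.

```sh
#!/bin/sh
# Average benchmark results per provider.
# Input (hypothetical format): one "<provider> <value>" pair per line on stdin.
# Output: "<provider> <mean> <count>", sorted by provider name.
mean_by_provider() {
  awk 'NF >= 2 { sum[$1] += $2; n[$1]++ }
       END { for (p in sum) printf "%s %.2f %d\n", p, sum[p] / n[p], n[p] }' | sort
}

# Usage:
#   printf 'vultr 1.0\nvultr 2.0\ndo 3.0\n' | mean_by_provider
#   do 3.00 1
#   vultr 1.50 2
```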

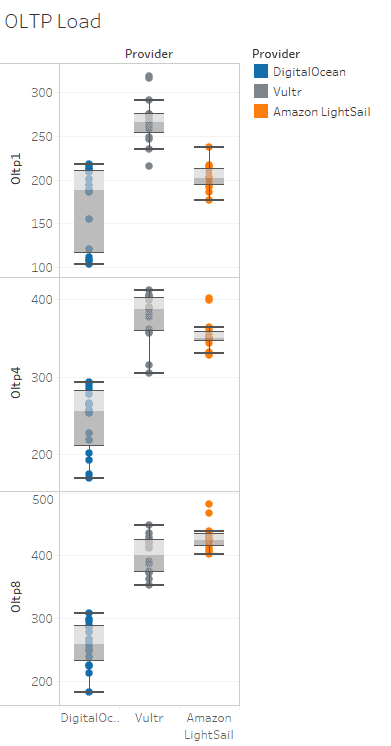

OLTP (Online transaction processing)

Higher is better.

The OLTP load test simulates a transactional database by running random inserts, updates, and reads against a MariaDB database, so it is sensitive to the latency of each of those operations. CPU, memory, and storage latency can all affect performance, so it's a good all-around indicator. The result is reported in transactions per second. In this area Vultr outperformed DigitalOcean and Amazon Lightsail in the 2- and 4-thread tests, while Lightsail took the lead in the 8-thread test. I don't know why Lightsail performed better as the thread count went up. My guess is that while Lightsail doesn't offer the fastest single-threaded storage IOPS, it may have better multi-threaded IOPS, but I can't say for sure without running different kinds of tests. DigitalOcean performed the worst in all tests, probably due to its slower CPU and memory.
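The transactions-per-second figure comes from the summary sysbench prints at the end of an OLTP run. A sketch of pulling that number out with awk, assuming the `transactions: N (X per sec.)` summary-line format; `oltp_tps` is a hypothetical name and the sample line in the comments is made up for illustration, not a result from these tests.

```sh
#!/bin/sh
# Extract transactions per second from sysbench OLTP output on stdin.
# Assumes a summary line shaped like:
#   transactions:                        10000  (205.75 per sec.)
oltp_tps() {
  # $3 is "(205.75"; strip the parentheses and print the first match only
  awk '/transactions:/ { v = $3; gsub(/[()]/, "", v); print v; exit }'
}

# Usage: sysbench <your OLTP options> run | oltp_tps
```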

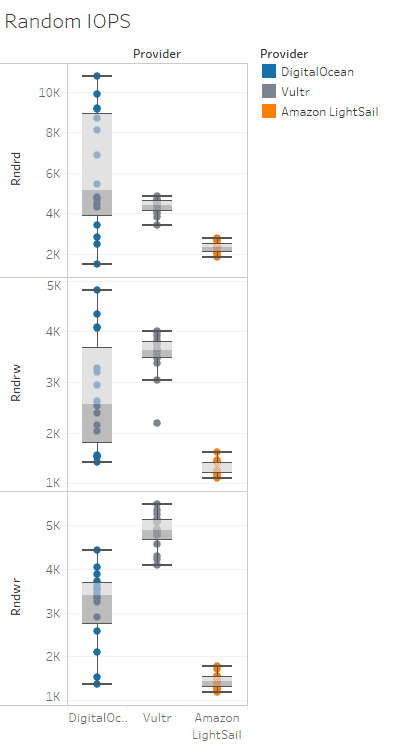

Random IOPS

Higher is better.

Results are in transactions per second. In random IOPS, Vultr provided the best consistent performance, DigitalOcean came in second with wide variance, and Lightsail came in last but was by far the most predictable.
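"Wide variance" and "most predictable" can be put into numbers with the coefficient of variation (standard deviation divided by the mean). A minimal sketch, assuming one numeric result per line of input and using the population standard deviation; `consistency` is a hypothetical helper name.

```sh
#!/bin/sh
# Summarise run-to-run consistency of one provider's results.
# Input: one numeric result per line on stdin.
# Output: "<mean> <stddev> <cv%>"; a lower coefficient of variation
# means more predictable performance.
consistency() {
  awk 'NF { x[++n] = $1; s += $1 }
       END {
         if (n == 0) exit 1
         m = s / n
         for (i = 1; i <= n; i++) ss += (x[i] - m) ^ 2
         sd = sqrt(ss / n)                 # population standard deviation
         cv = (m != 0) ? 100 * sd / m : 0
         printf "%.2f %.2f %.1f%%\n", m, sd, cv
       }'
}

# Usage: printf '2\n4\n4\n4\n5\n5\n7\n9\n' | consistency   # 5.00 2.00 40.0%
```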

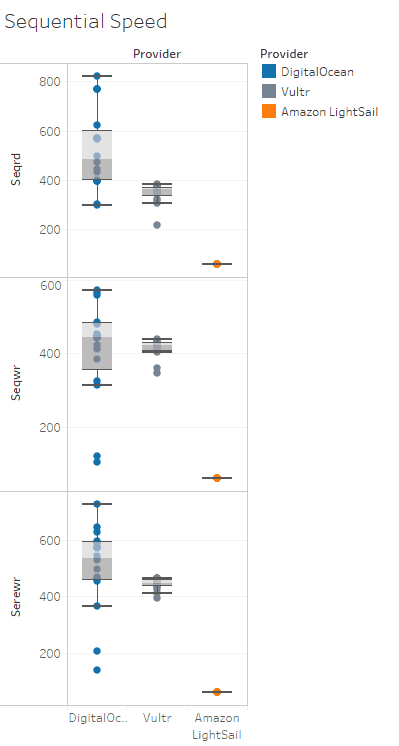

Sequential Reads / Writes / Re-writes

Higher is better.

This simply measures sequential read/write speeds on the disk. Vultr offers the most consistently high performance; DigitalOcean is all over the place but generally better than Lightsail, which comes in last.
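The read / write / re-write categories line up with sysbench's fileio test modes (`seqrd`, `seqwr`, `seqrewr`), with `rndrw` as one option for random I/O. A hedged sketch of such a run, again assuming sysbench 0.4.x syntax; the 2G default file size and the exact mode list are assumptions, not the settings behind these charts, and the helper names are hypothetical.

```sh
#!/bin/sh
# Hypothetical sketch of random and sequential disk tests with sysbench fileio.
# Set DRY_RUN=1 to print the commands rather than run them.
run_or_print() {
  if [ "${DRY_RUN:-0}" = "1" ]; then echo "$*"; else "$@"; fi
}

run_fileio_tests() {
  size="${1:-2G}"
  # Create the test files once, run each mode, then delete the files.
  run_or_print sysbench --test=fileio --file-total-size="$size" prepare
  for mode in rndrw seqwr seqrewr seqrd; do
    run_or_print sysbench --test=fileio --file-total-size="$size" \
      --file-test-mode="$mode" run
  done
  run_or_print sysbench --test=fileio --file-total-size="$size" cleanup
}

# Usage: run_fileio_tests 2G    (run from a directory on the disk under test)
```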

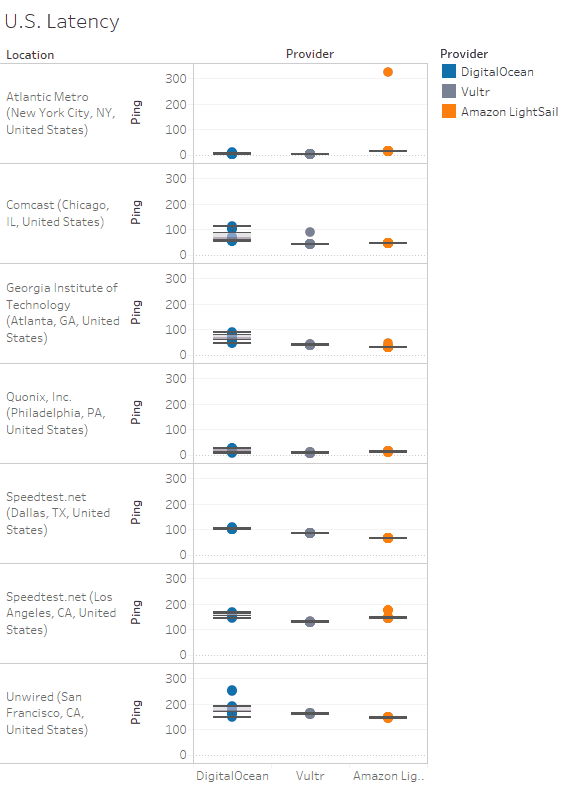

Latency (Ping ms) U.S. Locations

Lower is better.

U.S. latency is close enough across all three providers that the differences don't matter.

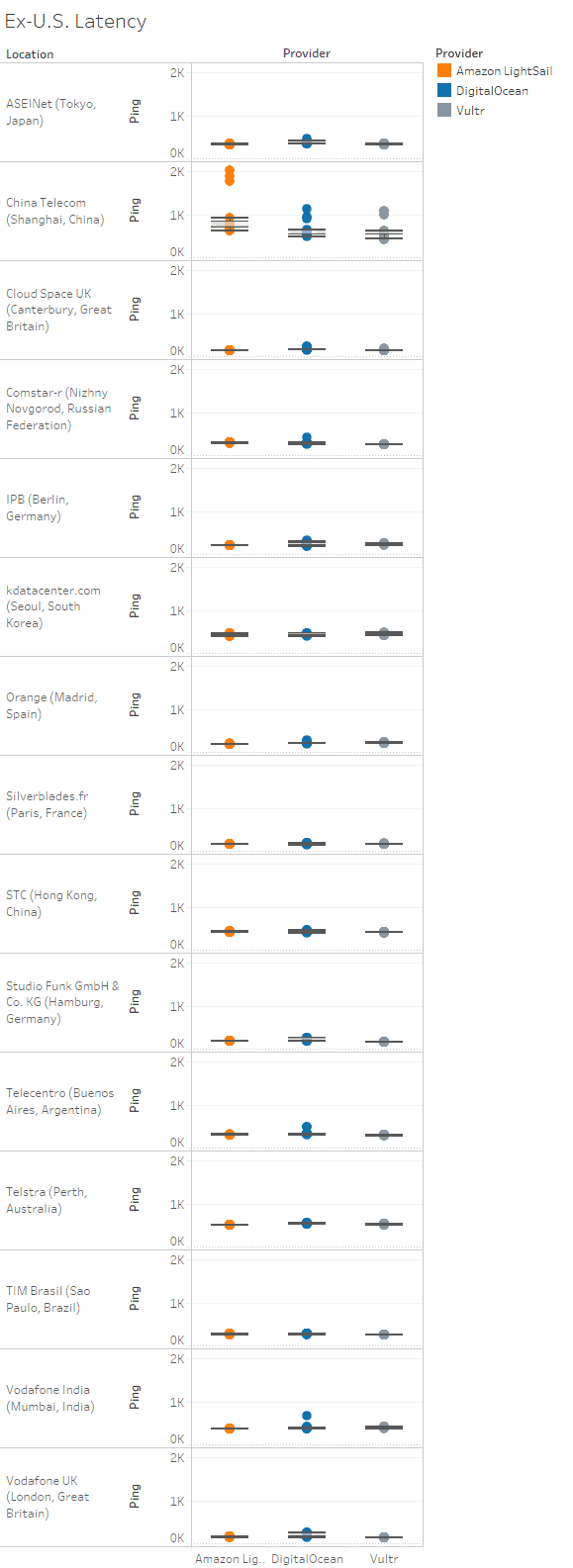

Latency (Ping ms) Worldwide Locations

Lower is better.

For international latency, the results are again pretty close.
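If you want comparable latency figures of your own with plain `ping`, one approach is to read the average from ping's summary line. A minimal sketch, assuming the Linux (`rtt min/avg/max/mdev`) or BSD/macOS (`round-trip min/avg/max/stddev`) summary format; `avg_ping_ms` is a hypothetical name.

```sh
#!/bin/sh
# Pull the average round-trip time (ms) out of ping's summary line, e.g.
#   rtt min/avg/max/mdev = 10.123/11.456/12.789/0.500 ms     (Linux iputils)
#   round-trip min/avg/max/stddev = 10.1/11.4/12.7/0.5 ms    (BSD/macOS)
# Splitting on "/" puts the average in field 5 for both formats.
avg_ping_ms() {
  awk -F'/' '/min\/avg\/max/ { print $5; exit }'
}

# Usage: ping -c 10 example.com | avg_ping_ms
```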

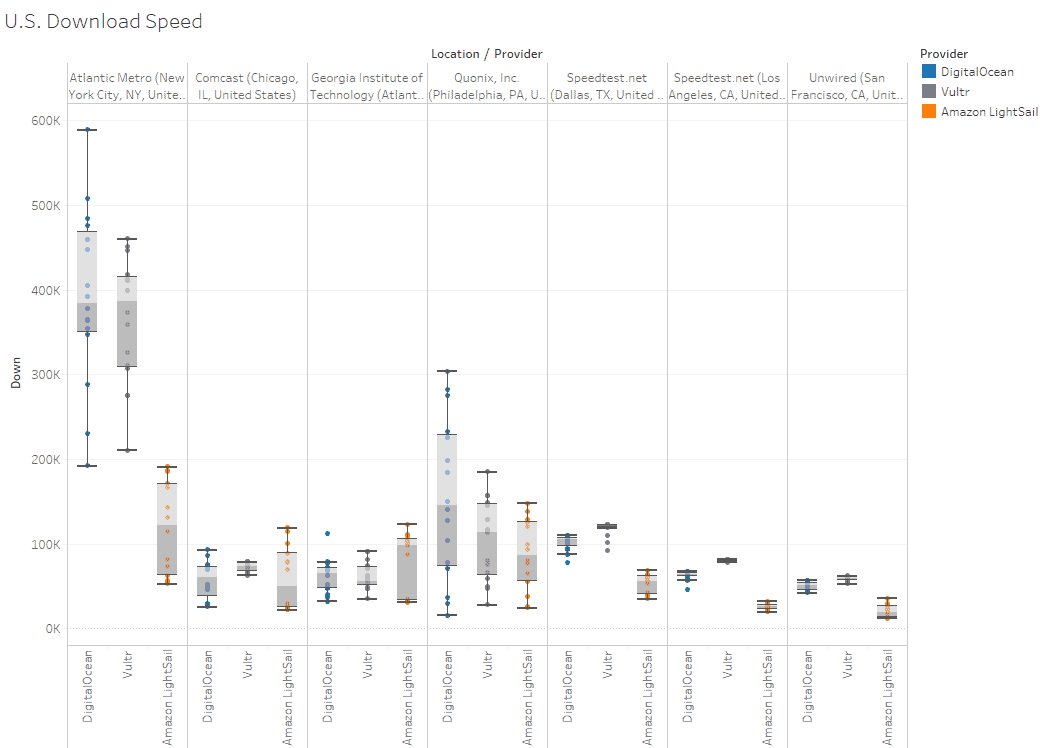

Download Speed Tests from U.S. Locations

Higher is better.

This test downloads data from various locations. It's really hard to draw any meaningful conclusions from it… the faster peering in New York is probably because the DigitalOcean and Vultr servers are in the New York area, while Lightsail's are in Virginia.
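If you run speedtest-cli yourself with its `--simple` flag, it prints three lines (Ping, Download, Upload) that are easy to collect into one CSV row per run for charting. A sketch, assuming that output format; `speedtest_csv` is a hypothetical name and `<id>` in the usage comment is a placeholder for a server ID from `speedtest-cli --list`.

```sh
#!/bin/sh
# Turn speedtest-cli --simple output into one CSV row:
#   ping_ms,download_mbps,upload_mbps
# Expected input:
#   Ping: 12.345 ms
#   Download: 123.45 Mbit/s
#   Upload: 67.89 Mbit/s
speedtest_csv() {
  awk '$1 == "Ping:"     { p = $2 }
       $1 == "Download:" { d = $2 }
       $1 == "Upload:"   { u = $2 }
       END { printf "%s,%s,%s\n", p, d, u }'
}

# Usage: speedtest-cli --simple --server <id> | speedtest_csv
```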

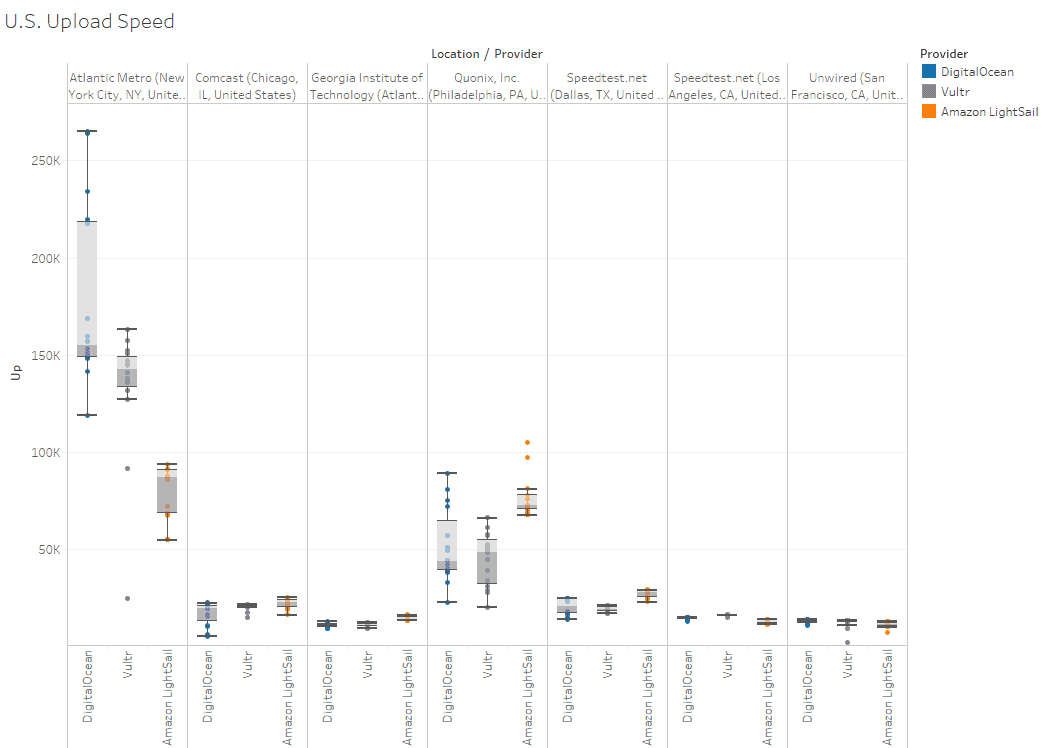

Upload Speed Tests to U.S. Locations

Higher is better.

Because the results are so similar, I think the bandwidth bottleneck is on the other end or at the peering points.

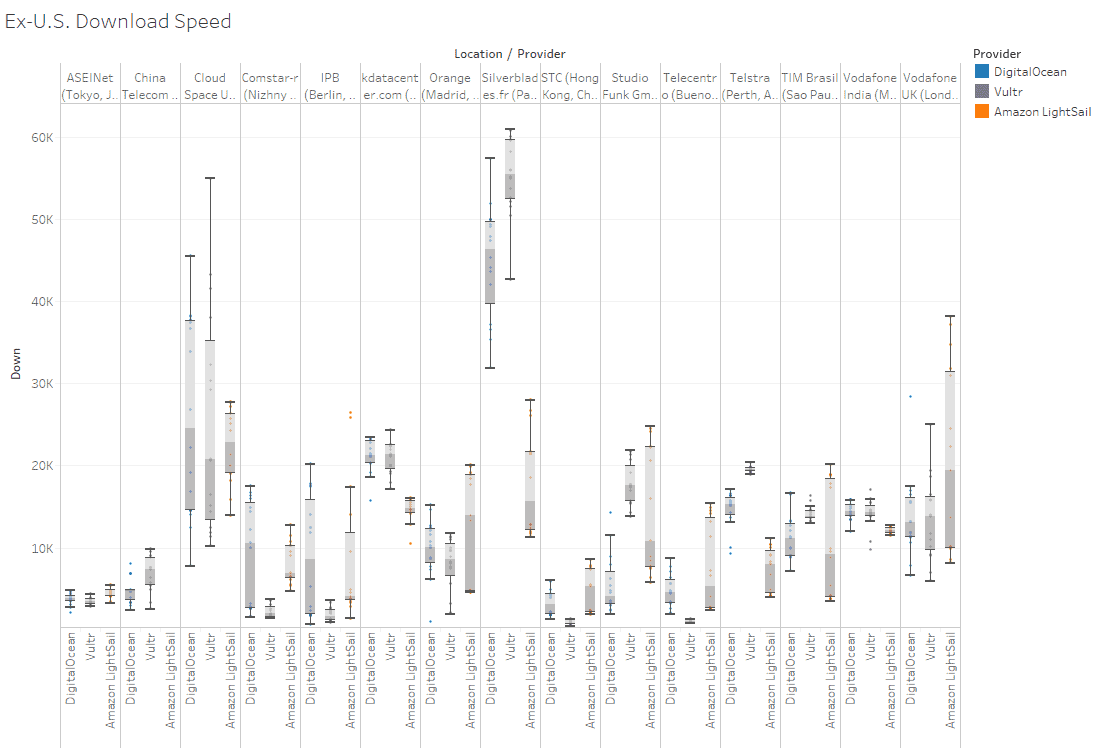

Download Speed Tests from Worldwide Locations

Higher is better.

Who knows what one could conclude from this. It seems like the providers have different quality peering to different worldwide locations, but there are so many variables that it's hard to say.

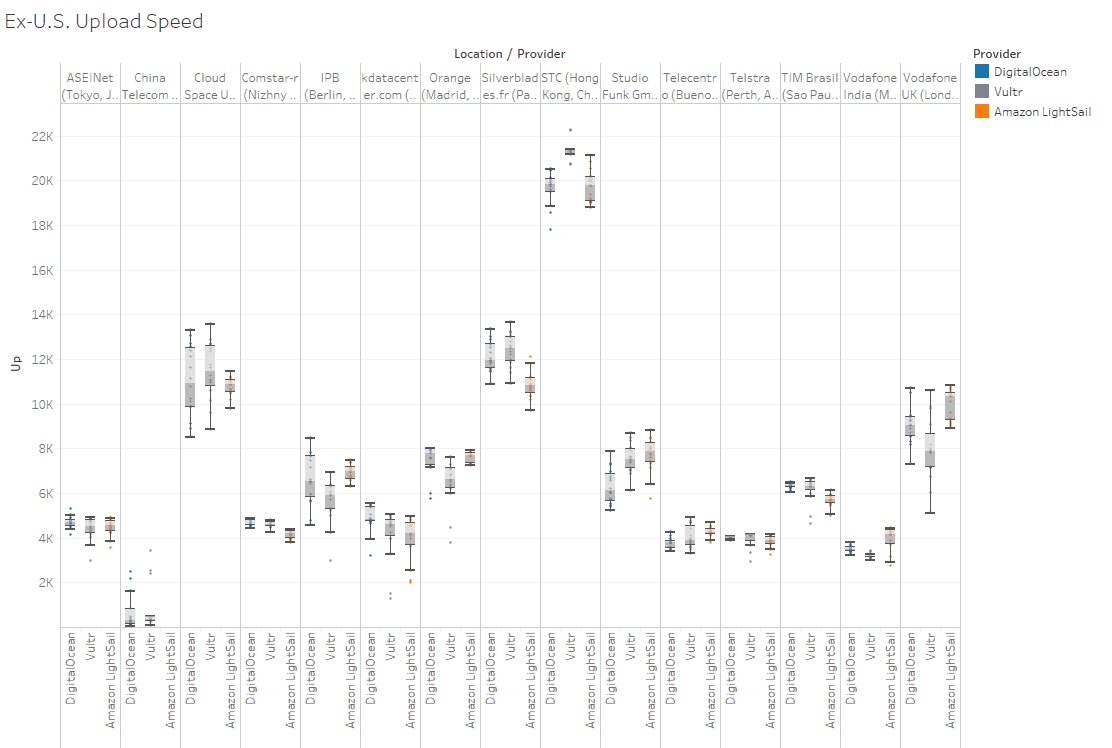

Upload Speed Tests to Worldwide Locations

Higher is better.

Similar groupings for the most part.

Testing Methodology

I spun up 24 x $5 servers, 8 for each VPS provider. I spun up 12 servers yesterday and ran the tests, destroyed the VMs, then created 12 new servers today and repeated the tests. All tests were run in the eastern United States. I chose that region because Virginia is currently the only location available in Amazon Lightsail, so to get as close as I could, I deployed the Vultr and DigitalOcean servers in their New York (and New Jersey) data centers. New York is a great place to put a server if you're trying to provide low latency to the major population centers in the United States and Europe without using a CDN.

If a provider had multiple data centers in a region, I tried to spread the servers out.

- DigitalOcean – I deployed 4 servers in NYC3, 2 in NYC2, and 2 in NYC1.

- Vultr – All 8 servers deployed in their New Jersey data center.

- Amazon Lightsail – Deployed in their Virginia location, 2 in each of their four AWS availability zones.

All the tests I ran were relatively short; I did not benchmark sustained loads, which may produce different results. My general use case is a web server or small build server with intermittent workloads. I often spin up servers for a few hours or days and then destroy them once they've finished their tasks.

Testing Scripts

The testing scripts I used are available in my GitHub meta-vps-bench repository. They are very rudimentary and could be improved; they run sysbench and speedtest benchmarks. The following commands were run on each server as root:

```sh
# Run as root on each new server
apt update
apt upgrade
reboot

# Once the server is back up, log in again and run:
git clone https://github.com/ahnooie/meta-vps-bench.git
cd meta-vps-bench
./setup.sh
./bench.sh
./parse.sh
cat speed*.result
```

I tried to stagger the start of the tests so that multiple speedtests against the same location were unlikely to run at the same time… but it may not always have worked out that way. I ran all tests twice per server, which gives 48 total results (16 for each provider).
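One simple way to stagger start times is a random delay on each server before the benchmark begins. A sketch, assuming a Linux host with `/dev/urandom`; the 600-second window is an arbitrary example, not the delay used for these benchmarks, and `stagger_seconds` is a hypothetical helper. Reading from the kernel's random source avoids the problem a time-seeded generator would have, where servers started in the same second pick the same delay.

```sh
#!/bin/sh
# Print a random delay in seconds, 0 to max-1 (default max: 600).
stagger_seconds() {
  max="${1:-600}"
  # Read 2 random bytes as an unsigned integer (0..65535); the modulo bias
  # for small windows is negligible for this purpose.
  n=$(od -An -N2 -tu2 /dev/urandom | tr -d ' \n')
  echo $(( n % max ))
}

# Usage: sleep "$(stagger_seconds 600)" && ./bench.sh
```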

This script is for testing. I do NOT recommend running this on production servers.

Data

I have published my results to Tableau’s Public Cloud.

Profit Center

This was a lot of work; give Ben some money!

Sign up for DigitalOcean using this link and I’ll get $25 service credit!

Sign up for Vultr using this link and I’ll get $30 credit!

Sign up for Amazon Lightsail and I get nothing. Oh well.

Well, that’s it.

Great stuff. I learned that Vultr recently upgraded its plans and introduced a $2.50/month plan. All things considered, with special emphasis on the referral credit, Vultr is the way to go.

Yeah, I just noticed their $2.50 plan this morning. Except for the bandwidth, it's the same as DO's $5 plan… if you can scale your application horizontally, it's great to get a core for $2.50.