What’s the best virtualization solution for your homelab? The other day a friend asked me this. I replied with two options: VMware ESXi or Proxmox VE.

What is a hypervisor?

In case you’ve been in the dark for the last decade, a hypervisor is software that allows you to run multiple virtual machines (VMs) on a single physical machine. VMs are useful for isolation (good for security and also for learning, since you can wipe out and recreate VMs at will). But virtualization is also an essential foundation for any sort of IT infrastructure, including a homelab. A virtual environment gives you the flexibility to install and run almost anything down the road, even if you can’t foresee it today.

VMware ESXi or Proxmox VE

Two popular hypervisor options are VMware ESXi and Proxmox VE. Both are virtualization platforms that allow for running many VMs or containers at high density. Both perform well (I have not done formal testing, but I can’t tell the difference in CPU performance).

VMware is the king of virtualization. Among small and medium businesses you will find VMware almost everywhere; only at very large corporations do open-source solutions based on Xen or KVM take over. Also, if you use any other enterprise-class solution, be it a SIEM, storage, or a monitoring tool, the vendor will most likely support running on VMware or integrate with it where applicable.

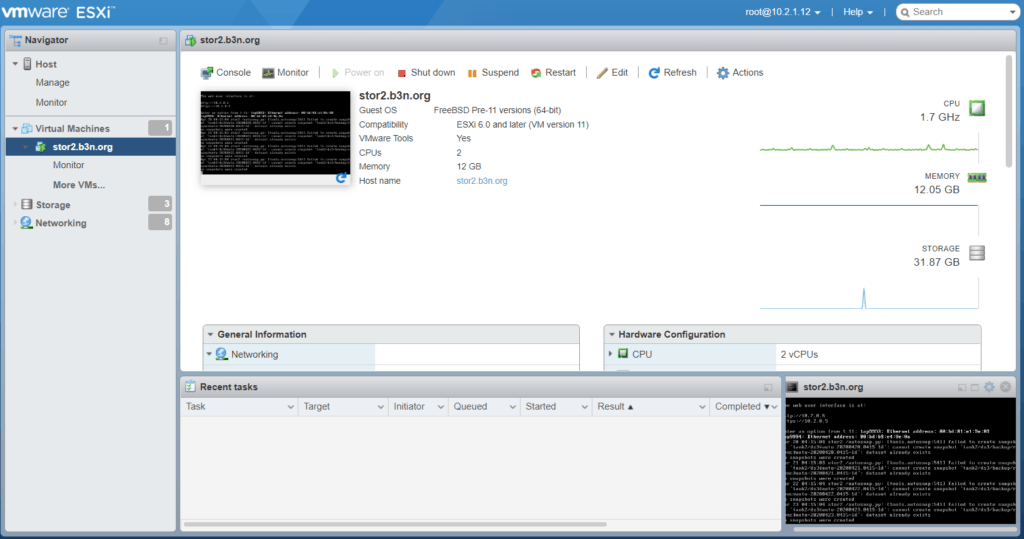

While VMware is proprietary, it is robust and fully featured. ESXi’s vSAN and distributed switching, high availability, and fault-tolerance are unparalleled.

VMware ESXi free is the hypervisor of choice for many homelabs, and VMUG Advantage ($200/year) allows you to use all the features (with up to 6 CPUs) in a non-production environment at no additional cost.

Another option I like is Proxmox VE. In fact, I like it better than VMware ESXi.

Over the last 16 months, I have migrated most of my VMware VMs over to Proxmox in my homelab. But I don’t recommend this switch to everyone. If you are learning virtualization to work in an enterprise environment, you want to stick with VMware. Why? I know of hundreds of businesses that use VMware. I know of one using Proxmox.

Why Did I Switch to Proxmox VE? Ansible Integration

So why did I switch to Proxmox? In my case, I wasn’t learning VMware. I was learning Ansible. I was trying to automate some of my tasks like deploying VMs. When I tried to set up Ansible with VMware ESXi free in my homelab, I found out the free version of ESXi doesn’t have a REST API! There was no way Ansible could communicate with it. My first thought was to get VMUG, but I doubt my lab qualifies for VMUG since I run some VMs for business purposes. Also, I didn’t want to spend $200/year just for software. So I tried Proxmox to get Ansible integration. I decided if it worked out well, I would switch over to it. 16 months later I migrated my final VM to Proxmox.
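
For comparison, here is a rough sketch of what talking to the Proxmox API from a script can look like. It assumes a Proxmox VE release that supports API tokens; the host name, user, token ID, and secret are placeholders, and the two helper function names are made up for illustration.

```shell
#!/usr/bin/env bash
# Sketch only: host, user, token ID and secret below are placeholders.

# Build the Authorization header for a Proxmox VE API token.
# Format: PVEAPIToken=USER@REALM!TOKENID=SECRET
pve_auth_header() {
    local user="$1" token_id="$2" secret="$3"
    printf 'Authorization: PVEAPIToken=%s!%s=%s' "$user" "$token_id" "$secret"
}

# Query an API path (for example /version or /nodes) and print the JSON reply.
# Not called here: it needs curl and a reachable Proxmox host. A default
# install uses a self-signed certificate, so curl may also need --cacert.
pve_get() {
    local host="$1" path="$2" header="$3"
    curl --silent --fail --header "$header" "https://${host}:8006/api2/json${path}"
}

# Usage (hypothetical values):
#   header=$(pve_auth_header 'ansible@pve' 'lab' '00000000-0000-0000-0000-000000000000')
#   pve_get pve1.example.com /version "$header"
```

Ansible’s Proxmox modules talk to this same API, so if a request like this works, the Ansible side should have what it needs.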

Migrating from VMware to Proxmox

Proxmox has a guide to migrate from VMware. I couldn’t quite get that to work. However, what worked for me was mounting the FreeNAS NFS share for VMware from Proxmox. In Proxmox I created a new VM, but then I would delete the virtual disk file and use this command to import the .vmdk file from VMware:

cd /mnt/nfs/vmware/minecraft.b3n.org

qm importdisk 123 minecraft.b3n.org.vmdk pool1 --format vmdk

# ^ 123 is the Proxmox VM ID

My Thoughts on Proxmox

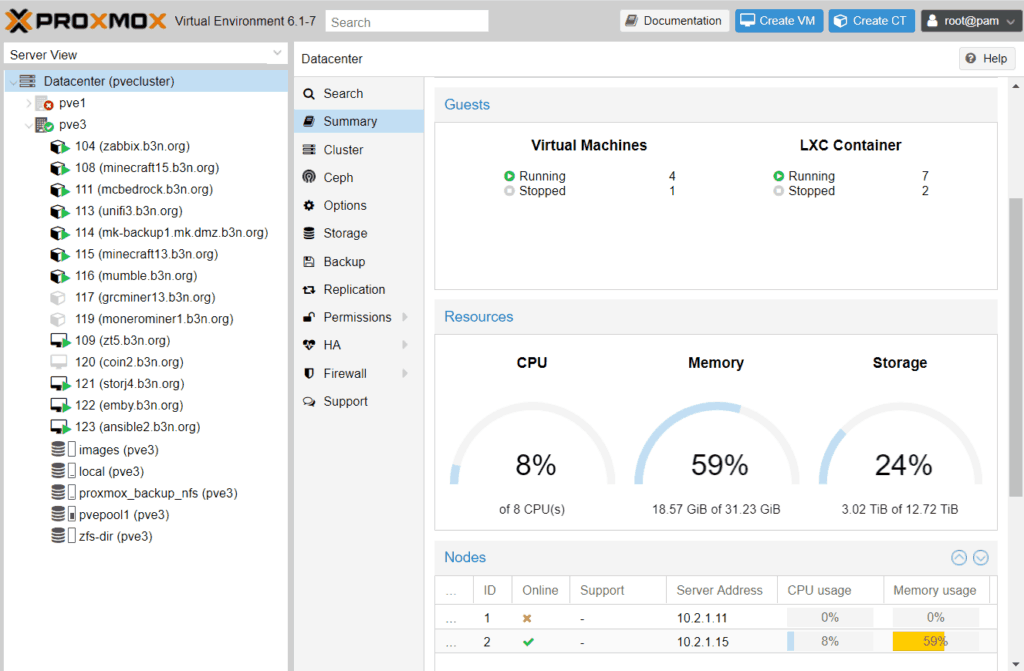

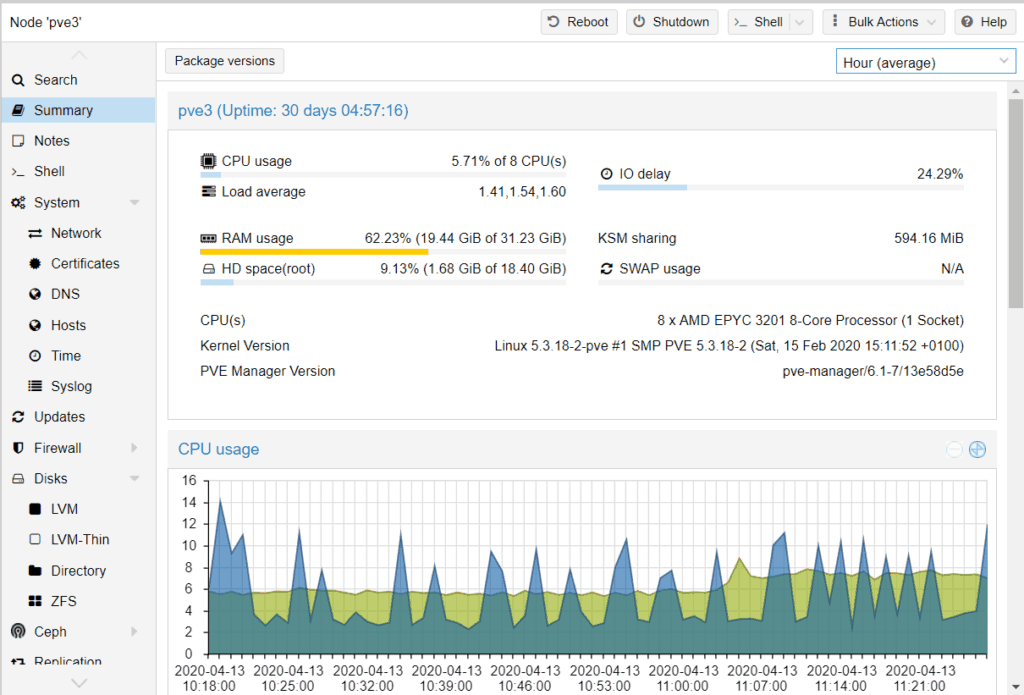

Proxmox is excellent. You get clustering, live-migration, and high availability, all for free. You can use ZFS on a single box without the complexity of running FreeNAS on VMware All-in-One. If you have two Proxmox servers, they can use ZFS to mirror each other. With three or more Proxmox servers (technically you only need two with a Raspberry Pi to maintain the quorum), Proxmox can configure a Ceph cluster for distributed, scalable, and highly available storage. From one small server to a cluster, Proxmox can handle a variety of scenarios.
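
The quorum remark is easier to see with a little arithmetic. A cluster stays quorate only while more than half of the expected votes are present. This sketch assumes one vote per node (and one for the Raspberry Pi); the function names are made up for illustration and are not Proxmox commands.

```shell
# Votes needed for quorum: a strict majority of the total votes.
quorum_needed() {
    local total_votes="$1"
    echo $(( total_votes / 2 + 1 ))
}

# Succeeds (exit status 0) if the votes still online reach quorum.
is_quorate() {
    local total_votes="$1" online_votes="$2"
    [ "$online_votes" -ge "$(quorum_needed "$total_votes")" ]
}

# Two nodes, one down: 1 of 2 votes, no quorum.
is_quorate 2 1 || echo "2 nodes, 1 down: no quorum"
# Two nodes plus a Raspberry Pi as a third vote, one node down: 2 of 3 votes.
is_quorate 3 2 && echo "2 nodes + witness, 1 node down: quorate"
```

With only two votes, losing either node drops the cluster below a majority, which is why the third vote matters even if it comes from a device that runs no VMs.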

Proxmox builds on open-source technology: KVM for VMs, LXC for containers, ZFS or Ceph for storage, and bridged networking or Open vSwitch for networking. You could run the open-source components in an ad hoc manner yourself (before I tried Proxmox I had experimented with an Ubuntu LXD server), but Proxmox provides a nice single pane of glass. Besides the GUI, it adds features like live-migration, integrated backups, and alerts on failures or warnings.

My favorite feature in Proxmox that VMware doesn’t have is containers! These aren’t Docker containers, but full system containers. Containers allow you to get a higher density (in terms of memory) than VMs because containers share the host kernel. Ubuntu reports a 14.5 times higher density using containers instead of VMs. I love the way containers work. You can download whatever templates you want (they include all popular Linux distributions) and then instantly deploy containers from those templates.

Getting performance information on the cluster, individual nodes, VMs, or containers is simple. At a glance, you can see where your bottleneck is and see which VMs and containers are consuming what resources.

Why I would choose VMware ESXi or Proxmox VE

Reasons to use VMware ESXi

- Distributed virtual switching

- Fault Tolerance (the nearest you can get to zero-downtime on legacy systems).

- Enterprise-Class

- A good solution when uptime is important or 24/7 support or handholding is needed.

- Industry-standard

- Integration with other Enterprise-class solutions

- More Robust

- VT-d passthrough is easier to set up (Proxmox can’t set up a passthrough device in the GUI).

- Live network changes (Proxmox requires a reboot for network changes).

Reasons to use Proxmox VE

- All features are free (Ansible integration, High Availability, distributed storage, etc.)

- I’m already familiar with Ubuntu and Debian so Proxmox is easy to manage.

- Can install common open-source tools and packages easily since it’s based on Debian

- Better performance insights and history using tools like Zabbix or Netdata than I can get out of ESXi free.

- Integrated ZFS (useful for a single box using local storage without a RAID card)

- Better suited when licensing costs are important or open-source solutions are required.

- Built on and uses open source technologies

- LXC Containers–instant deploy allows for higher density than VMs.

- Updates are much easier than VMware since Proxmox is just apt-get update; apt-get dist-upgrade (thanks for the correction, Ivan).

- Each Proxmox node is a complete management node so you have full control of your cluster. You aren’t concerned with anything like vCenter that can go down.

- Upgrades are a lot easier. I’ve seen VMware experts struggle at VMware upgrades. Proxmox provides straightforward upgrade procedures.

- Simple to automate SSL certs to the management interface with something like Let’s Encrypt

VMware ESXi and Proxmox VE feature comparison Chart

| Hypervisor | VMware ESXi Free | VMware ESXi VMUG | Proxmox VE CE | Proxmox VE Enterprise |
| --- | --- | --- | --- | --- |
| Cost | Free | $200/year | Free | $85/year/CPU |
| Max CPUs | ∞ | 6 | ∞ | ∞ |
| Clustering | No | Yes | Yes | Yes |
| HA-Failover | No | Yes | Yes | Yes |
| HA-Storage | No | Yes, vSAN | Yes, Ceph | Yes, Ceph |
| Fault Tolerance | No | Yes | No | No |
| VMs | Yes | Yes | Yes | Yes |
| Containers | No | Yes | Yes | Yes |
| Live-Migration | No | Yes | VMs only | VMs only |
| VM Backups | 3rd Party: Veeam | 3rd Party: Veeam | Yes | Yes |
| GUI for Virtual Networking | Yes | Yes | No | No |
| Distributed vSwitch | Yes | Yes | No | No |
| Management | No | Yes | Yes | Yes |
| Storage Compatible | iSCSI, NFS, FC, FCoE, local | iSCSI, NFS, vSAN, FC, FCoE, SAN | iSCSI, NFS, ZFS, Ceph, CIFS, GlusterFS | iSCSI, NFS, ZFS, Ceph, CIFS, GlusterFS |
| Enterprise SAN integration | N/A | Yes | No | No |
| Minimum Nodes for HA | N/A | 2 (3 preferred) | 3 (or 2 + witness device) | 3 (or 2 + witness device) |
| Per VM Firewall | No | No | Yes | Yes |
| License allows renting out VMs/Containers | No | VSPP Required | Yes | Yes |
| License allows business use | Yes | No | Yes | Yes |
| Code License | Proprietary | Proprietary | AGPLv3 | AGPLv3 |
| API (Integration with Ansible, etc.) | No | Yes | Yes | Yes |
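
To put the Cost row in perspective, here is a small back-of-the-envelope calculation. It only uses the two prices listed in the table, which may well have changed since, and the function name is made up for illustration.

```shell
# Prices taken from the table above (USD per year); they may have changed.
VMUG_PER_YEAR=200
PVE_ENTERPRISE_PER_CPU=85

# Yearly cost of Proxmox VE Enterprise for a given number of CPU sockets.
pve_enterprise_cost() {
    local sockets="$1"
    echo $(( sockets * PVE_ENTERPRISE_PER_CPU ))
}

for sockets in 1 2 4; do
    echo "${sockets} CPU(s): Proxmox Enterprise \$$(pve_enterprise_cost "$sockets")/year, VMUG \$${VMUG_PER_YEAR}/year"
done
```

At these prices the paid Proxmox tier costs less than VMUG for one or two sockets and more from three sockets up, while the community edition stays free either way.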

Alternatives to ESXi and Proxmox VE

If you enjoy doing everything off the CLI, you can just use KVM for VMs and LXD for containers. I prefer to have some sort of management system. I find it helpful because it automatically does a lot of things for me, like running backups, alerting on system or drive failures, and monitoring performance. oVirt may be a good option, but I passed it by because the list of supported operating systems is outdated, so I don’t know how well maintained the project is. Bhyve is another option based on FreeBSD; however, last I tested, it wasn’t entirely stable and resulted in a few crashes under load. I tried to get Hyper-V up and running once, but it wanted to be in an Active Directory environment, and I didn’t want to hack it to work standalone. XenServer is another option, but it has been discontinued. I do think the best options for homelab use are VMware and Proxmox. Of course, there’s also Kubernetes, but that’s for another post.

The Best Hypervisor for Your Goals

If your goal is to learn hypervisors or enterprise solutions that integrate with VMware for your career, the best hypervisor to learn is VMware ESXi. You cannot match the robustness and ubiquity of VMware. Otherwise, for homelab use, I prefer Proxmox. For a zero dollar budget, it offers a lot more freedom and features in the community edition than the ESXi free version.

Cost and licensing restrictions aside, from a technical and feature perspective, I would rank the solutions as follows: ESXi VMUG > Proxmox VE Enterprise > Proxmox VE CE > ESXi Free.

One area where Proxmox excels is for those running a single box without hardware RAID. VMware ESXi free provides no software storage solution for a single hypervisor. Proxmox has built-in ZFS, making for a much simpler design than the VMware and FreeNAS All-in-one.

My current homelab setup: I have Proxmox VE installed on two of my Supermicro servers. All of the VMs and containers are running there. And both Proxmox servers are backing up to my VMware+FreeNAS all-in-one box.

Which hypervisor do you use in your homelab, and why?

Good comparison. I run ESXi at work on multiple high-density enterprise class bare metals. For my home lab I considered it (via free ESXi). I tried Proxmox as well. While ESXi was definitely more intuitive for me due to familiarity, I hate to say I was sorely disappointed at Proxmox in testing. I loved the root ZFS and the Debian base. It just seemed like it took too many obtuse steps to spin a VM. I was probably doing it wrong. I hope.

I ended up settling on Ubuntu’s Server for a hypervisor with KVM/virt-manager. My requirements required trunking some networking ports plus some other oddities. I found it easier (!!) to configure this in Ubuntu CLI due to (at the time) limited Proxmox community forums versus Ubuntu forums and my own familiarity with overall Linux CLI operations. I still use ZFS for my primary storage. The only thing I really lost was 1) ZFS root partition (overcome by backing up my major configs offsite anyway) and 2) server monitoring tools. For my application I actually simplified things down to the point I only run one outside facing VM. Realistically I could use OMV and be done, but that requires a weekend to migrate (plus it is basically maintained by one person. What happens to the project if he is somehow not able to focus on it?) Thus, the monitoring is not as critical to me. htop, ps -ax, et al, are sufficient for my use case.

If I had to do it over, er…, again in the near future – I’m not sure which way I’d go. FreeNAS, Ubuntu, and Proxmox all look near equal, with Ubuntu/Proxmox slightly ahead of FreeNAS (for my use case and VM support). I’d basically be back to where I was when I first deployed my hypervisor. ;)

Of course, you of all people would find /any/ GUI too hard to use.

LoL

Hi, I think some points in the article need some clarification:

– “Updates are much easier than VMware since Proxmox is just apt-get update; apt-get upgrade” – The developers do not recommend using apt-get upgrade. The recommended option is always to use apt-get dist-upgrade.

– “VT-d passthrough is easier to set up (Proxmox can’t set up a passthrough device in the GUI)” – In version 6.x you can now assign PCIe devices, but you have to assign the VFIO driver manually anyway.

– “Live network changes (Proxmox requires a reboot for network changes).” – In version 6.x you can now apply network changes without restart.

– “technically you only need two with a Raspberry Pi to maintain the quorum” – An alternative, not recommended and hacky way to achieve a two-node cluster is to give one of the nodes more votes than the other, so at least one node of the cluster will always have quorum and be operational.

For completeness maybe you can check XCP-ng which is XEN based, open source and seems to be gathering momentum and provides different mix of pros/cons compared to Proxmox.

Hi, Ivan. Thanks for the clarifications and for mentioning XCP-ng, I had not heard of that one but it looks to be a decent alternative. On the reboot to apply networking I rebooted to apply network changes on 6.1-7 while setting up bonding and vlans. I’m not using Openvswitch so maybe it only applies to that? Of course, it could be I just rebooted out of habit.

Hi Benjamin,

on proxmox 6.x you need to install the package “ifupdown2”. Then you can reload via the “Apply Configuration” button on the Network page. Hope i could help!

Leon

Thanks Leon, I’ll test it out. You would think that would be installed by default! Ben

You’ve got an apples and oranges thing going on here…

VMware, like Hyper-V and XenServer, are commercial products. They are developed by people that are paid for their work and are developing to ensure interop with other products in their collection of “stuff.” Anything that’s commercial that has a “community edition” (or free version) is going to be relatively on par with each other in terms of features. In fact, the XenServer product recently had some features moved from the free to the paid version specifically because other vendors were not offering them in their respective free versions.

Proxmox is an open-source project. This doesn’t make the developers any less capable, it just shifts where the priorities are and how quickly certain things get worked on. And that includes addressing bugs and security gaps. It also can impact whether any features get fully built before they are abandoned, too.

The single biggest compelling reason to use Proxmox over VMware would be the ability to run containers directly in the system as opposed to having to build a Docker host first. But, this is also a reason to stay away from Proxmox. Being able to run a container directly means there’s more “bloat” in the base code than is necessary for a true virtualization environment.

I have a VMware server and a XenServer server. They both do exactly what I need, and they both suck. So, there you have it. :)

P.S. – Please keep your technology posts limited to technology.

Thanks for your thoughts, Mark! Thorough as always. Hopefully, this will help someone decide between the apple and orange.

Hi, I think you are having some misunderstanding for ProxMox. ProxMox is open source but it is supported and developed by a company with roadmap, support policy and so on https://proxmox.com/en/about

Nope. No misunderstanding.

Proxmox has a roadmap, but it will not be as far forward-looking as the roadmap for VMware or XenServer because Proxmox has only one other product (focused on email). They are developing software for components of the tech ecosphere that are relatively mature overall, so being innovative on either front is going to be difficult. What they HAVE done that differentiates them is provide a platform that can directly run containerized software while also supporting more traditional guest workloads. Then again, a Linux server with KVM and Docker installed could do this…

As far as being backed by a company and having support, this is common for Open Source products. Since so much of what they are providing is based on OTHER Open Source software, it’s difficult to draw distinct lines between what they are providing for free and what they are charging for as a product. So, they provide an option to obtain a “Support Contract” to leverage the knowledge they have about the install and setup. This is very much like putting an independent contractor on retainer.

What often happens to Open Source projects that have success is that they ultimately end up with a “Community Edition” of the product that the backing company puts effort into for only the base components and/or it ends up being a fork of what becomes a commercial product.