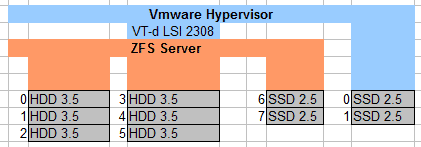

Here’s my latest VMware + ZFS VSAN all-in-one server build, based on a Supermicro platform. It borrows from the napp-it all-in-one concept, where you pass the SAS/SATA controller to the ZFS VM using VT-d and then share a ZFS dataset back to VMware using NFS or iSCSI. For this particular server I’m using FreeNAS as the VSAN.

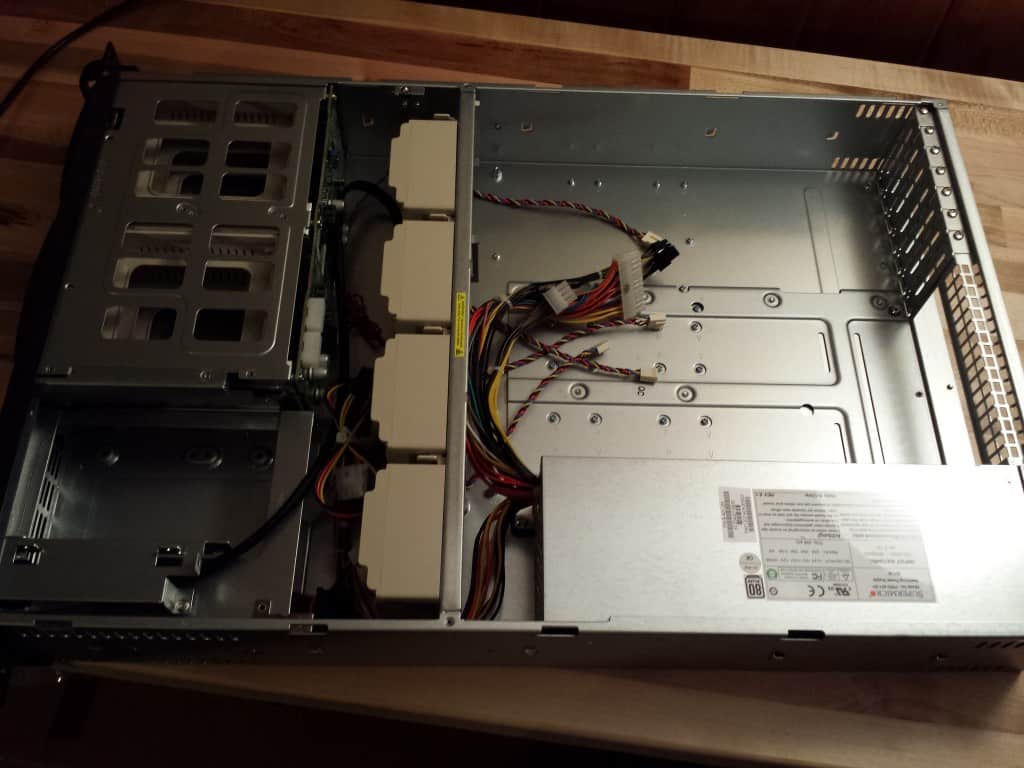

Chassis – 2U Supermicro 6 Bay Hotswap

For the case I went with the Supermicro CSE-822T-400LPB. It comes with rails, 6 hotswap bays, a 400W PSU (80 PLUS efficiency rated), and a 5.25″ bay which works great for installing a mobile rack.

The 3400 RPM Nidec UltraFlo fans are too loud for my house (although quiet for a rack-mount server), and there is no way to keep the speed low in Supermicro’s BIOS, so I replaced them with low-noise Antec 80mm fans, which are a couple of dollars each.

Unfortunately these fans are only 3-wire (they don’t have the RPM sensor), so I used freeipmi (apt-get install freeipmi) to disable monitoring the fan speeds:

Motherboard – Supermicro X10SL7-F with LSI-2308

Supermicro’s X10SL7-F has a built-in LSI 2308 controller with 8 SAS/SATA ports. Splitting these across the two sets of hotswap bays works perfectly. I used the instructions on the FreeNAS forum post to flash the controller into IT mode. Under VMware the LSI 2308 is passed to the ZFS server VM and is connected to all 6 x 3.5″ hotswap bays plus the first two bays of the mobile rack. This allows for 6 drives in a RAID-Z2 configuration, plus one SSD for ZIL and another for L2ARC. The final two 2.5″ bays in the mobile rack are connected to the two 6Gbps SATA ports on the motherboard and used by VMware. I usually enable VMware’s host cache on those SSDs.
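As a rough sanity check on the RAID-Z2 layout above, here is a minimal Python sketch of the capacity arithmetic. The function names are made up for illustration, the 2TB drive size comes from the hard drive notes further down, and the calculation deliberately ignores ZFS metadata, padding, and free-space headroom, so treat the result as an upper bound rather than what `zfs list` would report.

```python
"""Back-of-the-envelope usable capacity for a single RAID-Z vdev.

Assumption: ignores ZFS metadata, padding, and free-space headroom,
so real usable space will be somewhat lower.
"""


def raidz_usable_tb(drives: int, drive_tb: float, parity: int = 2) -> float:
    """Return approximate usable capacity in decimal TB for one RAID-Z vdev."""
    if parity not in (1, 2, 3):
        raise ValueError("RAID-Z parity level must be 1, 2, or 3")
    if drives <= parity:
        raise ValueError("need more drives than parity devices")
    # Each parity level costs roughly one drive's worth of space.
    return (drives - parity) * drive_tb


def tb_to_tib(tb: float) -> float:
    """Convert decimal terabytes (10**12 bytes) to tebibytes (2**40 bytes)."""
    return tb * 10**12 / 2**40


if __name__ == "__main__":
    # Six 2TB drives in RAID-Z2, as in this build.
    usable = raidz_usable_tb(drives=6, drive_tb=2.0, parity=2)
    print(f"~{usable:.1f} TB usable (~{tb_to_tib(usable):.2f} TiB) before ZFS overhead")
```

For this build that works out to four data drives' worth of space, roughly 8 TB raw, with any two drives able to fail without losing the pool.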

Memory – Crucial 8GB DDR3L ECC

Crucial 8GB DDR3L (low-voltage) ECC server memory. I got the 16GB kit.

CPU – Xeon E3-1231v3 Quad Core

I think the Xeon E3-1231v3 is about the best bang for the dollar for an E3 class CPU right now. You get four 3.4GHz cores (with 3.8GHz turbo), VT-d, ECC support, and hyper-threading. It’s about $3 more than the Xeon E3-1230v3 but 100MHz faster. I’m actually using the Xeon E3-1240v3 (which is identical to the 1231v3) because I saw a good price on a used one a while ago.

Mobile Rack 2.5″ SSD bay

ICY DOCK ToughArmor 4 x 2.5″ Mobile Rack. The hotswap bays seem pretty high quality metal.

Hard Drives: See my Hard Drives for ZFS post. I’m running 2TB Seagate 7200 RPM drives. They may not be the most reliable drives, but they’re cheap and I’m confident with RAID-Z2 (I also always have two off-site backups and one on-site backup). For SSDs I use Intel’s DC S3700 (here’s some performance testing I did on a different system; hopefully I’ll get a chance to retest on this build).

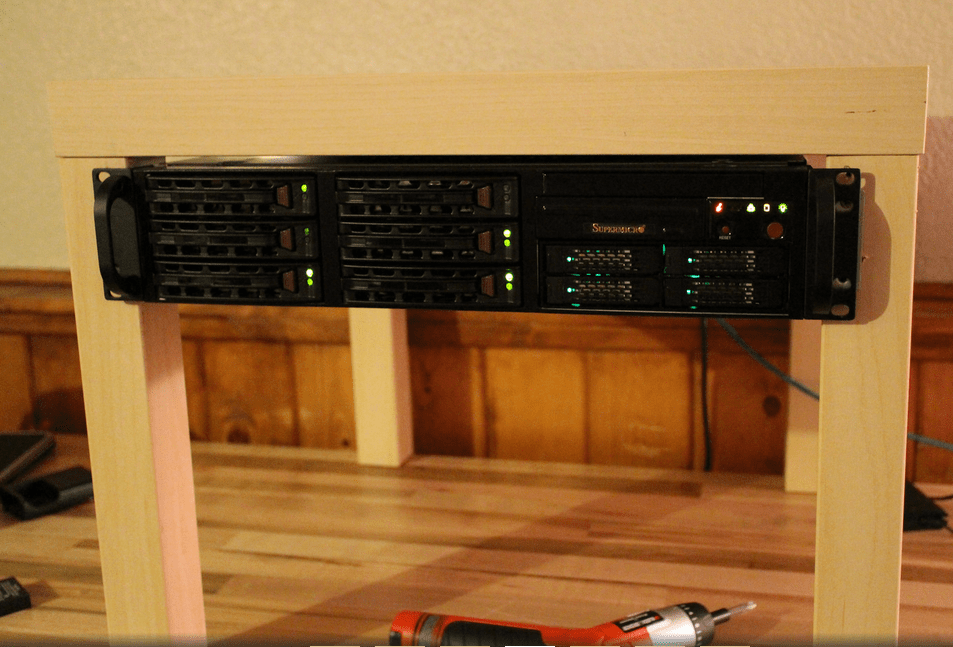

Racking with Rails on a Lackrack

A while ago I discovered the Lackrack (these are $7 at Ikea)… I figured since I have rails I may as well install them. The legs were slightly too close together, so I used a handsaw to cut a small notch for the rails and mounted the rails using particle board screws. One thing to note is that the legs are hollow just below where the top screw of the rail mount goes, so only that top screw is going to be able to bear any load.

Here’s the final product:

What VMs run on the server?

- ZFS – provides CIFS and NFS storage for VMware and Windows, Linux, and FreeBSD clients. Basically all our movies, pictures, videos, music, and anything of importance goes on here.

- Backup server – backs up some of my offsite cloud servers.

- Kolab – Mail / Groupware server (Exchange alternative)

- Minecraft Server

- Owncloud (Dropbox replacement)

- VPN Server

- Squid Proxy Cache (I have limited bandwidth where I’m at so this helps a lot).

- DLNA server (so Android clients can watch movies).

Hi Ben, just wondering how much the whole setup ended up costing you?

Hi, Mango. Well, the only thing I bought new was the case and the mobile rack for the SSDs, I already had everything else.

My good sir. Are you running ESXi 5.5? If so, does it detect the Intel storage controller automatically or did you have to make a custom installation medium?

How would you rate the benefit of using ESXi host cache vs ZIL SLOG/L2ARC?

Yes, ESXi 5.5. It detected the Intel controller automatically, I didn’t have to install any drivers. I am not running the Intel controller in RAID mode or anything like that, just AHCI.

Here’s my ranking in terms of importance, obviously different workloads will benefit differently but in general this is how I rank them:

1. ZIL SLOG device. This really speeds up writes. If I had one SSD it would be for ZIL.

2. Max out memory (32GB on Xeon E3). You can use this for more ARC or for more VMs. Try to give the ZFS VM enough RAM to keep the ARC cache hit ratio above 90%, and give the rest to VMware for VMs.

3. A second SSD: use it for L2ARC if the ARC cache hit ratio is below 90%, otherwise as a second ZIL device, striped.

4. Get an SSD for VMware to balloon onto if I’m over-provisioning VM memory.

Obviously you’d have to judge based on your workload. I kind of based my decisions on the controllers… I only have 8 ports on the LSI 2308 and 6 are taken by spinners, which leaves only two ports for SSDs that I can pass directly to the ZFS VM, so I just gave the other two SSDs to VMware for host cache.
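The 90% ARC hit ratio rule of thumb in the ranking above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names are hypothetical, the sample counter values are invented, and on FreeNAS the real hit and miss counters would have to be read separately (for example from the `kstat.zfs.misc.arcstats.hits` and `kstat.zfs.misc.arcstats.misses` sysctls), which this sketch does not do.

```python
def arc_hit_ratio(hits: int, misses: int) -> float:
    """Return the ARC hit ratio as a fraction between 0 and 1."""
    total = hits + misses
    if total == 0:
        raise ValueError("no ARC accesses recorded yet")
    return hits / total


def second_ssd_role(hits: int, misses: int, target: float = 0.90) -> str:
    """Suggest a role for a second SSD, following the rule of thumb above.

    Below the target hit ratio the SSD goes to L2ARC; otherwise it
    becomes a second (striped) SLOG device.
    """
    return "L2ARC" if arc_hit_ratio(hits, misses) < target else "SLOG"


if __name__ == "__main__":
    # Invented sample counters, not measurements from this server.
    hits, misses = 8_500_000, 1_500_000
    ratio = arc_hit_ratio(hits, misses)
    print(f"ARC hit ratio: {ratio:.1%} -> second SSD as {second_ssd_role(hits, misses)}")
```

The counters are cumulative since boot, so a reading taken shortly after a reboot, before the cache has warmed up, will understate the ratio.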

Single point of failure…

I am dipping my toes into the All-In-One world using a system based on the same motherboard you chose here, and, like you, I am passing the eight X10SL7 LSI ports to FreeNAS. But aren’t you concerned about placing your FreeNAS image on a single nonredundant disk? I realize it’s kind of overkill, having to install a RAID controller for the datastore. But my understanding is that ESXi doesn’t recognize RAID arrays based on Intel’s built-in motherboard RAID controller, so that’s the only solution I see. Am I missing something?

(And BTW, thanks a zillion for your blog!)

Hi, Keith. Thank you. I actually mirror the boot drives that FreeNAS is on.

Two SSDs are on the motherboard SATA ports and used as ESXi datastores, so when I create the FreeNAS VM I create a vmdk on each SSD. During installation you can set those up as a ZFS boot mirror.

Hi Ben,

Great blog overall. I have nearly the same setup here and it runs perfectly. I am just wondering which CPU cooler you use in this 2U case: the stock one, or maybe a passive one?

A very detailed question, but a short line would be great for me.

Thanks, Marc

Hi, Marc. For the CPU I just use the stock CPU fan that came with it… this is not really ideal, because the stock cooler blows the air up and what you really want is to blow the air towards the back. But it works. Since I wrote this post I have switched from Antec to Noctua fans, which are quieter. I got four of these NF-A8 PWM fans (http://amzn.to/2fNFEWU), but one problem I have is that when it gets hot and the server is under load, they tend to hunt back and forth between high and low RPM, which is a little annoying. If I were to do it over again I’d get two PWM fans and two FLX (fixed speed) fans, such as the Noctua NF-A8 FLX: http://amzn.to/2fNEXNA

Ben,

Thanks very much for posting these guides. I am trying to follow along, but am new to the world of backplanes and SAS. If I follow the recommendations for case and mobo, what cables do I need to connect the 6 SAS2 ports on the backplane of the 822T case to 6 of the 8 ports controlled by the SAS controller on the X10SL7? They looked like regular SATA cables in the photos, but the manuals for the chassis and motherboard called them SAS2 ports, so I was confused.

What do you recommend for spinners to populate the hotswap bays? Did you actually use SAS drives, or SATA drives? I’ve built NASes before with WD Red, but unsure of SAS. Any recommendations are appreciated. Intended use is in the home, as the VSAN behind a backup target and some light duty servers (minecraft, home lab, home automation controller, etc).

Thanks!

Hi, Garin. I used normal SATA drives and normal SATA cables (I may have had to get them slightly longer than usual, I don’t remember; it’s possible I used the ones that came with the motherboard)… the LSI 2308 HBA actually has 8 individual SATA connectors, like you saw in the manual, so you don’t need a SAS breakout cable. Likewise, the backplane has 6 individual SATA connections, one for each hard drive, instead of a mini-SAS connector.

Here’s my hard drive guide: https://b3n.org/best-hard-drives-for-zfs-server/ basically I’d suggest WD Reds or HGST Deskstar. Doesn’t sound like you need high performance (heavy random I/O) but if you did I’d go with the HGST Deskstars because of the higher RPM. If you don’t have great cooling I’d stick with the Reds. Personally I’m running 6TB whitelabel drives (which are likely WD Reds minus the brand label and warranty).

It sounds like your environment is very similar to mine. I run home-assistant, a Minecraft server, unifi, emby, etc.

Thanks so much for the reply Ben! I am off to order hardware. And thanks for the tip on white label drives…never knew such existed!

Ben, Thanks for the amazing guide. I am going to build my first FreeNAS and if I am to get the same Motherboard, CPU and RAM minus the HBA card, would it support 8 Samsung 256GB SSD Sata 3.0Gb/S? just going to build FreeNAS as the vSAN and use it as NFS for VM stuff.

Thanks,

Le

Ben,

I like where your head’s at. I ended up buying a 25U rack for the basement, but your solution is a much better space saver.

Supermicro seems to have this market space on lock for hardware, and I haven’t seen a better open source / free space product than FreeNAS.

https://www.candiamantics.com/projects/freenas-storage-solution/

I’ve been going over your SLOG post, thanks.

I have read somewhere that it’s not recommended to virtualize FreeNAS. Is this because of the risk of losing data, or is it mostly due to performance?

FreeNAS just wasn’t originally designed for virtualization, but with FreeNAS 10 and 11 that’s not really an issue. Also, there are wrong ways to do it where you can cause data corruption. Nowadays, for small businesses and homelabs, a virtual FreeNAS unit is fine, although I’d strongly suggest not doing it in a mission critical environment… best to keep it simple in those cases (of course, I’d be running TrueNAS in that case).