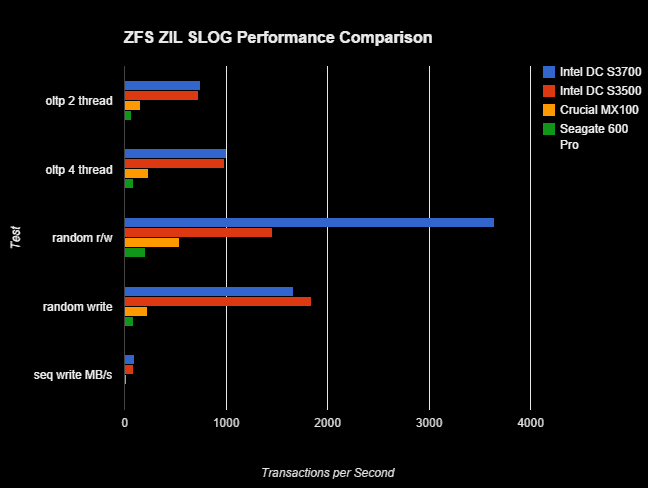

I ran some performance tests comparing the Intel DC S3700, Intel DC S3500, Seagate 600 Pro, and Crucial MX100 when used as a ZFS ZIL/SLOG device. All of these drives have a capacitor-backed write cache, so they can lose power in the middle of a write without losing data.

Here are the results:

The Intel DC S3700 takes the lead with the Intel DC S3500 being a great second performer. I am surprised at how much better the Intel SSDs performed than the Crucial and Seagate, considering that Intel’s specifications look weaker on paper. Intel lists lower sequential throughput and, in most cases, lower random IOPS, yet both Intel drives outperform the other two SSDs.

| SSD | Seagate 600 Pro | Crucial MX 100 | Intel DC S3500 | Intel DC S3700 |
|---|---|---|---|---|
| Size (GB) | 240 | 256 | 80 | 100 |
| Model | ST240FP0021 | CT256MX100SSD1 | SSDSC2BB080G4 | SSDSC2BA100G3T |
| Sequential (MB/s) | 500 | 550 | Read 340 / Write 100 | Read 500 / Write 200 |
| Random read (IOPS) | 85,000 | 85,000 | 70,000 | 75,000 |
| Random write (IOPS) | 11,000 | 70,000 | 7,000 | 19,000 |
| Endurance (TB written) | 134 | 72 | 45 | 1874 |
| Warranty | 5 years | 3 years | 5 years | 5 years |
| **ZFS ZIL/SLOG results** | | | | |
| OLTP, 2 threads | 66 | 160 | 732 | 746 |
| OLTP, 4 threads | 89 | 234 | 989 | 1008 |
| Random read/write | 208 | 543 | 1454 | 3641 |
| Random write | 87 | 226 | 1844 | 1661 |
| Sequential write (MB/s) | 5 | 14 | 93 | 99 |

Drive Costs

- Crucial MX 100 – 256GB – $113

- Seagate 600 Pro – 240GB – $190

- Intel DC S3500 – 80GB – $110

- Intel DC S3700 – 100GB – $208

Conclusion

- For most workloads use the Intel DC S3500.

- For heavy workloads use the Intel DC S3700.

- The best performance for the dollar is the Intel DC S3500.
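To put a number on "performance for the dollar," the Python sketch below divides each drive's OLTP 4-thread result by its price. Both figures are copied from the tables above. Using the OLTP 4-thread row as the yardstick is an assumption. To use a different row of the results table, change `metric` and the data.

```python
# Prices (USD) and OLTP 4-thread results copied from the tables above.
# Using OLTP 4-thread as the yardstick is an assumption for illustration.
DRIVES = {
    "Seagate 600 Pro": {"price": 190, "oltp_4_thread": 89},
    "Crucial MX 100": {"price": 113, "oltp_4_thread": 234},
    "Intel DC S3500": {"price": 110, "oltp_4_thread": 989},
    "Intel DC S3700": {"price": 208, "oltp_4_thread": 1008},
}


def score_per_dollar(drives, metric="oltp_4_thread"):
    """Return (drive, score per dollar) pairs, best value first."""
    ratios = {name: d[metric] / d["price"] for name, d in drives.items()}
    return sorted(ratios.items(), key=lambda kv: kv[1], reverse=True)


for name, ratio in score_per_dollar(DRIVES):
    print(f"{name:16s} {ratio:6.2f} per dollar")
```

With these figures the DC S3500 comes out at roughly 9.0 per dollar, nearly twice the DC S3700 (about 4.8). The Crucial (about 2.1) and the Seagate (about 0.5) are far behind.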

In my environment the best device for a ZFS ZIL is either the Intel DC S3500 or the Intel DC S3700. The S3700 is designed to hold up to very heavy usage: you could overwrite the entire 100GB drive 10 times every day for 5 years! With the DC S3500 you could write 25GB every day for 5 years, which is probably enough for most environments.
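The endurance figures above come from simple arithmetic. The rated terabytes written (TBW) from the table are spread over the 5-year warranty. Here is a small Python sketch of that calculation, assuming decimal units (1 TB = 1000 GB) and 365-day years:

```python
def gb_per_day(endurance_tb: float, years: float = 5) -> float:
    """Average GB/day that would use up the rated endurance in `years`."""
    days = years * 365  # ignores leap days
    return endurance_tb * 1000 / days  # decimal units: 1 TB = 1000 GB


def drive_writes_per_day(endurance_tb: float, capacity_gb: float, years: float = 5) -> float:
    """How many times per day the whole drive could be overwritten."""
    return gb_per_day(endurance_tb, years) / capacity_gb


print(f"DC S3700: {drive_writes_per_day(1874, 100):.1f} full drive writes per day")
print(f"DC S3500: {gb_per_day(45):.1f} GB per day")
```

For the DC S3700 this gives roughly 10 full drive writes per day. For the DC S3500 it gives just under 25GB per day, matching the figures above.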

I should note that both the Crucial and Seagate improved performance over not having a SLOG at all.

Unanswered Questions

I would like to know why the Seagate 600 Pro and Crucial MX100 performed so badly. My suspicion is that it comes down to the way ESXi over NFS forces a cache sync on every write. The Seagate and Crucial may be obeying the sync command, while the Intel drives may be ignoring it because they know they can rely on their power loss protection. I’m not entirely sure this is the difference, but it’s my best guess.

Testing Environment

This is based on my Supermicro 2U ZFS server build with a Xeon E3-1240v3. The ZFS server is FreeNAS 9.2.1.7 running under VMware ESXi 5.5. The HBA is the LSI 2308 built into the Supermicro X10SL7-F, flashed to IT mode and passed through to FreeNAS using VT-d. The FreeNAS VM is given 8GB of memory.

The zpool is three 7200 RPM Seagate 2TB drives in RAID-Z, and in all tests an Intel DC S3700 is used as the L2ARC. Compression = LZ4, deduplication = off, sync = standard, encryption = off. The ZFS dataset is shared back to ESXi via NFS. On that NFS share is a guest VM running Ubuntu 14.04, which is given 1GB of memory and 2 cores. The ZIL device is swapped out between tests. I ran each test seven times, discarded the first three results, and averaged the remaining four. I discarded the first three to allow some data to get cached into ARC; I did not see any further performance improvement after repeating a test three times, so I believe that was sufficient.
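The averaging procedure described above is seven runs, with the first three discarded as warm-up and the rest averaged. It is easy to get slightly wrong when done by hand. Here is a minimal Python sketch of it, where `warm_average` is a hypothetical helper name:

```python
from statistics import mean
from typing import Sequence


def warm_average(runs: Sequence[float], warmup: int = 3) -> float:
    """Average benchmark runs after discarding the first `warmup` results.

    The early runs are dropped because they are slower while data is still
    being cached into ARC.
    """
    if len(runs) <= warmup:
        raise ValueError("need more runs than warm-up runs")
    return mean(runs[warmup:])
```

Given seven results in run order, this returns the mean of runs four through seven.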

Thoughts for Future Tests

I’d like to repeat these tests using OmniOS and Solaris sometime, but who knows if I’ll ever get to it. I imagine the results would be pretty close. Also of particular interest would be testing on the VMware ESXi 6 beta. I’d be curious to see whether anything has changed in how NFS performs there, but if I tested it I wouldn’t be able to post the results because of the NDA.

Test Commands

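```sh
# sysbench 0.4-style syntax. The test files and the OLTP table must be created
# once with "prepare" before "run" (and can be removed with "cleanup" afterwards).
sysbench --test=fileio --file-total-size=6G prepare
sysbench --test=oltp --oltp-table-size=1000000 --mysql-db=sbtest --mysql-user=root --mysql-password=test prepare

# Random read/write
sysbench --test=fileio --file-total-size=6G --file-test-mode=rndrw --max-time=300 run
# Random write
sysbench --test=fileio --file-total-size=6G --file-test-mode=rndwr --max-time=300 run
# OLTP, 2 threads
sysbench --test=oltp --oltp-table-size=1000000 --mysql-db=sbtest --mysql-user=root --mysql-password=test --num-threads=2 --max-time=60 run
# OLTP, 4 threads
sysbench --test=oltp --oltp-table-size=1000000 --mysql-db=sbtest --mysql-user=root --mysql-password=test --num-threads=4 --max-time=60 run
# Sequential write
sysbench --test=fileio --file-total-size=6G --file-test-mode=seqwr --max-time=300 run
```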

Your benchmarks are really helpful for my home lab and NAS.

You’re welcome. Glad it helped.

Hi, very interesting results. I’m not using ESXi and wonder how these benchmarks translate to non-ESXi setups. I’m also wondering about the difference between the Intel and non-Intel SSDs. I couldn’t find any confirmation that the Intel SSDs ignore the sync because they rely on the supercap. I don’t even know whether they can ignore it… that’s the OS’s jurisdiction, isn’t it?

And the Crucial MX100 does have a supercap as well.

Hi, Hhut. I did find some useful information in a smartos-discuss thread where someone tested the S3500 against the Seagate 600 Pro using SmartOS, so the slow performance of the non-Intel SSDs is not specific to VMware. See: https://www.mail-archive.com/search?l=smartos-discuss%40lists.smartos.org&q=subject%3A%22Re%3A+%5Bsmartos-discuss%5D+zfs_nocacheflush+and+Enterprise+SSDs+with+Power+Loss+Protection+%28Intel+S3500%29%22&f=1

I thought that the Intels were ignoring the sync, but I don’t think that’s the case now. As I understand it (I’m not really an expert on this), the OS normally writes data to the disk, and the disk buffers it in cache and periodically flushes it to flash. When the OS issues a sync command, though, the disk has to move that data from its cache onto stable storage before sending the confirmation back to the OS. A disk could therefore simply ignore the sync command to improve performance. If it has a capacitor-backed cache it can do so safely, because the cache is already stable in the sense that it won’t be lost if the power goes out. This is how NVRAM works in RAID controllers. However, I don’t think the Intel SSDs are ignoring the sync command; I think they’re just very fast at moving data from cache to flash.
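A quick way to see the sync-write cost described above on your own hardware is to time small writes that are each followed by a sync. This is not how the benchmarks in this post were run; those used sysbench. The Python sketch below writes 4 KiB blocks to a test file and calls `os.fsync()` after each one, so every write has to reach stable storage before the next one starts. On a ZFS dataset an fsync is serviced through the ZIL. Running the sketch on a dataset of the pool under test should therefore give a rough, single-threaded view of sync write latency with and without a given SLOG. The block size, write count, and file name are arbitrary choices.

```python
import os
import statistics
import tempfile
import time


def fsync_latency_us(path: str, count: int = 1000, block_size: int = 4096) -> dict:
    """Time `count` write+fsync pairs on `path` and summarize the latencies."""
    block = os.urandom(block_size)
    samples = []
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o600)
    try:
        for _ in range(count):
            start = time.perf_counter()
            os.write(fd, block)
            os.fsync(fd)  # do not return until the data is on stable storage
            samples.append((time.perf_counter() - start) * 1e6)
    finally:
        os.close(fd)
    total_s = sum(samples) / 1e6
    return {
        "median_us": statistics.median(samples),
        "p99_us": sorted(samples)[int(0.99 * (len(samples) - 1))],
        "ops_per_s": count / total_s if total_s > 0 else float("inf"),
    }


if __name__ == "__main__":
    # Point this at a directory on the dataset you want to test instead of /tmp.
    with tempfile.TemporaryDirectory() as d:
        print(fsync_latency_us(os.path.join(d, "probe.bin"), count=100))
```

A drive that honors every flush slowly will show a high median latency here, while a drive with fast (or power-loss-protected) flushes will not.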

Hi Benjamin

From your chart above you conclude that “The Intel DC S3700 takes the lead with the Intel DC S3500 being a great second performer”. However, the ZIL on a SLOG is write-only unless a recovery is needed, so based on your tests the Intel DC S3500 is just as good or better.

(Note: I am not talking about endurance or MTBF here, both of which strongly favor the Intel DC S3700.)

Most people seem to think a 4K QD32 write test (e.g. via CrystalDiskMark) is a key metric for evaluating SSDs for ZIL on a SLOG. You can also find a lot of sound recommendations here: http://nex7.blogspot.com/2013/04/zfs-intent-log.html

Some older benchmarks can be seen here: http://napp-it.org/doc/manuals/benchmarks_5_2013.pdf. The key points are:

– Sync write performance is only 10-20% of async without a dedicated ZIL!

– A ZIL built from a three-year-old enterprise-class SLC SSD is mostly slower than having no ZIL at all (this pool is built from fast disks, but a dedicated ZIL needs to be really fast or it’s useless).

– An Intel 320 SSD (quite often used because of its included supercap) makes a quite good ZIL: you get up to 60% of the async values (at least with a larger 320; I used a 300 GB SSD).

– Only a DRAM-based ZeusRAM can deliver values similar to async writes.

– Some SSDs, like the newest SLC ones or an Intel S3700, are very good and much cheaper.

And of course the SSD should have a supercapacitor or equivalent protection in the event of power loss.

Also, IMHO the ZIL doesn’t really care about IOPS, nor does it generally need a lot of space: 8GB to 16GB is fine (again, I’m not talking about reliability here). What a ZIL on a SLOG wants is low latency, like an Intel DC S3700 (45 μs) or an HGST s840Z SAS SSD (39 μs). Even though 8GB seems small, a ZeusRAM (23 µs) is still a fantastic SLOG choice for your ZIL. There are no worries about flash issues, since its flash only comes into play as a backup on power failure.
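The 8GB to 16GB figure can be sanity-checked with a rough rule of thumb. The sketch below rests on three assumptions for illustration, none of which comes from this thread. First, the SLOG only ever holds the sync writes that arrive during about two ZFS transaction groups. Second, a transaction group is committed every 5 seconds. Third, incoming writes are capped by the network link.

```python
def slog_size_gb(link_gbit_s: float, txg_seconds: float = 5.0, txgs: int = 2) -> float:
    """Rough SLOG space needed: link rate x txg interval x txgs in flight.

    txg_seconds=5 and txgs=2 are assumptions, not measured values.
    """
    bytes_per_s = link_gbit_s * 1e9 / 8  # line rate in bytes per second
    return bytes_per_s * txg_seconds * txgs / 1e9  # decimal GB


for link in (1, 10):
    print(f"{link:>2} GbE -> ~{slog_size_gb(link):.2f} GB")
```

Under these assumptions a 10GbE link works out to about 12.5 GB, which falls inside the 8GB to 16GB range; gigabit needs only around 1.25 GB.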

Speaking of low latency, keep in mind that SATA has more overhead than SAS. Furthermore, a direct PCIe interface is best, but such devices are rarely supported in ZFS-capable operating systems and are also quite expensive. The holy grail for the ZIL will be NVMe (or NVDIMMs), but I have yet to see support for it in any ZFS-capable operating system.

Hi, Jon.

Not exactly. Even though the SLOG is never read from under normal circumstances, the DC S3700 still performs better in the random read/write test. I believe this is because the DC S3700 has a lower write latency, and that just happened to show up in that test. So if you need performance on heavy transactional workloads, the DC S3700 will probably outperform the DC S3500 by a fair amount when used as a ZIL.

How did you determine the latency of the S3700 as 45 μs? According to http://www.intel.com/content/dam/www/public/us/en/documents/product-specifications/ssd-dc-s3700-spec.pdf, average write latency is 65 μs.

Good catch, Albert. The DC S3700 has a read latency of 45 μs; Jon must have mixed it up with the write latency.
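Simple arithmetic shows why latency matters more than IOPS for a SLOG. At queue depth 1, each sync write has to complete before the next one is issued, so device latency alone caps the number of sync writes per second. This sketch ignores network, hypervisor, and ZFS overhead, so real numbers will be lower. The latencies are the ones quoted in this thread.

```python
def qd1_iops_ceiling(latency_us: float) -> float:
    """Upper bound on sync writes/s when each write waits for the previous one."""
    return 1e6 / latency_us


for label, lat in [
    ("DC S3700 write (Intel spec)", 65),
    ("DC S3700 read (Intel spec)", 45),
    ("ZeusRAM (quoted above)", 23),
]:
    print(f"{label:28s} {lat:>3} us -> at most {qd1_iops_ceiling(lat):,.0f} ops/s")
```

Using the corrected 65 μs write latency, a single stream of sync writes to a DC S3700 tops out at roughly 15,000 per second. Using 45 μs would overstate that ceiling by about 45%.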

Not sure if my system is broken, but I’m getting nowhere near these numbers, not even with sync disabled. Makes me wonder if something is wrong with my setup.

Hi, Dave. Can you describe how your hardware is set up? Are you running the test over gigabit Ethernet, or over the VMware virtual 10GbE network? How many drives do you have, and what speed are they?

Also, if you’re running everything under VMware like me make sure you aren’t over-allocating your cores during benchmarks (keep hyperthreading in mind).

Hello Ben,

I am building a home lab with some older 2TB 5900 RPM Seagate disks that I will use as a VMware datastore, connected through an IBM M1015 HBA. Everything is physical: I have an Intel S1200BTLR board with a Xeon E3-1230 and 32GB of RAM. I also have SSDs for higher workloads. Would investing in a pair of Intel S3500s for a SLOG add value to the array and make it faster?

Thanks,

Andrei

Hi, Andrei. If you’re using iSCSI for VMware with sync=always, or NFS with sync=always or sync=standard, you’ll benefit from having a SLOG. Obviously it depends on your workload. One easy way to tell is to test your workload with sync=disabled, which disables the on-disk ZIL so the ZIL lives only in RAM. If you see a performance increase, you will likely benefit from a SLOG.
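The sync=disabled experiment described above can be scripted. The Python sketch below is a hypothetical helper, not part of FreeNAS. It builds the standard `zfs set sync=...` commands and runs a benchmark callable you supply, first under sync=standard and then under sync=disabled. At the end it always sets the dataset back to sync=standard, on the assumption that this was the original value. The `run` parameter defaults to `subprocess.run`; you can swap it out, for example to print the commands instead of executing them.

```python
import subprocess
from typing import Callable, Dict, List

SYNC_VALUES = ("standard", "always", "disabled")


def sync_cmd(dataset: str, value: str) -> List[str]:
    """Build the `zfs set sync=<value> <dataset>` command line."""
    if value not in SYNC_VALUES:
        raise ValueError(f"unsupported sync value: {value!r}")
    return ["zfs", "set", f"sync={value}", dataset]


def compare_sync(dataset: str, benchmark: Callable[[], float],
                 run: Callable[..., object] = subprocess.run) -> Dict[str, float]:
    """Score `benchmark` under sync=standard and sync=disabled.

    The dataset is always reset to sync=standard (assumed to be the original
    setting), because sync=disabled can lose recent writes on power failure.
    """
    results: Dict[str, float] = {}
    try:
        for mode in ("standard", "disabled"):
            run(sync_cmd(dataset, mode), check=True)
            results[mode] = benchmark()
    finally:
        run(sync_cmd(dataset, "standard"), check=True)
    return results
```

As an example, `compare_sync("tank/vmware", run_my_benchmark)` uses a hypothetical dataset name and benchmark function, and returns one score per mode. A clearly higher "disabled" score suggests a SLOG would help. Because sync=disabled acknowledges sync writes before they reach stable storage, run this only against test data.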

Are used S3500s or S3700s still the best bet for a home SLOG? From what I’ve been able to dig up, even drives like the Samsung 850 EVO/Pro do not behave well as a SLOG (and they do not have a capacitor-backed write cache).

Hi, Mark. I agree, the Samsung 850 isn’t meant to be an enterprise-class SSD. I think the best drives for a SLOG are still the Intel DC S3500 or DC S3700, or their newer versions, the DC S3510, DC S3610, and DC S3710.

Thanks! I just grabbed a 200GB S3700. It should meet my needs.

Hi, I know this post is a little old, but it’s an excellent reference. I upgraded my FreeNAS home lab with a Kingston 240GB V300 SSD and added it as a log device (SLOG) to the existing zpool, which is shared over NFS in a vSphere environment. Storage vMotion is now about nine times faster. My Windows 7 template VMDK flat file (13GB/32GB) used to take 45 minutes to Storage vMotion and now takes 5 minutes. A huge time saver; I am a happy camper.

`[root@nas2] 13567553 -rw------- 1 root wheel 32G Dec 8 16:25 Template-Windows7SP1-Patched-May-2016-flat.vmdk`

– Bare Metal FreeNAS

– Build FreeNAS-9.10.1-U4 (ec9a7d3)

– Platform Intel(R) Core(TM) i5-3470 CPU @ 3.20GHz

– Memory 16241MB

Jeff

Thank you for this benchmark. It seems it is still relevant today.

Compared with the older S3500, the new S3520 models seem much better suited to act as a SLOG, according to Intel’s specifications.

The comparison below sets the smallest S3520, the 150GB model (http://ark.intel.com/products/94037/Intel-SSD-DC-S3520-Series-150GB-2_5in-SATA-6Gbs-3D1-MLC), against two S3500 models. One is the smallest S3500, the 80GB model (http://ark.intel.com/products/74949/Intel-SSD-DC-S3500-Series-80GB-2_5in-SATA-6Gbs-20nm-MLC). The other is the closest in size, the 160GB model (http://ark.intel.com/products/75679/Intel-SSD-DC-S3500-Series-160GB-2_5in-SATA-6Gbs-20nm-MLC).

| | S3500 80GB | S3500 160GB | S3520 150GB |
|---|---|---|---|
| Write (MB/s) | 100 | 175 | 165 |
| Write latency (µs) | 65 | 65 | 35 (!!) |
| Endurance (TBW) | 45 | 100 | 412 (!!) |
| Read (MB/s), of little concern for a ZIL but added for consistency | 340 | 475 | 180 |
| Price ($, Amazon.com, 2017-05) | 100 | 150 | 130 |

With 4 to 10 times higher endurance, much lower write latency (close to an entry-level Zeus, from what I understand), and a low price tag, the S3520 seems like a great fit for a ZIL on paper. It is not so well suited to most other uses, though, given its low read/write speeds.
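The "4 to 10 times" endurance claim follows directly from the TBW figures in the table above. Here is a quick Python check that uses only the Intel spec numbers quoted in this comment:

```python
# Intel spec figures quoted in the comparison table above.
ENDURANCE_TBW = {"S3500 80GB": 45, "S3500 160GB": 100, "S3520 150GB": 412}
WRITE_LATENCY_US = {"S3500 80GB": 65, "S3500 160GB": 65, "S3520 150GB": 35}


def ratio_vs_others(table, target):
    """Return target's value divided by each other drive's value."""
    return {name: table[target] / value for name, value in table.items() if name != target}


endurance_gain = ratio_vs_others(ENDURANCE_TBW, "S3520 150GB")
latency_ratio = ratio_vs_others(WRITE_LATENCY_US, "S3520 150GB")

for name in endurance_gain:
    print(f"vs {name}: {endurance_gain[name]:.1f}x endurance, "
          f"{(1 - latency_ratio[name]) * 100:.0f}% lower write latency")
```

This gives about 9.2x the endurance of the 80GB S3500 and 4.1x that of the 160GB model. The S3520's write latency is about 46% lower than either.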

Of course, the theory would have to be confirmed in practice, with real devices.

I was not able to find a reliable benchmark online for the S3520.

Thanks for the info, Alex. That looks like you’d get a noticeable performance gain. If I were buying today, I’d probably go for the newer model.

I saw in your previous post that you used a VMDK stored on the SSD being tested for comparison. I have a FusionIO card that I can’t pass through directly because FreeNAS doesn’t have the drivers, so I use it as a local datastore on my all-in-one running ESXi 5.5. I tried adding a SLOG via a thick-provisioned VMDK on this datastore, similar to your test setup, but it didn’t seem to help, so I removed the SLOG. The drive is still there, and when I revisited this the other day I tried running dd against it. I noticed I’m only getting about 100MB/s writing to it. I’m assuming you didn’t see any speed limitations through this interface; any other ideas? Thanks.