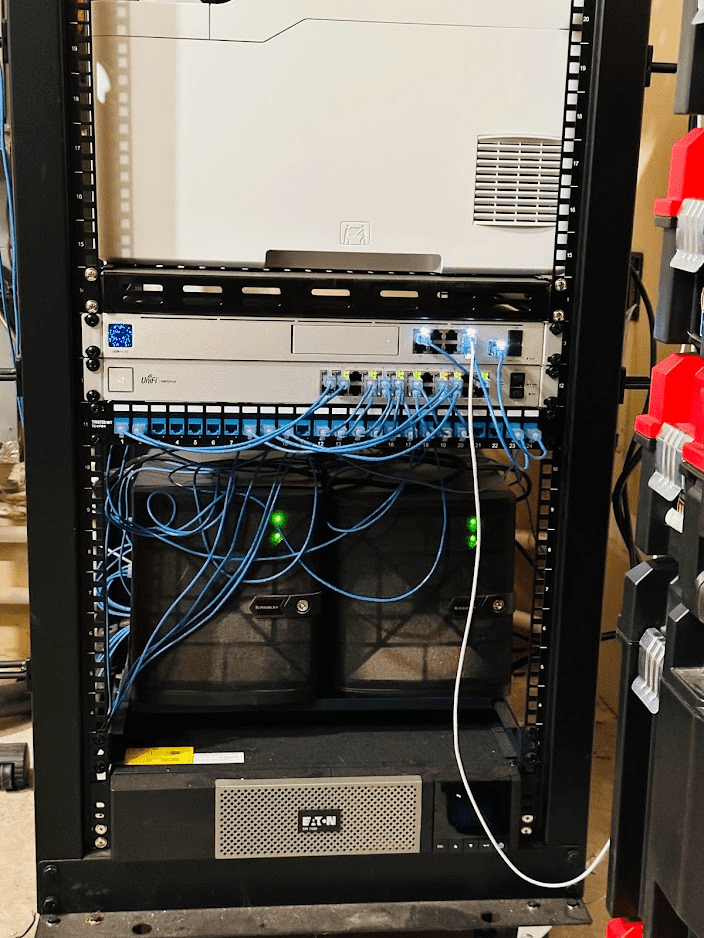

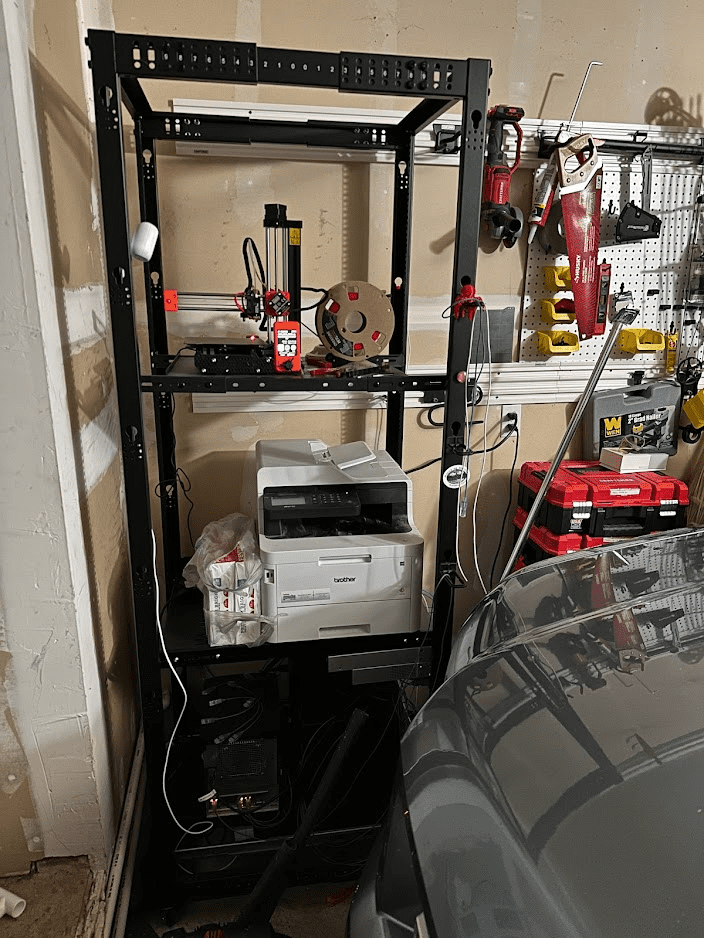

To make more room in the house (converting my home office into a bedroom), I moved my homelab into the garage. It’s still a work in progress and the cable management is a mess, but that’s about as good as it’s going to get for a while since I have other things to attend to. Here’s the progress so far.

Homelab equipment, from the bottom of the rack to the top:

- Eaton 5P 1500RT UPS Battery Backup

- 3 Mini-ITX Supermicro servers running Proxmox VE and TrueNAS Scale. You are loading this website from the server on the left. In hindsight, I should have gotten rackmount servers, but I didn’t think I’d ever put them in the garage.

- 24-port Patch Panel (Amazon)

- UniFi 24-port managed switch (Amazon)

- UniFi Dream Machine SE – Firewall / IPS (Amazon)

- Brother MFC L3770CDW LED Printer

- And above that is a Prusa Mini 3D Printer

Move to the garage:

Rack: To facilitate moving to the garage, I purchased an adjustable depth 42U StarTech server rack. It’s a bit overkill, but I might as well install the tallest rack possible to make efficient use of vertical space and get some of the other office equipment in there. With a few shelves, I could also get the 2D and 3D printers out of the house.

Server Rack Rule #1. Estimate the tallest server rack you think you’ll ever need. Then double it.

Rack Shelves: Since I was moving from my office, not all the equipment is rack-mountable (I’m not even sure if they make rack-mount printers), so I added three shelves: two NavePoint shelves and one StarTech shelf. Both brands work, but the StarTech is a lot deeper (more space efficient, since it provides a few more inches of shelf space), so I prefer that one.

UPS: Coincidentally, my UPS battery backup failed just prior to the move, which made it the perfect time to switch to a rackmount UPS, so I installed the Eaton.

Grounding the rack: I was going to drive a ground rod just outside the garage and run a ground wire through the wall, but Mike told me driving a new ground was a bad idea–he said something about ground loops. Apparently, it’s better for everything in your house to tie into a common ground. So, I ran a copper wire to the cold water pipe on the hot water heater.

Server Rack Rule #2. Always ground your rack to a common ground.

Cabling: I re-routed the fiber internet to the garage and ran a few cables back to the house for the PoE WiFi access points.

Hot Water Heater Drainage Reroute: Even though the rack is on casters, I wasn’t comfortable having the hot water heater pan drain right onto the garage floor. So I drilled a hole in the wall and ran a PVC pipe outside, designed so that the pan primarily drains outside the house and overflows onto the garage floor only if the PVC pipe freezes. Again, I have the casters, so the servers should be okay, but I didn’t want the default situation to be standing water under the rack.

It’s also a good idea to make sure the roof doesn’t leak. But if it does, just throw a tarp up to keep the servers dry. I always keep a tarp in my truck so should be good.

Server Rack Rule #3. Do not let the servers get wet.

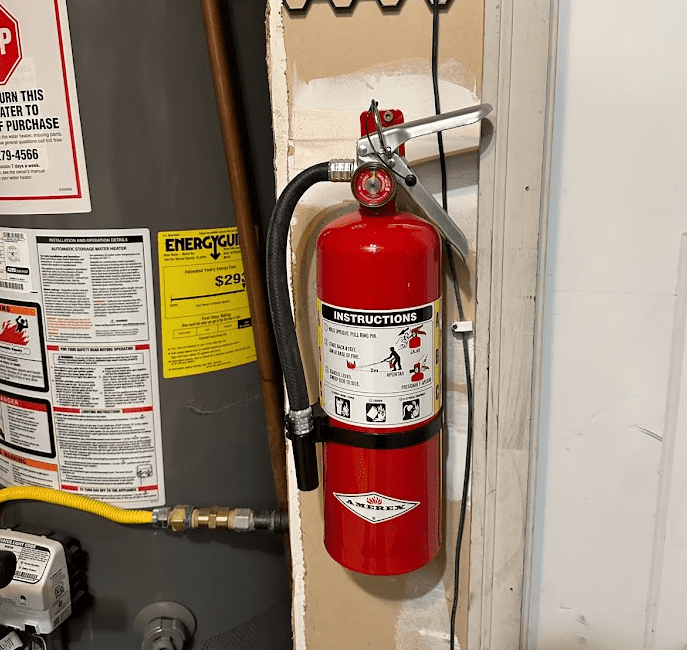

Fire Safety. I figure if there is a fire in the garage, it is most likely to be electrical or liquid, so I installed an ABC (paper/wood, gas/liquids, electrical) fire extinguisher, which should cover pretty much any flammable material in the garage.

So, I noticed there are no fire alarms in the garage. I figure with the servers in there, I’d still like to get an early warning to abandon house. I installed a Splenssy heat alarm designed for garage use above the rack–if there’s a fire in the garage, the alarm should sound. I’m not sure we’ll hear it from inside the house, but it should set off the dogs.

I know this is reliable because the dogs alert me when the 3D printer fault alarm goes off. Finally, the dogs are starting to pay off! Good job, dogs.

Every time I park in the garage…

I have four inches to spare with the cars parked in there. But it’s a pretty nice setup holding my networking gear, servers, printer, and 3D printer. The only annoyance is I have to pull the truck out to do any maintenance.

A few concerns I had about putting the rack in the garage

- Dust. There is a lot of dust in the garage from the concrete. I would say dust accumulates at a faster rate than in the house, but I think it’s fine if I blow out the servers every couple of years.

- Humidity. Initially, I was concerned about garage moisture and condensation on the servers… but I’ve had no issues at all. I then realized that an ice-cold drink collects condensation–but not a hot drink. The servers should always be warmer than the ambient temperature, so this shouldn’t be an issue at all.

- Cooling. This summer, I had an issue with cooling for a few weeks in the hottest part of the year. My Intel Xeon-D server was throttling due to high temperatures. I ended up failing most of the workload over to the AMD EPYC server and shutting down non-critical workloads for a couple of weeks. The AMD EPYC CPU didn’t have to throttle at all. This could probably be addressed by pointing a fan at the server rack during the hottest week of the year or installing some sort of ventilation system. Most of the time North Idaho gives you free cooling.

- Access. Now, whenever I need to use the printer, I have to back the car out–to say that’s annoying is an understatement. However, I can always print and just pick it up next time I leave or come home since I’d be pulling the truck out anyway–I suspect checking the printer before I pull into the garage will become a habit, like checking the mail.
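
The humidity reasoning above (condensation forms on a cold drink but not a hot one) can be checked with a dew point estimate. Here is a minimal sketch in Python using the Magnus approximation. The function names are made up for illustration, the constants are one commonly used parameter set, temperatures are in °C, and the example readings are placeholders rather than measurements from this garage.

```python
import math

# Magnus approximation constants (one commonly used parameter set, temps in Celsius).
MAGNUS_B = 17.62
MAGNUS_C = 243.12


def dew_point_c(air_temp_c: float, rel_humidity_pct: float) -> float:
    """Estimate the dew point (Celsius) from air temperature and relative humidity."""
    gamma = math.log(rel_humidity_pct / 100.0) + (MAGNUS_B * air_temp_c) / (MAGNUS_C + air_temp_c)
    return (MAGNUS_C * gamma) / (MAGNUS_B - gamma)


def condensation_risk(surface_temp_c: float, air_temp_c: float, rel_humidity_pct: float) -> bool:
    """Condensation can form only when a surface is at or below the dew point."""
    return surface_temp_c <= dew_point_c(air_temp_c, rel_humidity_pct)


if __name__ == "__main__":
    # Placeholder readings: 20 C garage air at 50% relative humidity.
    print(f"dew point: {dew_point_c(20.0, 50.0):.1f} C")
    # A running server chassis at 30 C is well above the dew point.
    print("warm server at risk:", condensation_risk(30.0, 20.0, 50.0))
    # A 5 C surface (the ice-cold drink) is below it.
    print("cold drink at risk:", condensation_risk(5.0, 20.0, 50.0))
```

The dew point can never exceed the air temperature, so a chassis that is warmer than the surrounding air stays above it. The one case this sketch does not cover is a server that has been powered off and cooled down before warm, humid air arrives.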

My homelab probably saves me ~$290 in monthly hosting fees, but boy, after all that work and considering it’s eating up 6 square feet of garage space, the cloud becomes quite tempting.

Moves and changes are always so overwhelming! lol

Over the last ten years, I went from a media server, to a full-blown home lab (four HP Z800 workstations running XenServer / ESXi), to a mix where some machines were dedicated media servers and some were for the home lab, to a point where I started consolidating and scheduling power on and off to conserve power.

Most recently, I was running about 95% of everything on a single Z800, with my firewall on a dedicated PC tower, in a server cabinet in my garage. The heat level in the garage would commonly be around 100 degrees in the summer (outside temps in the mid-80s).

With the Z800s being Xeon-based (and super power hungry), I have now moved over to a Protectli fanless for my firewall and am re-doing the lab using NUC10i7 devices running XCP-ng. My power draw has gone from 400W to 50W. Noise is basically zero, and there is essentially no more heat emanating from the cabinet.

NUC10i7 w/ 64GB RAM, an NVMe drive to boot and a 1TB SSD to store VHDs is about $900.

Those little NUCs look nice, Mark! Actually not a bad price at all considering the specs. Are you planning to cluster the NUCs so you can survive a node failure, or run them as individual servers and just rely on backups?

I’m pretty impressed at how well they handle the workloads.

NUC is about $550 (barebones). 64GB RAM is about $175. NVMe drive is like $50. The rest of the cost comes down to how big of an SSD you put in it (and its quality). Power draw is 30W or less almost always.
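
For anyone pricing out a similar node, the arithmetic above can be sketched in Python. The three part prices are the ones quoted in this comment, and the $900 total comes from the earlier comment. The function names and the SSD figure are illustrative only.

```python
# Part prices quoted above (USD); the SSD is deliberately left out because its price varies.
FIXED_PARTS = {
    "NUC10i7 barebones": 550,
    "64GB RAM": 175,
    "NVMe boot drive": 50,
}


def build_cost(ssd_price: float, parts: dict = FIXED_PARTS) -> float:
    """Total cost of one node for a given SSD price."""
    return sum(parts.values()) + ssd_price


def ssd_budget(target_total: float, parts: dict = FIXED_PARTS) -> float:
    """How much is left for the SSD if the whole node should hit a target total."""
    return target_total - sum(parts.values())


if __name__ == "__main__":
    print("fixed parts:", sum(FIXED_PARTS.values()))
    print("SSD budget for a $900 node:", ssd_budget(900))
```

With these numbers the fixed parts come to $775, which leaves about $125 for the 1TB SSD in a $900 build.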

I’ve always run my virtualization environment with most of the virtual hard drives stored on a NAS and accessed via NFS (some guests I tie to a host and store the hard drive on the local SSD). That way, I can start them up on any host without any sort of configuration change. And, if a host goes down unexpectedly, it’s easy to just start the guest up on another host with minimal (if any) data loss. Snapshots and backups of the virtual hard drives are “more than good enough” for home use.

I also have a couple of NUC5s that run services in a redundant fashion to support the home network (things like DNS and print services). I bought these for under $20 each and added a $15 SSD and a $10 power adapter. These are more in line with an RPi (only more powerful overall, and x86 architecture), but the reliability is immensely higher because you can boot them from SSD.

My new home lab is “paying for itself” by reducing the electric bill by well over $100/month. That cost reduction will only go up as the electric rates here continue to skyrocket.
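
As a rough way to relate wattage to the electric bill, here is a minimal Python sketch. The 400W and 50W figures are the ones mentioned earlier in this thread. The electricity rates are placeholders (no rate was given), the function names are made up, and the sketch assumes the gear runs 24 hours a day for a 30-day month. It counts only the direct draw of the equipment, nothing else.

```python
def monthly_kwh(watts: float, hours_per_day: float = 24.0, days: float = 30.0) -> float:
    """Energy used in a month by a constant load, in kWh."""
    return watts * hours_per_day * days / 1000.0


def monthly_savings(old_watts: float, new_watts: float, rate_per_kwh: float) -> float:
    """Monthly cost difference between two constant loads at a given rate (USD per kWh)."""
    return (monthly_kwh(old_watts) - monthly_kwh(new_watts)) * rate_per_kwh


if __name__ == "__main__":
    saved_kwh = monthly_kwh(400) - monthly_kwh(50)
    print(f"energy saved: {saved_kwh:.0f} kWh/month")
    # Placeholder rates; substitute the rate from your own bill.
    for rate in (0.15, 0.25, 0.40):
        print(f"at ${rate:.2f}/kWh: ${monthly_savings(400, 50, rate):.2f}/month")
```

Going from 400W to 50W works out to 252 kWh per month under these assumptions, so the dollar figure scales directly with the local rate.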

> Dust. There is a lot of dust in the garage from the concrete. I would say dust accumulates at a faster rate than in the house, but I think it’s fine if I blow out the servers every couple of years.

It depends on how dusty the air is. But if you have very fine particles like concrete dust, it is wise to take some measures. Concrete dust can harden in the presence of water vapour (humidity). For example, that’s why home vacuum cleaners die if you use them after construction work: the impeller of the motor/turbine picks up a lot of concrete dust, and there is humidity in the air. The result is a badly unbalanced impeller, which leads to heavy damage to the bearings.

I have an online UPS with a fan that never turns off. I made a simple air intake filter from:

1) 2 to 4 layers of medical cotton gauze (similar to the one pictured on the left here -> https://en.wikipedia.org/wiki/Dressing_(medical)#/media/File:ThreeTypesOfGauze.JPG)

2) Masking tape (https://en.wikipedia.org/wiki/Masking_tape) for making the edges of the rectangle;

3) a little more masking tape for attaching the filter to the UPS.

Here is a real example: https://cdn.imgpaste.net/2022/12/21/KkvgL5.jpg

This was in use for around 5-6 months. Yes, the air where I live is quite dirty.

Another note: blowing dust out of computer equipment could have ill effects. The better approach is to use a brush (one that doesn’t produce static electricity). For example, check what machines are used to clean the PCBs on a production line.

I’m like you but have my stuff in the cloud. As soon as I buy a house, I’m moving all that hosting “in-house.” I did recently reduce my monthly cost, but I’m not getting the overall speed I want, which might mean I need to try LiteSpeed.