The other day I got a little frustrated with my Gen 8 Microserver. I was trying to upgrade ESXi to 5.5, but the virtual media feature kept disconnecting in the middle of the install because I didn’t have an ILO4 license installed. I actually bought an ILO4 enterprise license, but I have no idea where I put it! What’s the point of IPMI when you get stopped by licensing? I hate having to physically plug in a USB key to upgrade VMware so much that I decided I’d just build a new server, which I honestly think is faster than messing around with getting an ISO image onto a USB stick.

Warning: I’m sorry to say that I cannot recommend this motherboard that I reviewed earlier: I ended up having to RMA this board twice to get one that didn’t crash. The Marvell SATA Controller was never stable long term under load even after multiple RMAs so I ran it without using those ports which sort of defeated the reason I got the board in the first place. Then in 2017 the board died shy of 3 years old, the shortest I have ever had a motherboard last me. Generally I have been pretty happy with ASRock desktop boards but this server board isn’t stable enough for business or home use. I have switched to Supermicro X10SDV Motherboards for my home server builds.

Build List

- SilverStone DS-380 ($150 at Amazon) 8 bay hot-swap

- ASRock Avoton C2750D4I ($400)

- Seagate Barracudas 7200 RPM (2 TB) and Seagate 600 Pro SSD (I already had the 2TB drives and I got the SSD at TigerDirect but they’re out now).

- Kingston 2 x KVR16E11/8I DDR3-1600 8GB ECC ($85)

- Power Supply: FSP300-60GH 300W ($45)

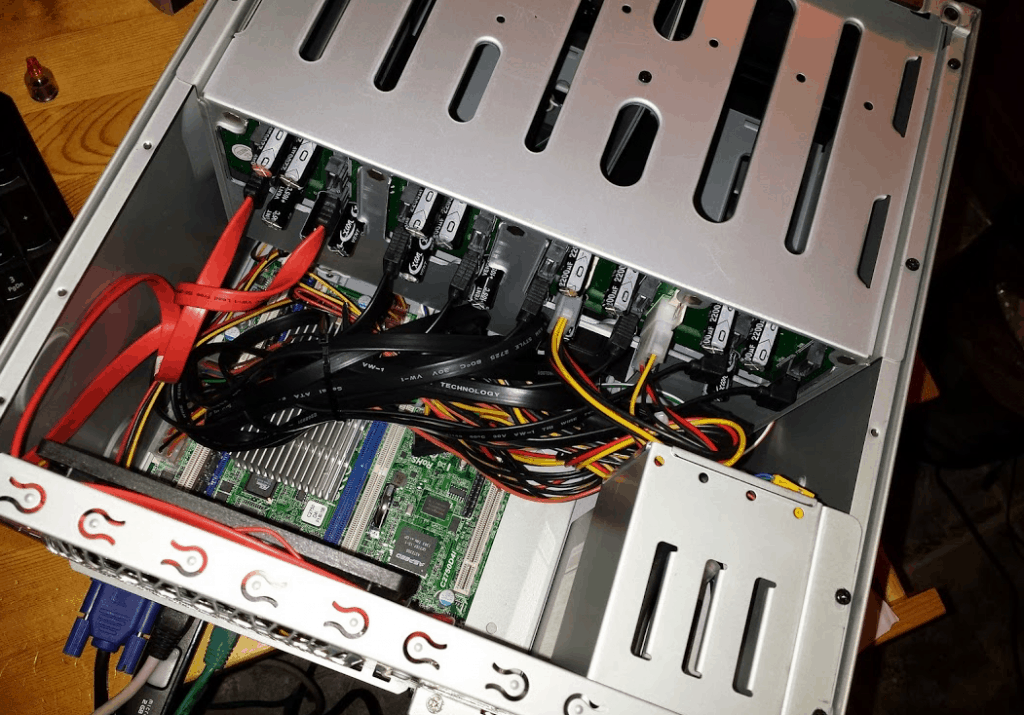

ASRock C2750D4I Motherboard / CPU

Update: 2014-05-11. Here’s a great video review on the motherboard…

12 SATA ports! This motherboard is perfect for ZFS which loves having direct access to JBOD disks. The Marvell SATA controllers did not show up in VMware initially, however Andreas Peetz provides a package for adding unsupported drivers in VMware, and this worked perfectly. It took me a couple minutes to realize all you need to do is run these three commands:

Update November 16, 2014 .. it turned out the below issue was caused by a faulty Marvell controller on the motherboard, I ran FreeBSD (a supported OS) and the fault also occurred there so I RMAed the motherboard … I ended up getting a bad motherboard again but after a second RMA everything is stable in VMware… so you can disregard the below warning.

Update March 12, 2015. My board continues to function okay, but some people are having issues with the drives working under VMware ESXi. Read the comments for details.

Update August 23, 2014 ** WARNING Read this before you run the command below ** I had stability issues using the below hack to get the Marvell controllers to show up. VMware started hanging as often as several times a day, requiring a system reboot. This is the entry in the motherboard’s event log: Critical Interrupt – I/O Channel Check NMI Asserted. I swapped the Kingston memory out for Crucial memory from ASRock’s HCL, but the issue still persisted, so I can’t recommend this driver for VMware. After heavy I/O tests ZFS also detected data corruption on two drives connected to the Marvell controllers. I am pretty sure this is because VMware does not officially support these controllers, so this issue likely doesn’t exist for operating systems that officially support the Marvell controller.

esxcli software acceptance set --level=CommunitySupported

esxcli network firewall ruleset set -e true -r httpClient

esxcli software vib install -d http://vibsdepot.v-front.de -n sata-xahci

IPMI (allows for KVM over IP). After being spoiled by this on a Supermicro board, IPMI with KVM over IP is a must-have feature for me. I’ll never plug a keyboard and monitor into a server again.

Avoton Octa-Core processor. Normally I don’t even look at Atom processors, but this is not your grandfather’s Atom. The Avoton processor supports VT-x, ECC memory, and AES instructions, and is a lot more powerful than older Atoms at only 20 W TDP. This CPU Boss benchmark says it will probably perform similarly to the Xeon E3-1220L. The Avoton can also go up to 64GB of memory where the E3 series is limited to 32GB, making it a good option for VMware or for a high-performance ZFS NAS. The Avoton does not support VT-d, so there is no passing devices directly to VMs.

My only two disappointments are no internal USB header on the board (I always install VMware on a USB stick so right now there’s a USB stick hanging on the back) and I wish they had used SFF-8087 mini-SAS connectors instead of individual SATA ports on the board to cut down on the number of SATA cables.

Overall I am very impressed with this board and its server-grade features like IPMI.

Instead of going into more detail here, I’ll just reference Patrick’s review of the ASRock C2750D4I

Alternative Avoton Boards

There are a few other options worth looking at. The ASRock C2550D4I is the same board but Quad core instead of Octa Core. I actually almost bought this one except I got the 2750 at a good price on SuperBiiz.

Also the Supermicro A1SAi-2750F (Octa core) and A1SAi-2550F (Quad core) are good options if you don’t need as many SATA ports or you’re going to use a PCI-E SATA/SAS controller. Supermicro’s motherboards have the advantage of Quad GbE ports and an internal USB header (not to mention USB 3.0), while sacrificing the number of SATA ports: only 2 SATA3 ports and 4 SATA2 ports. These Supermicro boards use the smaller SO-DIMM memory.

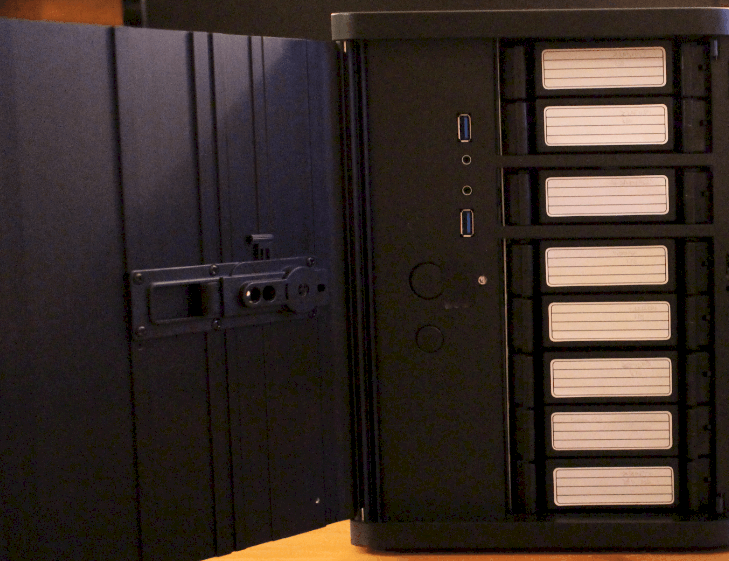

Silverstone DS-380: 8 hot-swap bay chassis

The DS-380 has 8 hot-swap bays, plus room for four fixed 2.5″ drives for up to 12 drives. As I started building this server I found the design was very well thought out. Power button lockout (a necessity if you have kids), locking door, dust screens on fan intakes, etc. The case is practical in that the designers cut costs where they could (like not painting the inside) but didn’t sacrifice anything of importance.

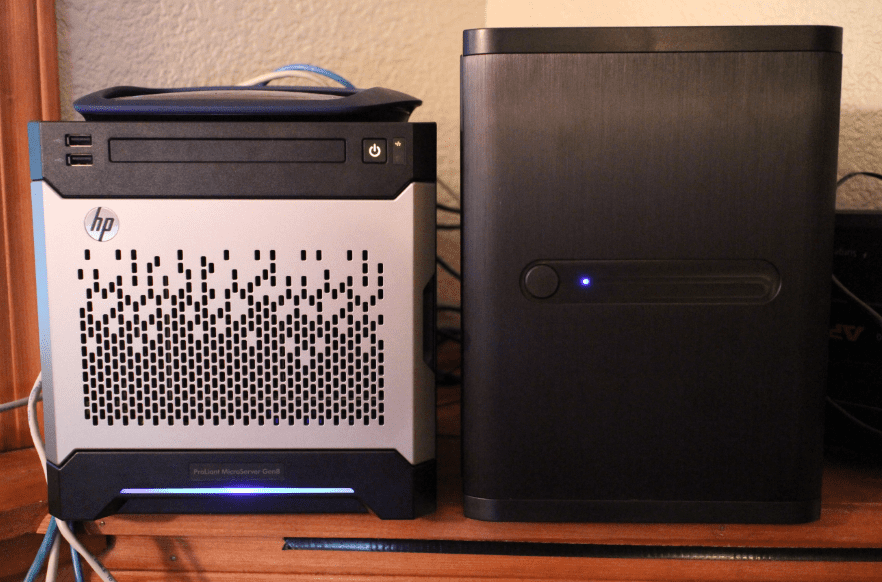

A little larger than the HP Gen8 Microserver, but it can hold more than twice as many drives. Also the Gen8 Microserver is a bit noisier.

You’ll notice above that, from the top, there is a set of two drives, then one drive by itself, and then a set of five drives. This struck me as odd at first, but it is that way by design. If you have a tall PCI card plugged into your motherboard (such as a video card) you can forfeit the 3rd drive from the top to make room for it.

The drive trays are plastic, obviously not as nice as a metal tray but not too bad either. One nice feature is screw holes on the bottom allow for mounting a 2.5″ drive such as an SSD! That’s well thought out! Also there’s a clear plastic piece that runs alongside the left of each tray that carries the hard drive activity LED light to the front of the case (see video below).

Here’s the official Silverstone DS-380 site, and here’s a very detailed review of the DS-380 with lots of pictures by Lawrence Lee.

Storage

With 4TB drives, the 8 bays would get you to 24TB usable with RAID-Z2 or RAID-6, and you would still have the four fixed 2.5″ bays left for SSDs.
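The 24TB figure comes from RAID-Z2 (like RAID-6) giving up two drives’ worth of space to parity. Here is a minimal shell sketch of that arithmetic. The function name raidz_usable_tb is made up for illustration, and the result ignores filesystem overhead and the TB/TiB difference:

```shell
#!/bin/sh
# Hypothetical helper: rough usable capacity of a single RAID-Z vdev.
# usage: raidz_usable_tb <drives> <parity_drives> <size_tb_per_drive>
raidz_usable_tb() {
    drives=$1
    parity=$2
    size_tb=$3
    # Data drives = total drives minus parity drives.
    echo $(( (drives - parity) * size_tb ))
}

raidz_usable_tb 8 2 4   # 8 x 4TB drives in RAID-Z2
```

The same function with a parity of 1 gives the RAID-Z1 / RAID-5 number for comparison.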

Virtual NAS

I run a virtualized ZFS server on OmniOS following Gea’s Napp-in-one guide. I deviate from his design slightly because I run on top of VMDKs instead of passing the controllers to the guest VM (because I don’t have VT-d on the Avoton).

ZIL – Seagate SSD Pro

120GB Seagate Pro SSD. The ZIL (ZFS Intent Log) is the real trick to high-performance random writes. Because the SSD has a capacitor-backed cache, it can safely acknowledge a write to the requesting application before the data is transferred out of RAM and onto the spindles.
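For reference, attaching an SSD as a dedicated log device is a single zpool add. The sketch below only prints the command, so it is safe to paste. The pool name tank and the device name c2t3d0 are placeholders rather than values from this build, and the helper name is made up:

```shell
#!/bin/sh
# Hypothetical helper: print (do not run) the command that attaches a
# dedicated log (SLOG) device to an existing pool.
# usage: plan_add_slog <pool> <device>
plan_add_slog() {
    echo "zpool add $1 log $2"
}

plan_add_slog tank c2t3d0
# After running the printed command for real, `zpool status tank`
# should list the device under a "logs" section.
```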

So far…

I’m pretty happy with the custom build. I think the Gen 8 HP Microserver looks more professional compared to the DS-380 which looks more like a DIY server. But what matters is on the inside, and having access to IPMI when I need it without having to worry about licensing is worth something in my book.

Great job!

Will you transfer IBM m1015 to it, and how is overall performance in RAID? How loud this case?

I was looking to this motherboard but after all probably will go with Xeon supported, at least 1220Lv3 with E3C224D4I-14S motherboard.

Again, perfect build.

Orkhan.

Hi, Orkhan. With 12 SATA ports I don’t think I’ll try moving the M1015 over to it.. I would certainly do it if there was an advantage like VT-d but you can’t do that on Avoton.

The case is fairly quiet. I wouldn’t call it silent, but it’s not as noisy as the HP Gen8 Microserver. Performance is very good; here’s an OLTP test. This is with 3x7200RPM Seagate Barracudas in RAID-Z and the Seagate SSD Pro as ZIL.

sysbench --test=oltp --max-time=20 run --mysql-user=root --mysql-password=****

sysbench 0.4.12: multi-threaded system evaluation benchmark

No DB drivers specified, using mysql

Running the test with following options:

Number of threads: 1

Doing OLTP test.

Running mixed OLTP test

Using Special distribution (12 iterations, 1 pct of values are returned in 75 pct cases)

Using "BEGIN" for starting transactions

Using auto_inc on the id column

Maximum number of requests for OLTP test is limited to 10000

Threads started!

Time limit exceeded, exiting…

Done.

OLTP test statistics:

queries performed:

read: 38556

write: 13770

other: 5508

total: 57834

transactions: 2754 (137.66 per sec.)

deadlocks: 0 (0.00 per sec.)

read/write requests: 52326 (2615.49 per sec.)

other operations: 5508 (275.32 per sec.)

Test execution summary:

total time: 20.0062s

total number of events: 2754

total time taken by event execution: 19.9909

per-request statistics:

min: 2.65ms

avg: 7.26ms

max: 137.46ms

approx. 95 percentile: 18.32ms

Threads fairness:

events (avg/stddev): 2754.0000/0.00

execution time (avg/stddev): 19.9909/0.00

If you try the E3C224D4I-14S please let me know! I was half thinking of trying that so I could get VT-d… I’d be very curious to know if the onboard LSI-2308 can be flashed to IT mode and passed to a VMware guest running ZFS.

Ben

I connected m1015 into this on Solaris 11.2 and it did not show the drives in the OS. The m1015 was flashed into IT mode.

Thanks for the write up. How are you finding the DS380 in terms of HDD cooling? The SPCR article wasn’t particularly flattering of this case in this regard. It’s a concern for me as it gets above 100 quite often over summer where I live and I’d be intending on using 6 x HGST 4TB SATA disks. Do you think this could be helped with better 120mm fans or implementing some more suitable cable management?

Hi, Dominic. I think the DS380 has better cooling than my HP Gen 8 Microserver… there are three fans and the airflow seems adequate to me but keep in mind I don’t have that many cases to compare it to and I’m not an expert on cooling–SPCR probably has a lot more experience in that area. All the fans can be controlled by the motherboard so you can set the “lowest” speed setting to higher if you’re not concerned about noise–I keep mine at the lowest RPM setting possible but my basement doesn’t get that warm either. Another case you may consider is the U-NAS 800. http://www.u-nas.com/product/nsc800.html Although I can’t say how it compares to the DS380 on cooling.

Thanks for the reply. I did see the NSC800 and probably prefer that case based on the dimensions for the space I have and the HDD trays. However, as you mention in your write up the power button lockout is a must have with children around. Unless I can find some way to fence off my computer desk, then I’d say the DS380 is currently my only choice.

On one of the many forums I’ve been trawling through these past few days, someone mentioned the cooling issues of the DS380 stated in the SPCR article were overstated. Simply removing the filter will drop HD temp by about 4C apparently.

You’re welcome. I agree with the assessment that the air filter does hamper air flow, I noticed with the filter off a lot more air went through. But if you’re concerned about children hitting the power button you really want that filter there because it’s the only thing between their fingers and the fans… I once barely touched a fan spinning at high RPM and a blade snapped right off. If you can limit access to the left side then that won’t be an issue.

My guess is that a higher temperature won’t increase drive failure rate as much as not keeping a consistent temperature, but I haven’t actually read any studies so I could be wrong. Also, the Deskstars you are looking at should be very reliable.

One other thing I just thought of is on the NSC-800 you could just not wire the case power button to the motherboard and use the out of band management port (IPMI) to toggle power.
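As a rough sketch of that idea, ipmitool can drive chassis power over the network. The BMC address and user name below are placeholders and the helper name is made up. The function only prints the command. The -E flag tells ipmitool to read the password from the IPMI_PASSWORD environment variable instead of the command line:

```shell
#!/bin/sh
# Hypothetical helper: print the ipmitool command for a chassis power action.
# usage: plan_ipmi_power <bmc_host> <user> <status|on|off|cycle>
plan_ipmi_power() {
    echo "ipmitool -I lanplus -H $1 -U $2 -E chassis power $3"
}

plan_ipmi_power 192.0.2.10 admin status
```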

One more thing. Have you had any stability issues with the ASRock C2750D4I? In particular around using the Marvell SATA ports? I’ve read on multiple forums of disks throwing errors, dropping out of arrays or even complete system lock ups. Some people have reported stability improvements by changing SATA cables and/or disabling Aggressive Link Power Management and Hot Swap in the BIOS, though I think having to disable these options negates the usefulness of having a Hot Swap case. One user on the LimeTech forums reported it to ASRock, who said they thought it was a Linux driver issue for the Marvell chipset as they were unable to reproduce it using Windows. From what I’ve read however the crash/stability issues appear to be happening on both Linux and Windows based machines.

I haven’t had any trouble with the Marvell SATA ports under VMware ESXi 5.5. But I haven’t tried running anything other than VMware directly on the metal. I have also done a few hotswap tests with success.

Good to hear. I think I might just keep watching how the Avoton develops. There just seem to be too many issues around stability with this board in the wider community for it to be just user error. Added to this, the ASRock Server line of boards is not available locally, so I would have to import them. That makes warranty returns expensive in both money and time.

Well, Dominic. I think you were wise to wait to see how the board develops… I started getting PSODs in VMware..


bro,

I have the same mobo as you. I was thinking of doing the same setup because I don’t want to spend money on another mobo just for VMware.

I went through this guide and saw that FreeBSD RAID-6 with 6 disks can reach 420MB/sec in read/write.

Would you mind sharing your read/write performance with us?

Hi, CH Chong.

I ran Bonnie++ on my OmniOS VM and here are the results. In my current configuration the OmniOS VM has 2 cores, 4GB memory, and is running on RAID-Z1 with LZ4 compression enabled across 3 vmdks which are on 2TB Seagate 7200 RPM drives. I am also using a vmdk on a Crucial M500 as a ZIL device. The ZIL is probably not being used for sequential writes, but the SSD as ZIL helps a lot with random IOPS… Anyway, here are the results for sequential reads/writes:

Seq-write: 304MB/s

Seq-read: 651MB/s

I imagine the performance could be improved by giving more cores to OmniOS or by having more spindles (I only have 3), but for my needs this is plenty.

Also, using CIFS from clients outside the server (e.g., my desktop) I am easily able to saturate gigabit Ethernet. My transfer speeds for large ISO files on reads and writes are a little higher than 100MB/s.
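For context on why a bit over 100MB/s counts as saturated: a gigabit link carries 1000 megabits per second, which works out to 125MB/s before any protocol overhead. A quick shell sketch of the conversion (the function name is made up for illustration):

```shell
#!/bin/sh
# Hypothetical helper: theoretical ceiling of a link in MB/s (8 bits per byte),
# ignoring Ethernet, TCP/IP and SMB overhead.
# usage: link_ceiling_mbytes <link_speed_in_megabits>
link_ceiling_mbytes() {
    echo $(( $1 / 8 ))
}

link_ceiling_mbytes 1000
```

Real-world file copies land somewhat below that ceiling once framing and protocol overhead are taken out.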

Hope that helps.

hi Ben,

Thanks for your input. I guess my setup will be the same as yours; maybe I will get an additional SSD for VMware host cache purposes.

By the way, I have another question regarding OmniOS. I’m currently using FreeBSD, and FreeBSD doesn’t have native CIFS for ZFS. I need to install Samba and then share over CIFS through Samba.

In Solaris, we use sharesmb with ZFS. Is OmniOS the same as Solaris in this respect?

I’ve had a VMDK with the L2ARC, a VMDK with the ZIL, and VMware’s Hostcache all on the same SSD without performance issues. I think you’ll bottleneck on the CPU before you’d take advantage of two SSDs but that’s just a guess. I’m using a Crucial M500, but if I was buying today I’d suggest one of Intel’s enterprise class SSDs since they have capacitors to flush the cache in case of power loss. Either the Intel DC S3500 ($100 at SuperBiiz: http://www.superbiiz.com/detail.php?p=SCBB080G) or Intel’s DC S3700 (just over $200 at http://amzn.to/1ukHUGS). The S3700 is twice as expensive but also a lot faster on random write IOPS, so if you were going to get two S3500s you might consider a single S3700. But obviously you won’t know which is best unless you benchmark both. Here are some favorable comments on Intel as being one of the few brands that doesn’t corrupt data on power loss: http://lkcl.net/reports/ssd_analysis.html

Yes, you can build my same setup on FreeBSD with Samba… I built it using http://www.freenas.org/ which is a great ZFS NAS appliance, but I couldn’t get the same Samba performance out of it as I can with OmniOS’s CIFS. With FreeNAS 9.2.1.6 I was only able to get 65MB/s on writes in my environment where OmniOS saturates a gigabit connection… I haven’t spent time trying to tune it, so maybe I could squeeze more performance out of it. But OmniOS gives me full performance out of the box. OmniOS is based on illumos, which is a fork of OpenSolaris, so you’ll feel right at home with it. There’s some good documentation on Gea’s site: http://www.napp-it.org/ such as installing the VMXNET3 drivers, and if you want to you can install his web-based GUI.

If you are going to use iSCSI instead of NFS for VMware storage, FreeNAS has VMware VAAI support: http://forums.freenas.org/index.php?threads/ctl-is-now-the-iscsi-target-in-freenas.22256/ where OmniOS and Solaris based OSes don’t.

regarding the USB header, it is there.

http://download.asrock.com/manual/C2750D4I.pdf please see page 23

http://www.supermicro.com/products/motherboard/ATOM/X10/A1SA7-2750F.cfm see this board, insane

Heh… At some point they should start using mini-sas connectors instead of SATA ports to save room. I think it’s just a little too wide to fit in my case…it would probably work if I got one of those pico power supplies.

dude,

Is it a motherboard issue or something else?

Yep. So far I’ve had two bad motherboards: the original one I bought and the first RMA. Now I’m waiting on my second RMA. I tried using FreeBSD (which is on the HCL) and had the same problem as I do in VMware, where it locks up randomly when drives are connected to the Marvell controllers. If I use the Intel controllers I don’t have that problem.

All 6 of my 3TB WD Reds are connected to the Marvell SATA ports. I’m running FreeBSD 10. Maybe I’m not a heavy user (I don’t keep it on 24x7), which is why I haven’t encountered a similar problem.

I should try to run esx 5.5

I think you have one of the few good motherboards out there.

hi Ben,

look like many ppl having similar issue.

http://forums.tweaktown.com/asrock/56730-c2750d4i-stability-problems-2.html

btw, i did copy the data , 450GB from local disk to my zpool. No hung at all.

cp -rp /backup/* /tank/backup/

Just an update, after the 2nd RMA I got a motherboard that works!

hello,

Is this board compatible with RAID under ESXi?

Is KTH-PL313Q8LV memory compatible with this board?

thank you

No. I use ZFS instead of RAID.

Thank you for your reply

My plan is to install vmware esxi 5.5 on this motherboard

But I wish a tolerance of failure on hard drive

Can you explain how you made with ZFS

configuration

ASRock C2750D4I

2 WD Black hard drives Desktop WD2002FAEX

16GB of ram

thank you

Hi zode94.

I use OmniOS for my ZFS server. The basic idea is you install OmniOS to vmdk on a separate drive (I used a small SSD), then create a vmdk on each of your two data drives, give them to OmniOS and create a ZFS mirror. Then you share the ZFS dataset back to VMware via NFS. I pretty much followed Gea’s documentation: http://www.napp-it.org/help/index_en.html

The only difference with my setup is, because the Avoton CPUs don’t support VT-d I couldn’t pass the controller to the OmniOS (ZFS Server) vm. Instead I created vmdks. This is not a supported configuration at all and could result in data loss. I have not had any data loss using this method, but using a CPU that does VT-d is the safer practice.
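The ZFS side of the steps above can be sketched in a few commands. This is only an illustration: the pool name, dataset name and disk names are placeholders, the helper name is made up, and the function prints the commands rather than running them. ESXi may also need extra NFS share options (such as root access), so check Gea’s documentation before relying on a plain sharenfs=on:

```shell
#!/bin/sh
# Hypothetical helper: print (do not run) the commands for a two-disk ZFS
# mirror with a dataset shared over NFS.
# usage: plan_mirror_nfs <pool> <dataset> <disk1> <disk2>
plan_mirror_nfs() {
    pool=$1
    dataset=$2
    echo "zpool create $pool mirror $3 $4"
    echo "zfs create $pool/$dataset"
    echo "zfs set sharenfs=on $pool/$dataset"
}

plan_mirror_nfs tank vmstore c2t1d0 c2t2d0
```

Inside the OmniOS VM, the two disks would be the virtual disks backed by the vmdks on each data drive.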

Hope that helps,

Ben

Thank you for your reply,

If I understand correctly, ESXi and OmniOs stores on the SSD

Your SSD has no fault tolerance ?

Fault tolerance is only on the datastores ?

This configuration looks to me to be more difficult to implement

I am trying to mount an ESX on a low power configuration

Sorry for the translation, i am french

thank you

hi Ben,

The 2nd RMA is revision 1.1 ?

Hi, if you remove the 2.5 sata bay is there a way to squeeze 4 more 3.5 hdd drives there space wise? I mean could we put some kind of cage for 4 x 3.5 drives and it will fit? It would be nice to use all the sata ports.

Hi, Paul. The 4 x 2.5″ bay can be removed but space is very tight already, so you wouldn’t have enough room to fit 4 more 3.5″ drives. You might have better luck with a regular ATX case. If you want to max out the SATA ports then you could use 4 x 2.5″ SSDs for caching and then load up the 8 bays with 3.5″ drives. How much space do you need? With 8 x 4TB drives in RAID-Z2 (RAID-6) that would give you 24TB.

Thanks for replying.

4TB drives are too expensive for now and I already have 8 x 3TB drives in RAID 5 (family home media use only). I was not going to use the 2.5 bay, so I was thinking of removing it and doing a DIY solution to mount 4 more drives, and I am open to, say, making a vertical mounting bracket for the additional 4 drives. I just want to know if the space is big enough to accommodate 4 HDDs either horizontally or vertically.

On your picture I see space that could possibly accommodate 4 more in the space on top of the processor heat sink where the cables are bunched up together / beside the fan. could you confirm?

I don’t have physical access to the server or I’d try it for you (I sent it to a friend’s house because he has a better internet connection). However, from memory, if you could get 4 drives to physically fit in there (which I doubt, the space is already pretty tight) I don’t see how it would fit with all the SATA cables, and even if you got past that the drives would be blocking airflow. Beneath the removable 2.5″ rack there’s the PSU, so you really don’t have much room to play with. If you want to go to 12 drives you may want to consider a 2U 12 bay Supermicro Chassis (you may need to replace the PSU and case fans because of the noise). I’m currently running off a 2U 6-bay Chassis (I didn’t need more than 6 drives, and I use a 4-bay 2.5 inch mobile rack for SSDs). It’s not as small as the Silverstone, but it fits nicely in an Ikea table. See: https://b3n.org/supermicro-2u-zfs-server-build/ But you can get a 12-bay chassis that’s the same size. I bought this 12-bay awhile back for $200 with shipping: http://www.ebay.com/itm/2U-Supermicro-826E16-R1200LPB-Server-H8DCL-6-2x-AMD-Opteron-FX-4170-Quad-Core-12-/131304964839?ssPageName=ADME:B:EOIBSA:US:3160 With the number of drives you’re running you might want to consider double-parity (RAID-6) instead of RAID-5; the chances of multiple drives failing with that many drives are pretty high. Maybe not the answer you’re looking for but hope that helps. Merry Christmas!

Merry Christmas as well. Thanks for the info. As long as it fits I’m good, as this would only be serving as a media server. I need more space than performance, so the drives won’t be as taxed as in a normal production environment. The space I meant was the one beside the motherboard, beside the fan at the back.

I think I’m all set.

Thanks again!

Hi Ben, I just bought this motherboard with 8 WD Red HDDs, a Samsung SSD, and a DS380 for a DIY NAS. I am trying to install ESXi 5.5 onto the SSD and use the other 8 HDDs for datastores. However I failed to get the HDDs that connect to the Marvell 9230 controller to work in ESXi. I followed Andreas’ guide, which gets the Marvell 9172 working. But the HDDs connected to the 9230 controller cannot be detected in ESXi under Configuration / Storage / Devices or Datastores. However the 9230 controller is detected under Configuration / Storage Adapters. All HDDs are properly detected in the BIOS. I don’t know what is wrong. Do you have any suggestions?

Hi, Tony. Because you’re seeing the controller but no drives can you verify that the controller is set to AHCI mode in the BIOS? Also, you might need to look at this firmware update to disable the 9230 hardware RAID controller: http://www.asrockrack.com/support/ipmi.asp#Marvell9230

Also, you’re probably not as bad as me but I have on a couple of occasions made the mistake of plugging the drives into the SAS ports instead of the SATA on the backplane.

Hope that helps.

Ben

Hi Ben,

Thanks for your reply. I double checked that I plugged the cable into the sata port. I already disabled 9230 HW raid as the official site. But I didn’t find any place in bios to set 9230 working in AHCI mode? There’s AHCI/IDE/Disable options for 9172 controller. But for 9230, there are only options of enable/disable.

Thanks again!!

In the prebios screen, I found the following information:

Marvell 88SE91XX Adapter – BIOS ver 1.0.0.0025

PCIe x1 5.0Gbps

Adapter 1 Disks Information: AHCI mode

….

Marvell 88SE92XX Adapter – BIOS ver 1.0.0.1021

PCIe x2 5.0Gbps [bus:dev] = [9:0]

Mode: PassThru AHCI

I am wondering whether it is because the 9230 works on PassThru AHCI mode or AHCI mode that leads to my problem.

But I didn’t find a way to change 92xx Adapter’s mode. And I am also not quite clear what’s the difference between AHCI and Passthru AHCI.

Well, I took my server offline for a few minutes and didn’t see any other settings in the BIOS other than what you described.

When you ran the vib install did you already have the controller enabled and in AHCI mode? I believe that installer will only install if it sees that controller so if you had it in RAID mode or it wasn’t enabled at the time it wouldn’t have worked.

If that’s not it, could you post the output of this command?

lspci -v | grep "Class 0106" -B 1

(you can enable SSH in ESXi under Configuration -> Security Profile -> Services)

This is what mine looks like:

~ # lspci -v | grep "Class 0106" -B 1

0000:00:17.0 SATA controller Mass storage controller: Intel Corporation Avoton AHCI Controller [vmhba0]

Class 0106: 8086:1f22

--

0000:00:18.0 SATA controller Mass storage controller: Intel Corporation Avoton AHCI Controller [vmhba1]

Class 0106: 8086:1f32

--

0000:04:00.0 SATA controller Mass storage controller: Marvell Technology Group Ltd. 88SE9172 SATA 6Gb/s Controller [vmhba2]

Class 0106: 1b4b:9172

--

0000:09:00.0 SATA controller Mass storage controller: Marvell Technology Group Ltd. 88SE9230 PCIe SATA 6Gb/s Controller [vmhba3]

Class 0106: 1b4b:9230
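If it helps when comparing two systems, the output above can be boiled down to one line per controller. This is just a sketch: the function name is made up, it assumes the two-line layout shown above (a device line ending in the adapter name, followed by a Class 0106 line), and I’d suggest running it against saved output on any machine with awk:

```shell
#!/bin/sh
# Hypothetical helper: read `lspci -v` output on stdin and print one line per
# SATA controller as "<vmhba name> <vendor:device>".
list_sata_controllers() {
    awk '
        # Device line ends with the adapter name in brackets, e.g. [vmhba3].
        /\[vmhba[0-9]+\]$/ {
            hba = substr($NF, 2, length($NF) - 2)   # strip the [ and ]
        }
        # The following "Class 0106" line carries the PCI vendor:device ID.
        /Class 0106:/ { print hba, $NF }
    '
}

# Example using two of the lines from the output above:
list_sata_controllers <<'EOF'
0000:09:00.0 SATA controller Mass storage controller: Marvell Technology Group Ltd. 88SE9230 PCIe SATA 6Gb/s Controller [vmhba3]
    Class 0106: 1b4b:9230
EOF
```

The vendor ID 1b4b in the second column is what marks the Marvell controllers in this output, versus 8086 for the Intel ones.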

Hi, Ben,

Thanks for your reply.

It’s almost same as yours:

~ # lspci -v | grep "Class 0106" -B 1

0000:00:17.0 SATA controller Mass storage controller: Intel Corporation Avoton AHCI Controller [vmhba0]

Class 0106: 8086:1f22

--

0000:00:18.0 SATA controller Mass storage controller: Intel Corporation Avoton AHCI Controller [vmhba1]

Class 0106: 8086:1f32

--

0000:04:00.0 SATA controller Mass storage controller: Marvell Technology Group Ltd. 88SE9172 SATA 6Gb/s Controller [vmhba2]

Class 0106: 1b4b:9172

--

0000:09:00.0 SATA controller Mass storage controller: Marvell Technology Group Ltd. 88SE9230 PCIe SATA 6Gb/s Controller [vmhba3]

Class 0106: 1b4b:9230

~ #

In vsphere client

Configuration -> storage adapter, they are also properly displayed

but in Configuration -> storage -> datastore/device, one hdd that connects to the 9230 controller is missing.

I also tried changing hdds and slots to make sure it is not the hdd or cables’ problem. it is always the 9230 one is missing.

Is it just one HDD? I wonder if it’s just a bad SATA port?

Hi Ben, Thanks for your reply.

I have 8 HDD and one SSD, totally 9 HDs. I used up 2 intel SATA3 ports, 4 intel SATA2 ports and 2 9172 SATA3 ports. At least, there will be one connecting to the 9230. But this doesn’t work. I also tried more HDDs connecting to 9230 and empty 9172 or intel controller, and tried switch SATA ports, cable, and HDD. The results always are that the one or ones connecting to 9230 can not be detected. I don’t think it’s hardware issue since all 9 HDD can be detected in BIOS and WINPE. But just not working in ESXi.

I see now, I misunderstood, I thought you had said only one HDD wasn’t working out of multiple drives connected to the 9230, but I see what you’re saying now. From your lspci output it looks like you have the AHCI mode set correctly in BIOS. I think you’re right that it’s a driver issue in VMware so let’s go back to that… can you confirm that you ran these commands AFTER the 9230 was enabled and in AHCI mode in the BIOS?

# esxcli software acceptance set --level=CommunitySupported

# esxcli network firewall ruleset set -e true -r httpClient

# esxcli software vib install -d http://vibsdepot.v-front.de -n sata-xahci

If you ran the commands before putting the 9230 into AHCI mode, or if it was disabled for some reason when you ran that then the drivers would not have installed. You may just need to run the vib install again. At least that’s what I’m hoping because I’m really stumped otherwise… |:-)

:(

I reinstalled the driver. shell response said the driver has upgraded from 1.27 to 1.28. However the problem is still the same.

I’m having the same issue. Prior to “upgrading” the controller firmware to disable RAID (resolving stability issues with multiple drives) any drives connected to the 9230 controller no longer appear. The controller is found by ESXi but sadly the drives no longer are. I’m also not seeing a specific AHCI mode option, but the controller ROM is reporting AHCI.

Interesting. I’m on ESXi 5.5.0, 2068190. Image profile is ESXi-5.5.0-20140902001-standard. Did you say you saw the drives before doing the firmware to disable the RAID? I’m trying to think if I did that–I may not have but I’m certainly running in AHCI mode. I had to send back two motherboards to get one that was stable (not just in ESXi, but had stability issues in FreeBSD). But the one I have now has been in service for quite awhile without issues. By chance are your drives over 2TB? I’ve only gone up to 2TB in mine but I assume there shouldn’t be a limitation there.

I have 7 4TB drives attached, 2 of which are on the 9230 controller. After dealing with the stability issues from this controller I removed ESXi and installed Linux directly on it, saw what was causing the issue and disabled RAID on that controller. After that was stable for a while I tried to reinstall ESXi, which is where I found the drives no longer appeared despite following the same methods. So I looked and the controller was definitely there but no drives would appear under it.

I bet that 9230 firmware update to disable RAID is what is causing the drives to not appear under VMware. I looked back through my notes and I do not think I installed that update. But I did have stability issues on the 9230 at first. I had to do two RMAs to get a stable motherboard that didn’t crash when I put a load on the 9230. Since several people are having trouble with the board I’m putting a warning up on the post.

Did you say that there isn’t an internal USB header on the ASRock C2750D4I? Because there certainly is, two USB 2.0 internal ports via an internal header. System can be configured via jumper on motherboard to use the internal header for either 1 or two ports (if 2, you lose functionality of one of the rear USB ports) and is set by default to use the internal header as a single port. I run FreeNAS off the internal USB with a short internal header to usb female adapter cable.

Ah, I was wrong. But you do have to buy a little cable thing to use it.

Quick question, do you run vm in this virtualised storage? Wouldn’t this hit I/O hard?

Hi, Eric. Yes, I do run VMs on the virtual storage. It does generate some heavy I/O, but as long as you have plenty of memory for ARC and, most importantly, an SSD for SLOG/ZIL to take those synchronous NFS writes, it does fine. For extremely heavy I/O loads I’d suggest going to mirrored vdevs (and lots of them) instead of RAID-Z, and also using a CPU that supports VT-d with an LSI controller. That probably gets close to bare metal speeds, maybe 1%-2% slower. I have benchmarked my setup against a “high performance” enterprise SAN (which shall remain nameless) that cost 20x as much as my build… and my build put the SAN performance to shame, by a factor of 10.