I don’t have room for a couple of rackmount servers anymore, so I was looking for ways to reduce the footprint and noise of my servers. I’ve been very happy with Supermicro hardware, so here’s my Supermicro Mini-ITX datacenter-in-a-box build.

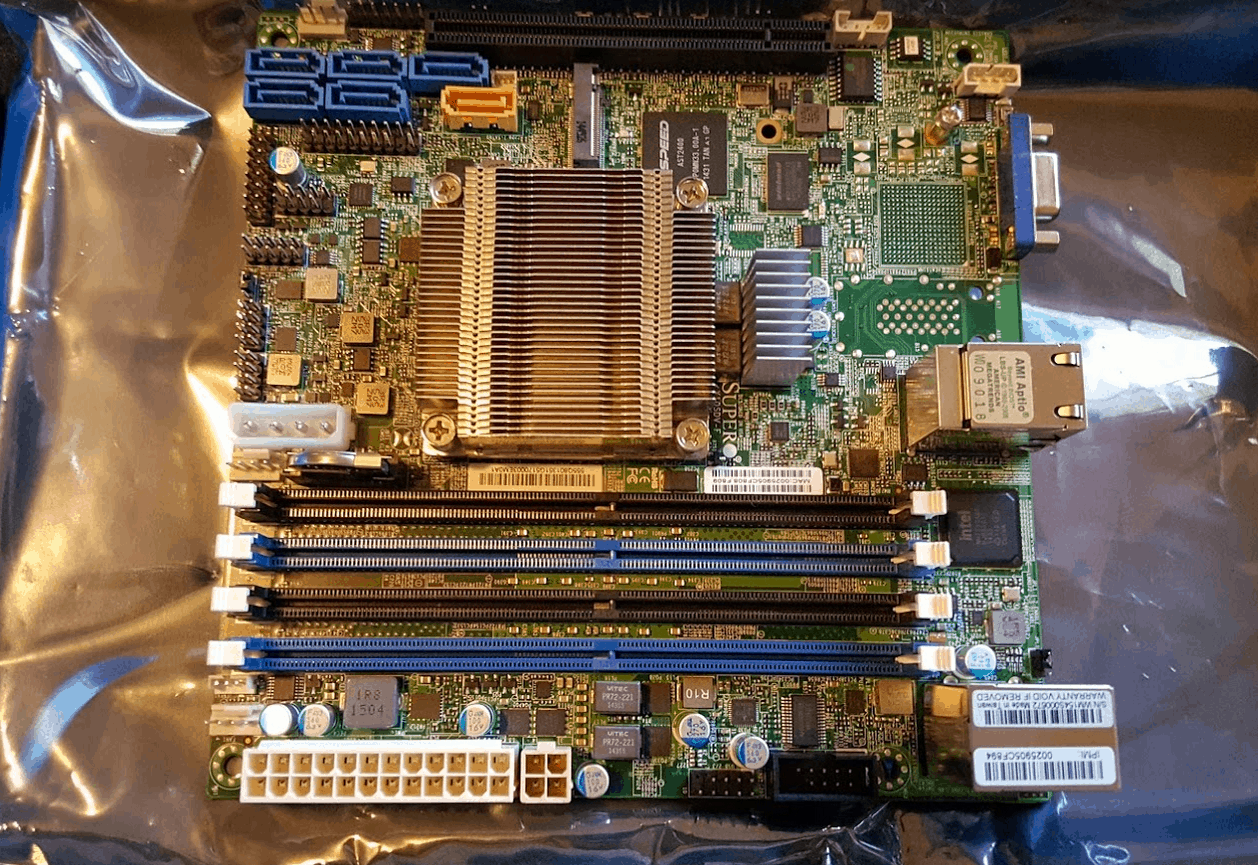

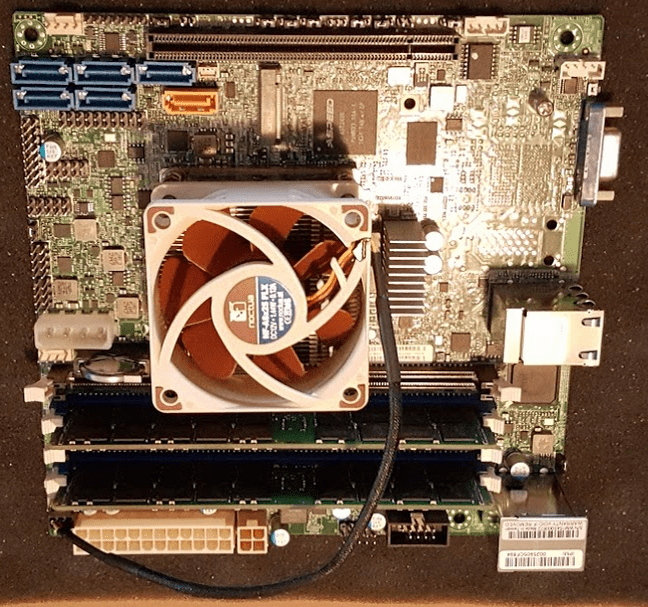

- Motherboard: Supermicro X10SDV-F

- CPU/Motherboard: Xeon D-1540 on the X10SDV, 8 cores (16 threads with Hyper-Threading)

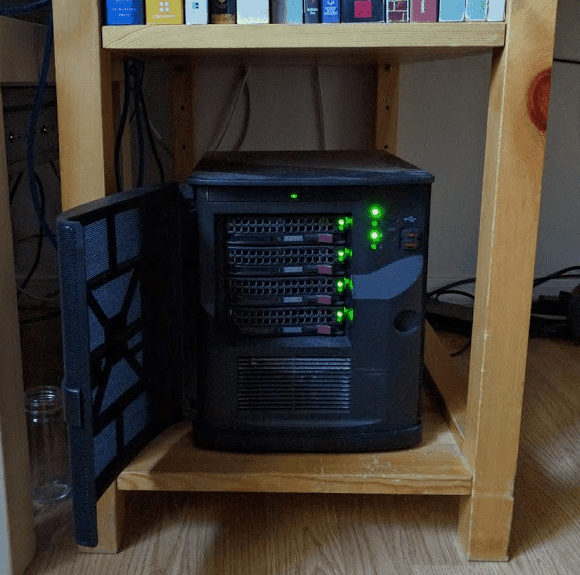

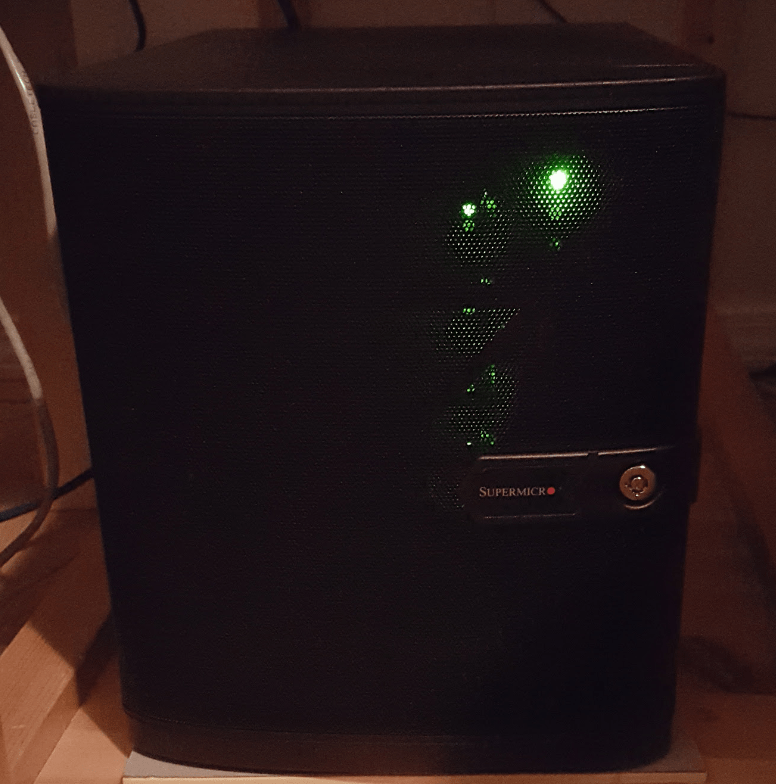

- Case: Supermicro CSE-721TQ-250B

- Hard Drives: 4 x 6TB in RAID-Z (see my hard drives for ZFS post)

- Memory: Crucial 32GB Kit (16GBx2) DDR4-2133 ECC

Supermicro X10SDV Motherboard

Unlike most processors, the Xeon D is an SoC (system on a chip) soldered directly onto the motherboard, so you buy the CPU and board together. Depending on your compute needs, the Mini-ITX Supermicro X10SDV boards give you a lot of pricing and power flexibility, with Xeon D SoCs ranging from a 2-core budget build to a ridiculous 16 cores rivaling high-end Xeon E5 class processors!

How many cores do you want? CPU/Motherboard Options

- X10SDV-2C-TLN2F – Xeon D-1508, 2 cores @ 2.2GHz, 25W TDP (7-year life)

- X10SDV-4C+-TLN4F – Xeon D-1518, 4 cores @ 2.2GHz, 35W TDP (7-year life)

- X10SDV-6C+-TLN4F – Xeon D-1528, 6 cores @ 1.9GHz, 35W TDP (7-year life)

- X10SDV-TLN4F – Xeon D-1540, 8 cores @ 2.0GHz, 45W TDP (global SKU)

- X10SDV-16C+-TLN4F – Xeon D-1587, 16 cores! @ 1.7GHz, 65W TDP (7-year life)
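To put rough numbers on the core count vs. clock speed trade-off, here is a small shell sketch that multiplies cores by base clock for each SKU in the list above and divides TDP by cores. The "aggregate GHz" figure is just a crude throughput proxy I’m using for illustration, not a benchmark (it ignores turbo, per-core performance, and memory bandwidth), and `sku_table` / `compare_skus` are hypothetical names.

```shell
#!/bin/sh
# SKU data copied from the list above:
# board model, CPU, cores, base clock (GHz), TDP (W)
sku_table() {
  cat <<'EOF'
X10SDV-2C-TLN2F D-1508 2 2.2 25
X10SDV-4C+-TLN4F D-1518 4 2.2 35
X10SDV-6C+-TLN4F D-1528 6 1.9 35
X10SDV-TLN4F D-1540 8 2.0 45
X10SDV-16C+-TLN4F D-1587 16 1.7 65
EOF
}

# Print cores x base clock (a rough throughput proxy) and TDP per core.
compare_skus() {
  sku_table | awk '{
    agg = $3 * $4
    printf "%-18s %-7s %5.1f GHz total  %4.1f W/core\n", $1, $2, agg, $5 / $3
  }'
}

compare_skus
```

The total climbs from 4.4GHz on the 2-core board to 27.2GHz on the 16-core board, while the base clock per core drops from 2.2GHz to 1.7GHz across the range.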

A few things to keep in mind when choosing a board. Some come with a fan (normally indicated by a + after the core count) and some don’t. I suggest getting one with a fan unless you’re pushing serious airflow through the heatsink, as in a 1U server. I got one without a fan and had to do a Noctua mod (below).

Many versions of this board are rated for a 7-year lifespan, which means they use components designed to last longer than most boards. Computers usually go obsolete before they die anyway, but it’s nice to have that option if you’re looking for a permanent solution. A VMware / NAS server that will last you 7 years isn’t bad at all!

In the last five characters of the model name you’ll see two options, “TLN2F” and “TLN4F”, which refer to the number of network ports (N2 comes with 2 x gigabit ports, and N4 usually comes with 2 x gigabit plus 2 x 10 gigabit ports). The 10GbE ports may come in handy for storage, and having four ports may be useful if you’re going to run a router VM such as pfSense.
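The naming conventions above can be captured in a small shell function. This is a heuristic sketch based only on the rules described in this post (a + after the core count usually means a fan, N2/N4 gives the port count), and there are exceptions: the X10SDV-TLN4F ships with a fan even though its name has no +. Always confirm against Supermicro’s spec sheet; `decode_x10sdv` is a hypothetical name.

```shell
#!/bin/sh
# Heuristic decoder for Supermicro X10SDV model names, following the
# conventions described above. Not authoritative: check the spec sheet.
decode_x10sdv() {
  model=$1

  # Core count, e.g. "4" from X10SDV-4C+-TLN4F; absent on some models.
  cores=$(printf '%s\n' "$model" | sed -n 's/^X10SDV-\([0-9][0-9]*\)C.*/\1/p')
  [ -n "$cores" ] || cores="not in name"

  # A "+" right after the core count normally indicates an active fan.
  case $model in
    *C+-*) fan="likely" ;;
    *)     fan="unknown" ;;
  esac

  # TLN2F / TLN4F suffix gives the number of network ports.
  case $model in
    *N2F) ports="2" ;;
    *N4F) ports="4 (usually 2x 1GbE + 2x 10GbE)" ;;
    *)    ports="unknown" ;;
  esac

  printf 'cores=%s fan=%s ports=%s\n' "$cores" "$fan" "$ports"
}

decode_x10sdv X10SDV-4C+-TLN4F
```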

I bought the first model, known simply as the “X10SDV-F”, which comes with 8 cores and 2 gigabit network ports. This board looks like it’s designed for high-density computing; it’s like cramming dual Xeon E5s into a Mini-ITX board. The Xeon D-1540 outperforms the Xeon E3-1230v3 in most tests, and the board supports up to 128GB of memory, two NICs (a model with two additional 10GbE ports, for four NICs total, is also available), IPMI, 6 SATA-3 ports, a PCI-E slot, and an M.2 slot.

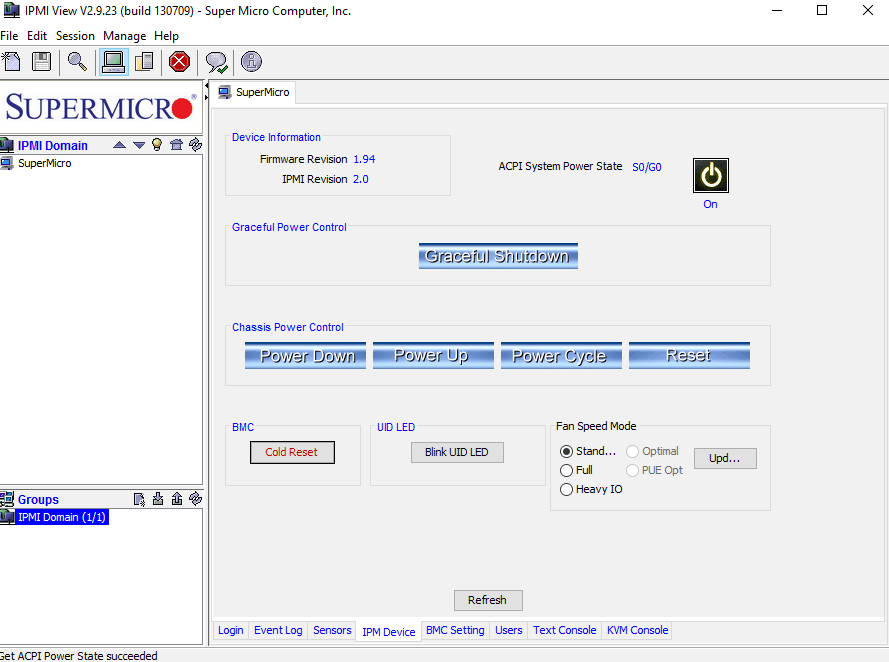

IPMI / KVM Over-IP / Out of Band Management

One of the great features of these motherboards is that you never need to plug in a keyboard, mouse, or monitor. In addition to the 2 or 4 normal Ethernet ports, there is one more port off to the side: the management port. Unlike HP iLO, this is a free feature on Supermicro motherboards. The IPMI interface gets its address via DHCP. You can download the free IPMIView software from Supermicro, or use the Android app, to scan your network for its IP address. Log in as ADMIN / ADMIN (be sure to change the password).
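If you prefer the command line to IPMIView, the open-source `ipmitool` utility can talk to the BMC over the network. Here’s a minimal sketch for replacing the default password, assuming `ipmitool` is installed, the BMC address below (a placeholder) is whatever your DHCP server handed out, and the ADMIN account is user ID 2, which is typical on Supermicro boards but worth confirming with the `user list` output first. The function names are hypothetical.

```shell
#!/bin/sh
# Placeholder BMC address; replace with the IP found via IPMIView or the app.
BMC_HOST=${BMC_HOST:-192.168.1.50}

# Show the BMC's user table on channel 1 so you can confirm ADMIN's user ID.
bmc_list_users() {
  ipmitool -I lanplus -H "$BMC_HOST" -U ADMIN -P ADMIN user list 1
}

# Replace the factory ADMIN/ADMIN password.
# Assumption: ADMIN is user ID 2 (check bmc_list_users first).
bmc_set_admin_password() {
  new_password=$1
  ipmitool -I lanplus -H "$BMC_HOST" -U ADMIN -P ADMIN \
    user set password 2 "$new_password"
}
```

Once the password is changed, it’s better to export it in `IPMI_PASSWORD` and pass `-E` instead of `-P`, so it doesn’t show up in the process list.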

You can reset or power off the server, and even if the power is off you can power it on remotely.
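The same remote power control can be scripted. A minimal sketch with `ipmitool`, assuming the BMC address and user below (placeholders) and that the password is exported in the `IPMI_PASSWORD` environment variable, which `ipmitool -E` reads so the password stays out of the process list. `bmc_power` is a hypothetical helper.

```shell
#!/bin/sh
BMC_HOST=${BMC_HOST:-192.168.1.50}   # placeholder BMC address
BMC_USER=${BMC_USER:-ADMIN}

# Query or change chassis power through the BMC.
#   status - report whether the host is on
#   on/off - hard power on / hard power off
#   soft   - ask the OS to shut down via ACPI
#   reset  - hard reset
#   cycle  - power off, then on again
bmc_power() {
  case $1 in
    status|on|off|soft|reset|cycle) ;;
    *) echo "usage: bmc_power status|on|off|soft|reset|cycle" >&2; return 2 ;;
  esac
  ipmitool -I lanplus -H "$BMC_HOST" -U "$BMC_USER" -E chassis power "$1"
}
```

Export `IPMI_PASSWORD` first, then run `bmc_power status` to check the server, or `bmc_power on` to start it.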

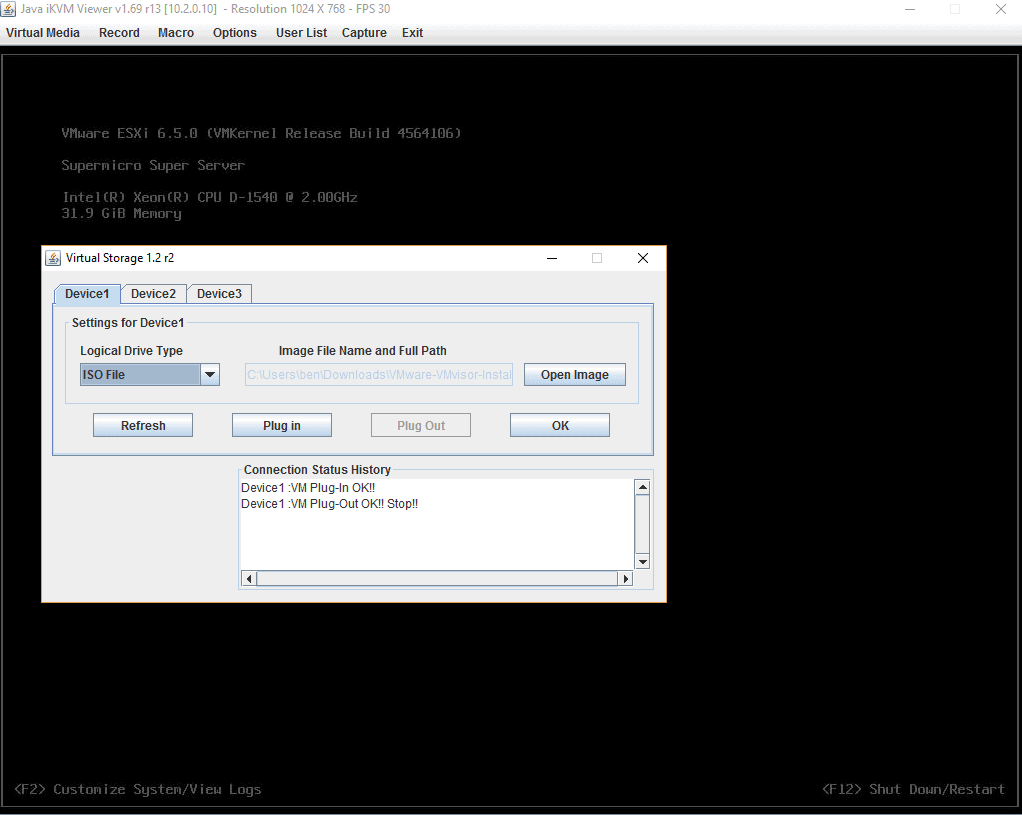

And of course you also get KVM over IP, which is so low level you can get into the BIOS and even load an ISO file from your workstation to boot off of over the network!

When I first saw IPMI I made sure all my new servers would have it. I hate messing around with keyboards, mice, and monitors, and I don’t have room for a hardware-based KVM solution. This out-of-band management port is the perfect answer, and the best part is being able to manage your server remotely. I have used it from Idaho to power on servers in California and load ISO files onto them.

I should note that I would not expose the IPMI port to the internet; make sure it’s behind a firewall and accessible only through a VPN.

Cooling Issue: Heatsink Not Enough

The first boot was fine, but it crashed after about 5 minutes while I was in BIOS setup, and after a few resets I couldn’t even get it to POST. I finally realized the CPU was getting too hot. Supermicro probably meant for this model to go in a 1U case with good airflow. The X10SDV-TLN4F costs a little extra, but it comes with a CPU fan in addition to the 10GbE network ports, so keep that in mind if you’re trying to decide between the two boards.

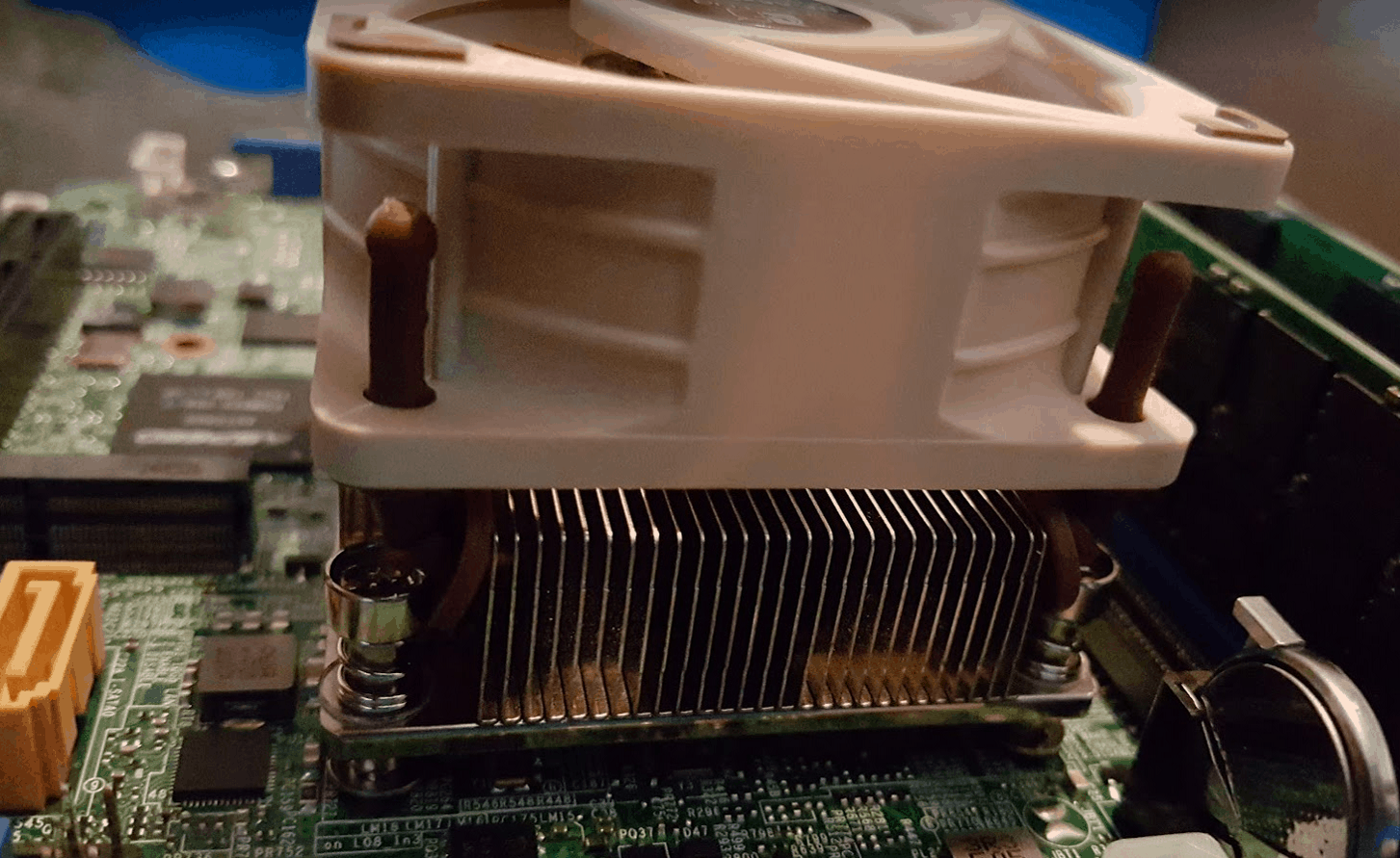

Noctua to the Rescue

I couldn’t find a CPU fan designed to fit this particular heatsink, so I bought a 60mm Noctua NF-A6x25.

UPDATE: Mikaelo commented that the fan is backwards in the pictures! The label should face down.

This is my first Noctua fan and I think it’s the nicest fan I’ve ever owned. It came packaged with screws, rubber anti-vibration mounts, an extension cable, a Molex power adapter, and two low-noise adapter cables that slow the fan down a bit. I can’t even hear the fan running at normal speed.

There isn’t really a good way to screw the fan and the heatsink to the motherboard together, but I took the four rubber mounts and tucked them under the heatsink screws. Surprisingly, this is a secure fit; it’s not ideal, but the fan isn’t going anywhere.

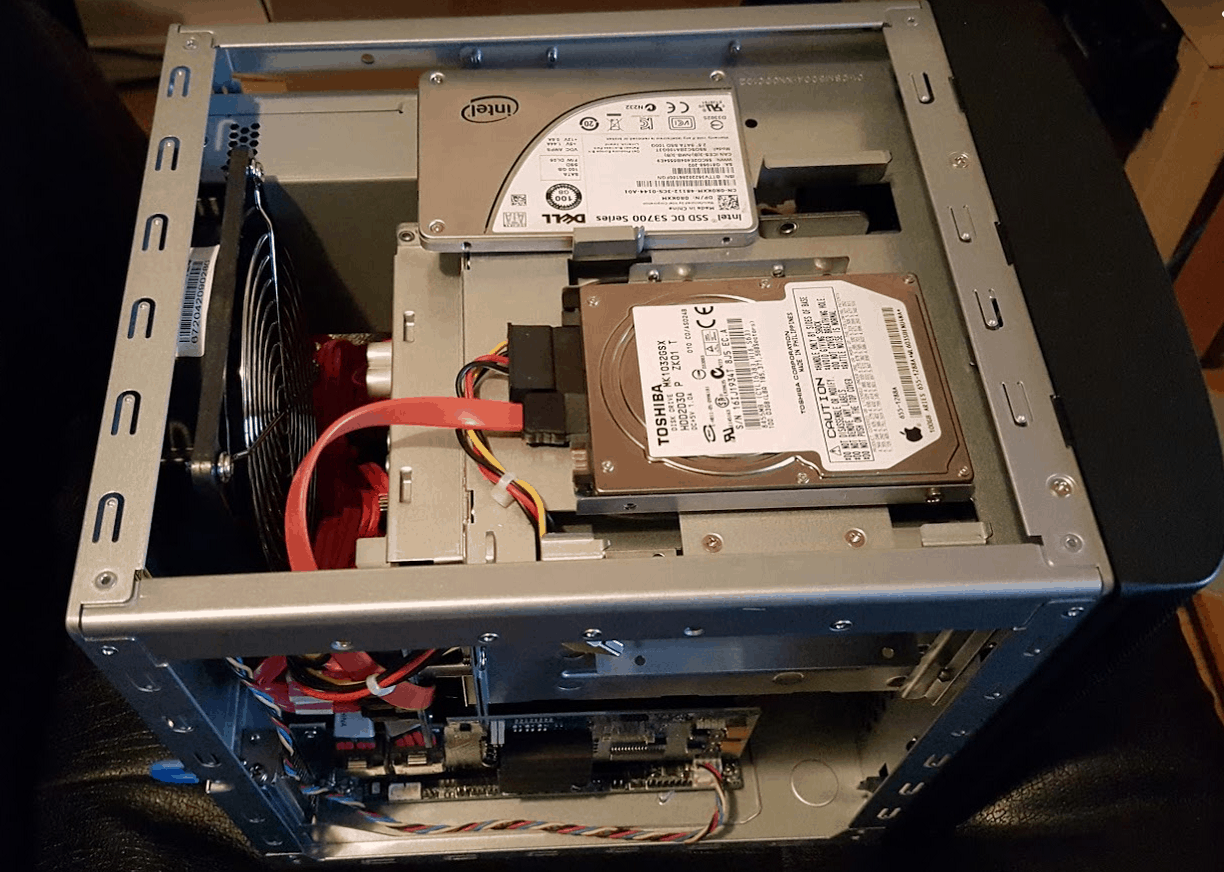

Supermicro CSE-721TQ-250B

This is what you would expect from Supermicro: a quality server-grade case. It comes with a 250 watt 80 Plus power supply and four 3.5″ hot-swap bays whose trays are the same as you would find on a 16-bay enterprise chassis. It also comes with labels numbered from 0 to 4, so you can choose to start labeling at 0 (the right way) or 1. It is designed to fit two fixed 2.5″ drives: one on the side of the HDD cage, and the other on top in place of an optical drive.

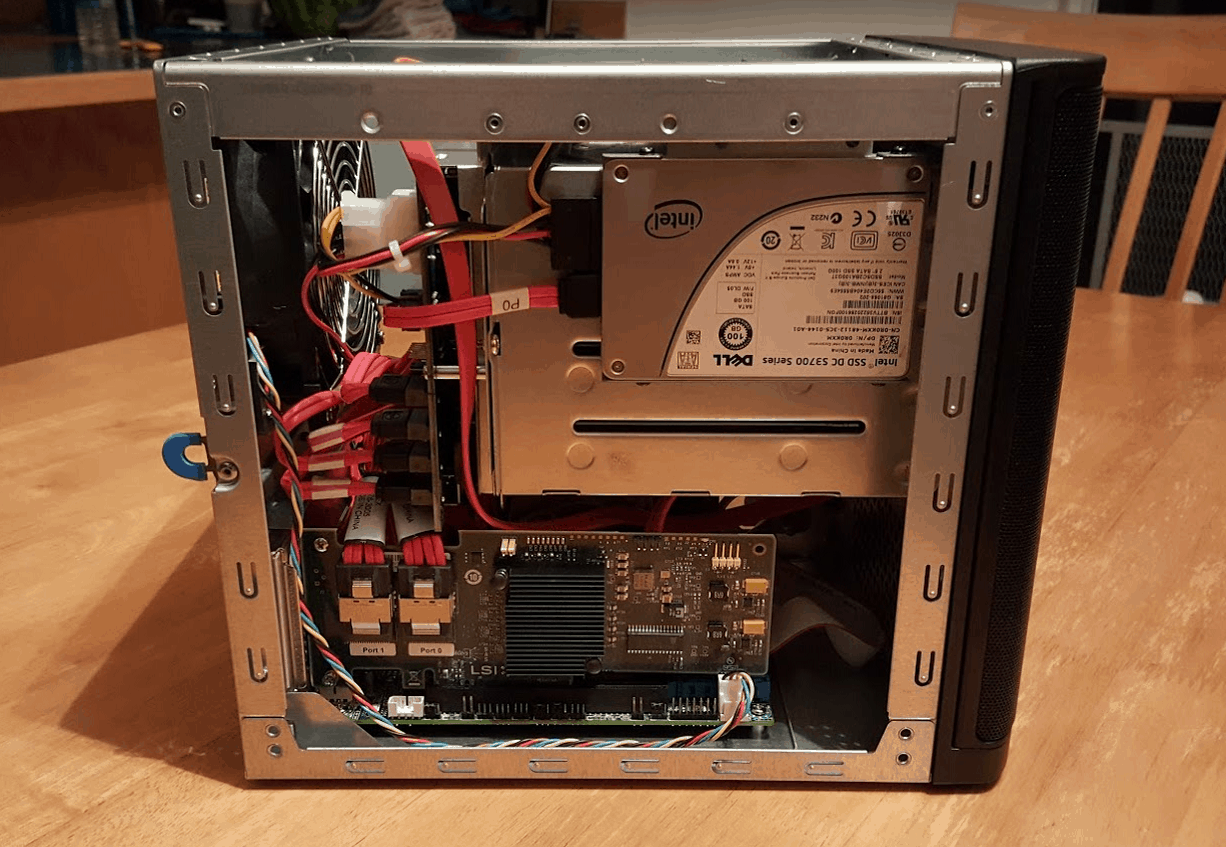

The case is roomy enough to work in; I had no trouble adding an IBM ServeRAID M1015 / LSI 9220-8i.

I took this shot just to note that if you could figure out a way to secure an extra drive, there is room for three drives, or perhaps two drives alongside an optical drive; you’d have to use a Y-splitter to power it. I should also note that you could use the M.2 slot to add another SSD.

The case is pretty quiet. I can’t hear it at all over my other computers running in the same room, so I’m not sure exactly how much noise it makes.

This case reminds me of the HP MicroServer Gen8 and is probably about the same size and quality, but I think it’s a little roomier, and with Supermicro, IPMI is free.

Compared to the SilverStone DS380, the Supermicro CS-721 is more compact. The DS380 has the advantage of holding more drives: it can fit eight 3.5″ or 2.5″ drives in hot-swap bays plus four more 2.5″ drives in a fixed cage. Between the two cases I much prefer the Supermicro CS-721, even with less drive capacity. The DS380 has vibration issues with all the drives populated, and it’s also not as easy to work with. The CS-721 looks and feels much higher quality.

Storage Capacity

I think the Xeon D platform offers great value with a wide range of power and pricing options. Prices on the Xeon D motherboards are reasonable considering the motherboard and CPU are combined; if you went with a Xeon E3 or E5 platform you’d pay about the same or more to buy them separately. You’ll be paying anywhere from $350 to $2500 depending on how many cores you want.

Core Count Recommendations

For a NAS-only box (FreeNAS, OmniOS + napp-it, NAS4Free, etc.) or a VMware all-in-one with FreeNAS and one or two light guest VMs, I’d go with a simple 2C CPU.

For a bhyve or VMware + ZFS all-in-one, I think the 4C is a great starter board. It will probably handle a lot more than most people need for a home server running a handful of VMs, including transcoding with a Plex or Emby server.

From there you can get 6C, 8C, 12C, or 16C. As you add cores the clock frequency goes down, so you don’t want to go overboard unless you really need those cores. Also consider that you may prefer two or three smaller boards, which allow failover, over one powerful server.

What Do I Run On My Server Under My Desk?

- VMware + FreeNAS All In One ZFS Server

- Emby Media Server

- pfSense HA Failover Cluster with my own DNS caching

- Automatic Ripping Machine

- CrashPlan Backup Server

- Mumble Server

- Home Assistant

- Gridcoin CPU Miner

- UniFi Controller

- Ansible Server

- Build Servers (for development)

Other Thoughts

That’s one fantastic little rig you’ve got there! I love that mini chassis; iXsystems has the FreeNAS Mini based on the same thing. Personally, I’ve gone back to full-sized chassis because they’re so cheap and quiet.

Ben,

Good read, thanks for sharing. I just bought a Supermicro 5028D-TN4T and it’s blazing fast; I’m running my full VM test lab and it hardly notices. I do have a question about IPMI. I’m used to the HP MicroServer route, where there’s really no configuration needed, just enter a license key, and I’m quite lost with the Supermicro IPMI and SD5 setup. Is that something you’d be open to helping with (consulting fee, of course)? I’m not sure how to contact you via the PGP contact on your site, so please let me know the best way to reach out, if that’s in your wheelhouse.

Thanks,

Paul

This looks like a really good build, but I have one question.

Given that the motherboard has 6 SATA3 connections and you don’t appear to need more than that (unless you are actually using three 2.5″ drives), why did you install a separate HBA?

Hi, Gareth. The only reason was that I was running a ZFS NAS server inside VMware, and the best way to do that is to pass an HBA controller through to the guest VM running ZFS so that it has direct access to the disks. A more efficient use of hardware is probably to run a type-2 hypervisor like KVM or bhyve on top of OmniOS or FreeBSD, so that the hypervisor has direct access to ZFS storage and there’s no need for an HBA.

Thanks, Ben

After I posted, I figured that may be the reason. I too had been thinking along the napp-it route but once I saw your articles about bhyve and the responses about Joyent, I’m starting to reconsider that decision.

Gareth

Cool, let me know what you end up trying.

I have the generic version of this case. It’s the Ablecom CS-M50.

UK price was about £105, which is cheaper than Supermicro :)

I did read somewhere that Ablecom supply it to Supermicro who rebrand it, but can’t find the link right now…

The younger brother of the president of Supermicro is the president of Ablecom.

http://www.ablecom.de/Press/news/2012/March/19-TheWorseTheEconomy

Worth mentioning that the DS380B mentioned is a SilverStone DS380B, not a Supermicro DS380B.

Nice build, may I know what temperatures you get with the Noctua?

Hi, Lucien. I don’t have the system up and running at the moment, but I’ll let you know next time I’m doing some experiments on it.

Why didn’t you get the motherboard model with the fan? It’s only $10 more. Granted, if you bought the wrong model, you could’ve ordered the OEM fan from Supermicro.

Good question. I didn’t realize the airflow from the case fan wouldn’t be enough, and at the time I bought it the model with the fan wasn’t available yet–and I saw this listed for $780 at a time when it was hard to even find them in stock for any price. I’m happy with what I got for the price, but unless you’re putting this in a server chassis it’s better to have the one with the fan.

I didn’t realize you could buy the OEM fan from Supermicro. Do you by chance know the part number?

I don’t have the part number. I bought the motherboard model with the fan. Maybe you can email Supermicro?

I am so infinitely f’n sad right now I could cry. I seriously just dropped a bunch converting a Lenovo TS140 into a 4U rack server with a bunch of extra parts I picked up. This makes me want to cry… Good job, dude. This is amazing. I want to do this now.

Hi, Chris. Keep your TS140 and buy a second server. I always have at least two servers–one I can experiment with and one that I consider my production environment. The TS140 isn’t a bad system, it’s very inexpensive for what you get so it’s an excellent value, and seems to be rated highly on Amazon. One way to look at it is you could get two or three TS140s very cheaply and experiment with high availability, distributed file-systems, etc. I’ve used a fair amount of hardware (although I have not ever had a Lenovo server) and Supermicro is my favorite so far because it’s inexpensive but server-grade hardware with features I want like KVM over IP.

I ran into similar issues with cooling b/c mine was 60-65 C under full load. I ended up having custom 70 mm x 70 mm x 40 mm heatsinks made that dropped the full-load temps to 48-53 C.

Are you using the tower case and still getting those temps with the passive heatsink? Do you have any pictures?

Sorry for the long delay, work consumed me this weekend. You can see pics on a similarly excellent article found here:

https://tinkertry.com/superserver-combined-cpu-and-m2-cooling-fan

This system is currently on a table, right next to an identical one with the stock HSF. Both of these will go into a 4U together at some point (hopefully soon).

I have 6 extra heatsinks if anybody is interested…

Have you ever tried to use the internal SATA ports with ESXi 6? Did they work under VMware with DirectPath?

The internal SATA ports work in ESXi as JBOD datastores without RAID; I don’t see an option to use DirectPath I/O with the onboard SATA controller.

Hi folks,

For those wanting to use DirectPath IO, here’s how:

https://forums.servethehome.com/index.php?threads/usb-thumb-drive-datastore-built-in-intel-lynx-point-ahci-controller-vt-d-successful-aio.8716/

I’ve tried this using FreeNAS on ESXi 6 and it works a treat: FreeNAS gets direct access to the disks.

That is fantastic info! Thanks for sharing that Rowland.

THANK YOU for the hint on the Noctua. I realised pretty quickly that cooling was an issue but wasn’t sure what to do about it.

FYI you can also buy the SNK-C0057A4L, the fan + heatsink assembly that comes on some of the other Supermicro Xeon D motherboards. I’ve ordered one and can update here if anyone cares.

You’re welcome. Thanks for posting the part number for the official fan!

Hi, Lucien. I just saw this again and realize I didn’t respond. I ran it for 30 minutes of sustained max CPU load (maxing out 8 cores / 16GHz) and here are the temperatures:

- CPU: 84C

- System Temp: 36C

- Peripheral Temp: 42C

- Memory DIMMA: 47C

- Memory DIMMB: 49C

- FAN3: 1700 RPM

- FAN1: 3300 RPM

The ambient temperature in my office is probably around 20-21C

This seems to be normal per: https://communities.intel.com/thread/78926
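To collect readings like these without the web UI, the BMC’s sensors can be queried with `ipmitool sdr type Temperature`. This sketch assumes the pipe-separated output format that command prints (for example `CPU Temp | 01h | ok | 3.1 | 84 degrees C`); sensor names vary by board, so check yours first. It also assumes the BMC password is exported in `IPMI_PASSWORD` (read by `-E`). The 80C default in `cpu_temp_ok` is an arbitrary example, not a Supermicro or Intel threshold, and both function names are hypothetical.

```shell
#!/bin/sh
BMC_HOST=${BMC_HOST:-192.168.1.50}   # placeholder BMC address
BMC_USER=${BMC_USER:-ADMIN}          # password is read from IPMI_PASSWORD (-E)

# Print "sensor=value" for each temperature sensor that has a reading.
bmc_temps() {
  ipmitool -I lanplus -H "$BMC_HOST" -U "$BMC_USER" -E sdr type Temperature |
    awk -F'|' '/degrees C/ {
      name = $1
      sub(/[ \t]+$/, "", name)   # trim the padding after the sensor name
      split($5, v, " ")          # " 84 degrees C" -> v[1] = "84"
      print name "=" v[1]
    }'
}

# Succeed if "CPU Temp" is at or below the limit in C (default 80, an
# arbitrary example threshold); fail otherwise or if there is no reading.
cpu_temp_ok() {
  limit=${1:-80}
  t=$(bmc_temps | awk -F= '$1 == "CPU Temp" { print $2 }')
  [ -n "$t" ] && [ "$t" -le "$limit" ]
}
```

Sensors that report “No Reading” are skipped, so only populated sensors show up in the output.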

I’d be curious to know how this compares to the stock CPU fan.

Great tip about the Noctua fan! Haven’t ordered yet, but plan to. Going to be doing some swapping around of boards. Started out with a 5028D system with the X10SDV-TLN4F (original Xeon D-1540) in that CSE-721TQ-250B and so far all is great. Doing an all-in-one with ESXi 6.0.0 (U2), some nested ESXi instances + VCSA, and a NexentaStor 4.0.4 CE for iSCSI & NFS for labbing vSphere.

Want to separate the IP storage from the rest, though, and bought a CSE-504-203B and X10SDV-4C-TLN2F (Xeon D-1521). Right now, the new MB is in the 1RU case and running hottish (60C idle, haven’t pushed it yet). Have two 28x40mm PWM fans installed in the fan bracket at the front of the 1RU case. Question: is it best to orient them to pull cool air into the case, or to exhaust hot air out of the case, or does that depend on the orientation of the board with respect to rear I/O (as with my case) or front I/O? Either way, I’m planning to pick up one of these Noctua fans… hopefully there’s enough clearance in the 1RU form factor for it. But in the end my plan is to swap the D-1541 board into the 1RU and just have it serve the hypervisor role, and put the D-1521 board into the 4-bay case where it’ll just be a NAS/SAN that I can run and forget about.

Hello Ben

Nice post!

I am planning to build one with the 721TQ-250B and X10SDV-TLN4F. But after comparing the parts list of the 721TQ-250B + X10SDV-TLN4F with the 5028D-TN4T, I noticed some cables are missing.

* X10SDV-TLN4F: https://www.supermicro.com/products/motherboard/Xeon/D/X10SDV-TLN4F.cfm

* 721TQ-250B: https://www.supermicro.com/products/chassis/tower/721/SC721TQ-250B

* 5028D-TN4T: https://www.supermicro.com/products/system/midtower/5028/SYS-5028D-TN4T.cfm

Superserver 5028D-TN4T has some extra SATA S-S cables, and 1 CBL-0157L (8 pin to 8 pin ribbon SGPIO cable with tube , 40cm, PBF for SES-II).

SATA cables are easy to get. But I may have to buy CBL-0157L from abroad. Do you think it is a must? Do you have any problem with the cabling?

So, I am actually using an M1015 flashed to IT mode for the disks on mine. I can’t be 100% sure, but I just looked in my motherboard box and don’t see that cable in it, so you’ll likely need to purchase it separately if you need SES-II capability. I see that cable available at a lot of stores in the U.S., including Amazon and eBay; I’m guessing you’re in another country? I don’t have any issues not using it. With only four drives it shouldn’t be too hard to keep track of them. I use FreeNAS, so when I built the pool I inserted one drive at a time and labeled the drives slot 0 through 3 in the GUI (to match the numbers on the drive caddies), so it’s easy to know which one failed.

I like the Noctua mod. Might do something similar. Have you tried the OEM fan? What sort of temps do you get with the Noctua?

Hi, Mdahal. According to VMware, with a household ambient temp of 68F (might be more like 70F in my office) my CPU is currently 52C with 9% average load for the last hour. If I max out the CPU on all 8 cores for 30 minutes it will go to 84C which is still well below the max of 108C. I have not tried the OEM fan since I didn’t know you could purchase it separately at the time, but obviously if that’s an option it would probably be a better solution.

Hi Bryan

What was your trick for mounting the Noctua fan? I’ve found it difficult using your suggestion, and at one point the fan came loose while the NAS was on (luckily I was testing). How is the OEM fan?

Thanks!

Chris

Hi, Chris. Just what’s in the picture… I have another board that came with the OEM fan and it’s perfectly fine. Another way to mount the Noctua fan is this: https://www.servethehome.com/near-silent-powerhouse-making-a-quieter-microlab-platform/ It looks like he glued the rubber feet to the heatsink screws.

The fan is upside down. I cannot believe no one has mentioned that.

Ha, really!? So far it’s working great. Is the air supposed to be blowing into the CPU or away from the CPU?

Into, always. Look, for example, at Noctua’s CPU coolers, e.g. the Noctua NH-L9i.

I’m willing to bet your CPU temps would go down significantly. 80+C is very warm for such a low-power CPU.

Well, it’s definitely blowing away from the CPU. I’ll reverse it next time I open up the case and report how the temp changes. Thanks for noticing!

Hi, Mikaelo. I was running some very heavy CPU loads and noticed it getting up to 93C and remembered your comment. Today, I finally had a chance to power down and reverse the fan. It made a big difference, now at 65C under heavy CPU load and the fan is much quieter.

One suggestion I have regarding fan choice is to use a high static pressure fan. Noctua is at the lower end (though it is quiet), and a Fractal Design, San Ace, or Sunon would lower temperatures far more significantly. Here is some testing I did with a range of fans, fan ducts, and custom heatsink.

http://chemlinux.blogspot.com/2017/09/cooling-x10sdv-xeon-d-15401541.html

Nice comparison, that’s great information! I had never even heard of high static pressure fans. I’m guessing the main difference from a normal fan is that it creates more pressure, so it can force air through a heatsink better?

I suppose I should clarify, given my above comment was not overly precise. Some fans are labeled as high static pressure, but this is always a relative term. In an ideal world all of the fan manufacturers would give us a measured curve for each fan showing the static pressure on one axis and the volumetric flow rate on the other axis. This would allow us to compare CFM of different fans at the same static pressure, which is how the system will operate. Sunon has a nice overview of this here.

http://www.sunon.com/uFiles/file/03_products/07-Technology/004.pdf

Given we are not in an ideal world, most fans do not have a measured curve available. Some do not even have a static pressure listed, so making these sorts of decisions is much harder.

Hi,

Thanks for an excellent article. I found it while browsing for information on HP’s Microserver … glad I did though because it made me change my mind and go the DIY route.

I’m building a similar NAS but with only 8 GB of RAM. Given the projected use (streaming video content, data storage) it should more than suffice. Do you have any thoughts on using an SSD as ZIL/L2ARC device? I have a feeling that for the few users of my NAS, it’s probably not necessary.

Cheers!

Hi, Jernej, you’re welcome. L2ARC is not advised with that little memory; I’d say you’d want 32GB, probably even 64GB, before considering L2ARC. Putting the ZIL on an SSD is recommended and drastically improves synchronous write performance, but you can always try it without one and add one later if write performance is too slow. Also, you technically don’t need an SSD for the ZIL; you can stop a dataset from using the on-disk ZIL by running:

```shell
zfs set sync=disabled tank/dataset
```

What this does is keep the ZIL only in memory (normally it’s in memory + SSD or memory + disk), so writes are cached only in memory until they’re written to disk. The risk is that if you write data to your ZFS pool and then lose power or crash before it’s written to disk, you will lose that data. I can’t remember exactly, but I don’t think it would ever be more than the last 30 seconds of data. In theory, the worst case is that you’d boot back up after a power loss and your data would be in the state it was 30 seconds earlier. Because ZFS is a copy-on-write filesystem (it never overwrites data in place; even modifications are written to a new place on disk), it should be impossible to corrupt the filesystem even if you lose power in the middle of a write.

The other risk is that very few people run with sync=disabled, so who knows whether there are edge cases that cause data loss on the pool. That said, I have never heard of anyone losing more than a few seconds of data because sync was disabled.

For me, I have the SSDs so I just use them, but if I didn’t and all I was storing was files and movies I’d run with sync=disabled. If I was doing important business transactions I’d definitely get SSDs for ZIL. Either way, make sure you have backups. |:-)
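For reference, here are the ZFS commands around this trade-off, wrapped as small shell functions. They assume the pool `tank` and dataset `tank/dataset` from the command above, plus a spare SSD at `/dev/ada4`, which is a hypothetical FreeBSD/FreeNAS device name; substitute your own. `sync=standard` is the ZFS default, and adding a `log` vdev gives the pool a dedicated ZIL device (SLOG). The function names are hypothetical.

```shell
#!/bin/sh
POOL=tank
DATASET=tank/dataset
SLOG_DEV=/dev/ada4   # hypothetical SSD device name

# Print the current sync setting: standard, always, or disabled.
show_sync()    { zfs get -H -o value sync "$DATASET"; }

# Faster writes, at the risk of losing recent writes after a crash.
disable_sync() { zfs set sync=disabled "$DATASET"; }

# Return to the default behaviour (sync writes go through the ZIL).
restore_sync() { zfs set sync=standard "$DATASET"; }

# Put the ZIL on a dedicated SSD (SLOG) instead of the pool disks.
add_slog()     { zpool add "$POOL" log "$SLOG_DEV"; }
```

If the pool holds data you can’t afford to lose, consider mirroring the log device (`zpool add tank log mirror <ssd1> <ssd2>`) so a single SSD failure can’t take recent sync writes with it.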

Ben

Cool blog Ben, nice builds. I just wanted to make a small correction to the above statement. KVM and bhyve are type-1 hypervisors, along with Hyper-V, Xen, and ESXi. Type-2 hypervisors are VirtualBox, VMware Workstation, Fusion, Parallels, etc.

Hi Benjamin, thank you for this really nice write-up. Can you explain the rationale for using the M1015 controller? Isn’t the X10SDV capable of RAID 5? Thank you

Well, if you get the version with the fan, or if you fit an SNK-C0057A4L, you will see that it blows away from the CPU. If you install the mylar shroud MCP-310-00076-0B (which is standard issue in the 5028D-TN4T and others), the chassis fan will push air into the bottom of the CPU heatsink, and the CPU heatsink fan will pull it up into the “hot” area above the CPU. So the picture in the article is correct, but only if you install the shroud. By the way, that shroud will also help cool your other components, like the NVMe drive, the RAM, and especially that flaming hot 10Gb-T chip. Be aware that this chip is a weak point on these boards, especially on the higher core counts. See TinkerTry or ServeTheHome, I forget which one.