This is a guide to installing FreeNAS 9.10 under VMware ESXi and then using ZFS to share the storage back to VMware. It is roughly based on Napp-It’s All-In-One design, except that it uses FreeNAS instead of OmniOS.

This post has had over 160,000 visitors, and thousands of people have used this setup in their homelabs and small businesses. I should note that I myself would not run FreeNAS virtualized in a production environment, but many have done so successfully. If you run into any problems and ask for help on the FreeNAS forums, I have no doubt that Cyberjock will respond with “So, you want to lose all your data?” So, with that disclaimer aside, let’s get going:

This guide was originally written for FreeNAS 9.3; I’ve updated it for FreeNAS 9.10. Also, I believe the Avago LSI P20 firmware bugs have been fixed, and P20 has been around long enough to be considered stable, so I’ve removed my warning on using P20. I also added sections 7.1 (Resource reservations) and 16.1 (zpool layouts) and made some other minor updates.

1. Get proper hardware

Example 1: Supermicro 2U Build

SuperMicro X10SL7-F (which has a built in LSI2308 HBA).

Xeon E3-1240v3

ECC Memory

6 hotswap bays with 2TB HGST HDDs (I use RAID-Z2)

4 2.5″ hotswap bays. 2 Intel DC S3700’s for SLOG / ZIL, and 2 drives for installing FreeNAS (mirrored)

Example 2: Mini-ITX Datacenter in a Box Build

X10SDV-F (built-in Xeon D-1540 8-core Broadwell)

ECC Memory

IBM 1015 / LSI 9220-8i HBA

4 hotswap bays with 2TB HGST HDDs (I use RAID-Z)

2 Intel DC S3700’s. 1 for SLOG / ZIL, and one to boot ESXi and install FreeNAS to.

Hard drives. See info on my Hard Drives for ZFS post.

The LSI2308/M1015 has 8 ports. I like to do two DC S3700s for a striped SLOG device and then a RAID-Z2 of spinners on the other 6 slots. Also get one (preferably two, for a mirror) drives that you will plug into the SATA ports (not on the LSI controller) for the local ESXi datastore. I’m using DC S3700s because that’s what I have, but this doesn’t need to be fast storage; it’s just to put FreeNAS on.

2. Flash HBA to IT Firmware

As of FreeNAS 9.3.1 or greater you should be flashing to IT mode P20 (it looks like it’s P21 now, but that’s not available from every vendor yet).

I strongly suggest pulling all drives before flashing.

LSI 2308 IT firmware for Supermicro

Here’s instructions to flash the firmware: http://hardforum.com/showthread.php?t=1758318

Supermicro firmware: ftp://ftp.supermicro.com/Driver/SAS/LSI/2308/Firmware/IT/

For IBM M1015 / LSI Avago 9220-8i

Instructions for flashing firmware:

https://forums.freenas.org/index.php?threads/confirmation-please-lsi-9211-i8-flashing-to-p20.40373/

LSI / Avago Firmware: http://www.avagotech.com/products/server-storage/host-bus-adapters/sas-9211-8i#downloads

If you already have the card passed through to FreeNAS via VT-d (steps 6-8), you can actually flash the card from FreeNAS with the sas2flash utility using the steps below (in this example my card is already in IT mode, so I’m just upgrading it):

    [root@freenas] # cd /root/
    [root@freenas] # mkdir m1015
    [root@freenas] # cd m1015/
    [root@freenas] # wget http://docs.avagotech.com/docs-and-downloads/host-bus-adapters/host-bus-adapters-common-files/sas_sata_6g_p20/9211-8i_Package_P20_IR_IT_FW_BIOS_for_MSDOS_Windows.zip
    [root@freenas] # unzip 9211-8i_Package_P20_IR_IT_FW_BIOS_for_MSDOS_Windows.zip
    [root@freenas] # chmod +x /usr/local/sbin/sas2flash
    [root@freenas] # sas2flash -o -f Firmware/HBA_9211_8i_IT/2118it.bin -b sasbios_rel/mptsas2.rom
    LSI Corporation SAS2 Flash Utility
    Version 16.00.00.00 (2013.03.01)
    Copyright (c) 2008-2013 LSI Corporation. All rights reserved
    Advanced Mode Set
    Adapter Selected is a LSI SAS: SAS2008(B2)
    Executing Operation: Flash Firmware Image
    Firmware Image has a Valid Checksum.
    Firmware Version 20.00.07.00
    Firmware Image compatible with Controller.
    Valid NVDATA Image found.
    NVDATA Version 14.01.00.00
    Checking for a compatible NVData image...
    NVDATA Device ID and Chip Revision match verified.
    NVDATA Versions Compatible.
    Valid Initialization Image verified.
    Valid BootLoader Image verified.
    Beginning Firmware Download...
    Firmware Download Successful.
    Verifying Download...
    Firmware Flash Successful.
    Resetting Adapter...

(Wait a few minutes; at this point FreeNAS finally crashed. Power off FreeNAS, and then reboot VMware.)

Warning on P20 buggy firmware:

Some earlier versions of the P20 firmware were buggy, so make sure it’s version P20.00.04.00 or later. If you can’t get P20 in a version later than P20.00.04.00, then use P19 or P16.
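If you want to check the installed version against that P20.00.04.00 minimum from a FreeNAS shell, here is a minimal sketch. The helper names `fw_at_least` and `fw_from_list` are hypothetical. The `Firmware Version : ...` line format it parses from `sas2flash -list` is also an assumption, so look at the raw output first.

```sh
#!/bin/sh
# Hypothetical helpers; not part of FreeNAS or sas2flash.

# Pull the firmware version out of "sas2flash -list" output on stdin.
# Assumes a line shaped like "Firmware Version : 20.00.07.00".
fw_from_list() {
    awk -F: '/Firmware Version/ { v = $2; gsub(/[ \t]/, "", v); print v; exit }'
}

# Succeed if firmware version $1 is at least $2 (default 20.00.04.00).
# A leading "P" is ignored; parts are compared numerically ("07" is 7).
fw_at_least() {
    awk -v have="$1" -v want="${2:-20.00.04.00}" 'BEGIN {
        sub(/^P/, "", have); sub(/^P/, "", want)
        split(have, h, "."); split(want, w, ".")
        for (i = 1; i <= 4; i++) {
            if (h[i] + 0 > w[i] + 0) exit 0
            if (h[i] + 0 < w[i] + 0) exit 1
        }
        exit 0
    }'
}
```

Usage would be something like `fw_at_least "$(sas2flash -list | fw_from_list)" && echo "firmware OK"`.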

3. Optional: Over-provision ZIL / SLOG SSDs.

If you’re going to use an SSD for SLOG, you can over-provision it. You can boot into an Ubuntu LiveCD and use hdparm; instructions are here: https://www.thomas-krenn.com/en/wiki/SSD_Over-provisioning_using_hdparm You can also do this after VMware is installed by passing the LSI controller to an Ubuntu VM (FreeNAS doesn’t have hdparm). I usually over-provision down to 8GB.

Update 2016-08-10: But you may want to only go to 20GB, depending on your setup! One of my colleagues discovered that with 8GB of over-provisioning the SLOG wasn’t even maxing out a 10Gb network (remember, every VMware write to the datastore is a sync write, so it hits the ZIL no matter what) with 2 x 10Gb fiber lagged connections between VMware and FreeNAS. This was on an HGST 840z, so I’m not sure if the same holds true for the Intel DC S3700, and it wasn’t a virtualized setup. But I thought I’d mention it here.
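The arithmetic behind “over-provision down to 8GB” is just the number of logical sectors you leave visible to the OS. Here is a minimal sketch. It assumes 512-byte logical sectors and treats “GB” as GiB. The helper names are hypothetical, and the code only prints the hdparm command rather than running it. `/dev/sdX` stands in for your SSD, and the linked Thomas-Krenn article covers the full procedure.

```sh
#!/bin/sh
# Hypothetical helpers; they only print, never run, hdparm.

# Sectors to leave visible when over-provisioning to $1 GiB,
# assuming 512-byte logical sectors unless $2 says otherwise.
op_sectors() {
    sector=${2:-512}
    echo $(( $1 * 1024 * 1024 * 1024 / sector ))
}

# Print the hdparm command for device $1 and target size $2 GiB.
# The "p" prefix on -N makes the new max sector count permanent.
op_command() {
    echo "hdparm -Np$(op_sectors "$2") --yes-i-know-what-i-am-doing $1"
}
```

`op_command /dev/sdb 8` prints `hdparm -Np16777216 --yes-i-know-what-i-am-doing /dev/sdb`. Pass 20 instead of 8 for the larger size from the update.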

4. Install VMware ESXi 6

Once ESXi is installed, under Configuration, Storage, click Add Storage. Choose one (or two) of the local storage disks plugged into your SATA ports (do not add a disk on your LSI controller).

5. Create a Virtual Storage Network.

For this example my VMware management IP is 10.2.0.231, the VMware Storage Network IP is 10.55.0.2, and the FreeNAS Storage Network IP is 10.55.1.2.

Create a virtual storage network with jumbo frames enabled.

VMware, Configuration, Add Networking. Virtual Machine…

Create a standard switch (uncheck any physical adapters).

![Image [8]](https://b3n.org/wp-content/uploads/2015/03/Image-8.png)

![Image [11]](https://b3n.org/wp-content/uploads/2015/03/Image-11.png)

Add Networking again, VMKernel, VMKernel… Select vSwitch1 (which you just created in the previous step) and give it a network different from your main network. I use 10.55.0.0/16 for my storage, so you’d put 10.55.0.2 for the IP and 255.255.0.0 for the netmask.
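The two storage addresses in this guide (10.55.0.2 for VMware and 10.55.1.2 for FreeNAS) can only reach each other because of the /16 mask. To sanity-check a different addressing plan, here is a minimal POSIX sh sketch. `same_subnet` and `ip_to_int` are hypothetical helpers, and they assume plain dotted-quad IPv4 addresses without leading zeros.

```sh
#!/bin/sh
# Hypothetical helpers: IPv4 same-subnet check in POSIX sh.

# Convert a dotted quad (no leading zeros) to a 32-bit integer.
ip_to_int() {
    ip=$1
    o1=${ip%%.*}; ip=${ip#*.}
    o2=${ip%%.*}; ip=${ip#*.}
    o3=${ip%%.*}; o4=${ip#*.}
    echo $(( (o1 << 24) + (o2 << 16) + (o3 << 8) + o4 ))
}

# Succeed if $1 and $2 are in the same network under netmask $3.
same_subnet() {
    a=$(ip_to_int "$1"); b=$(ip_to_int "$2"); m=$(ip_to_int "$3")
    [ $(( a & m )) -eq $(( b & m )) ]
}
```

With the guide’s values, `same_subnet 10.55.0.2 10.55.1.2 255.255.0.0` succeeds. The same pair fails with 255.255.255.0, which is why the /16 matters.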

![Image [12]](https://b3n.org/wp-content/uploads/2015/03/Image-12.png)

Some people are having trouble with an MTU of 9000. I suggest leaving the MTU at 1500 and making sure everything works there before testing an MTU of 9000. Also, if you run into networking issues, look at disabling TCP segmentation offload (TSO); see the comments.

Under vSwitch1 go to Properties, select vSwitch, Edit, change the MTU to 9000. Answer yes to the no active NICs warning.

![Image [14]](https://b3n.org/wp-content/uploads/2015/03/Image-14.png)

![Image [15]](https://b3n.org/wp-content/uploads/2015/03/Image-15.png)

Then select the Storage Kernel port, edit, and set the MTU to 9000.

![Image [17]](https://b3n.org/wp-content/uploads/2015/03/Image-17.png)

![Image [18]](https://b3n.org/wp-content/uploads/2015/03/Image-18.png)

6. Configure the LSI 2308 for Passthrough (VT-d).

Configuration, Advanced Settings, Configure Passthrough.

![Image [19]](https://b3n.org/wp-content/uploads/2015/03/Image-19.png)

Mark the LSI2308 controller for passthrough.

![Image [20]](https://b3n.org/wp-content/uploads/2015/03/Image-20.png)

You must have VT-d enabled in the BIOS for this to work, so if it won’t let you for some reason, check your BIOS settings.

Reboot VMware.

7. Create the FreeNAS VM.

Download the FreeNAS ISO.

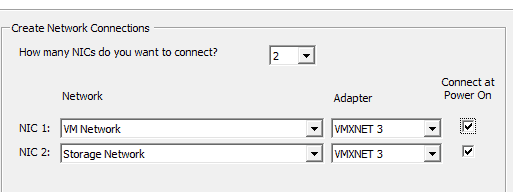

Create a new VM, choose Custom, put it on one of the drives on the SATA ports, Virtual Machine version 11, Guest OS type FreeBSD 64-bit, 1 socket and 2 cores. Try to give it at least 8GB of memory. Under Networking give it two adapters: assign the 1st NIC to the VM Network and the 2nd NIC to the Storage network. Set both to VMXNET3.

SCSI controller should be the default, LSI Logic Parallel.

Choose Edit the Virtual Machine before completion.

If you have a second local drive (not one that you’ll use for your zpool), you can add it here as a second boot drive for a mirror.

Before finishing the creation of the VM click Add, select PCI Devices, and choose the LSI 2308.

![Image [32]](https://b3n.org/wp-content/uploads/2015/03/Image-32.png)

And be sure to go into the CD/DVD drive settings and set it to boot off the FreeNAS ISO. Then finish creating the VM.

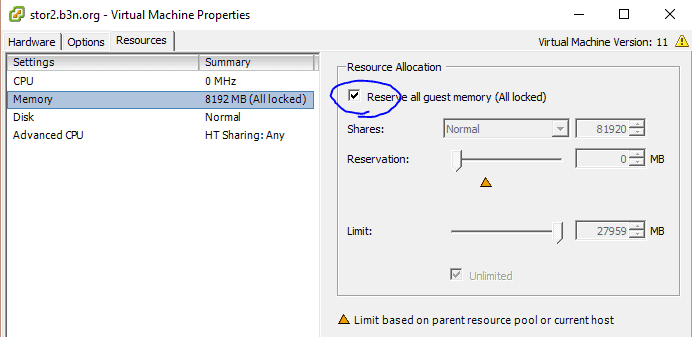

7.1 FreeNAS VM Resource allocation

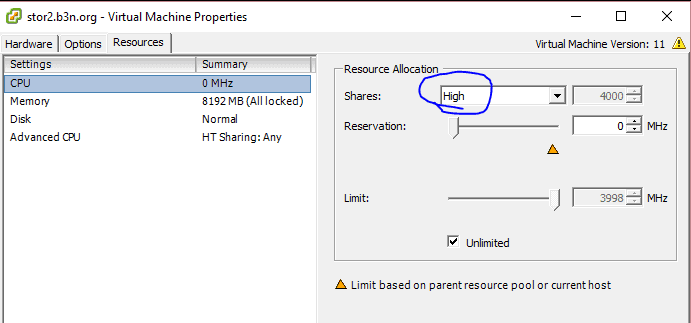

Also, since FreeNAS will be driving the storage for the rest of VMware, it’s a good idea to make sure it has a higher priority for CPU and Memory than other guests. Edit the virtual machine, under Resources set the CPU Shares to “High” to give FreeNAS a higher priority, then under Memory allocation lock the guest memory so that VMware doesn’t ever borrow from it for memory ballooning. You don’t want VMware to swap out ZFS’s ARC (memory read cache).

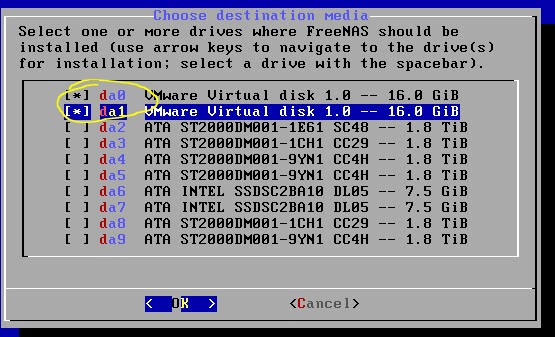

8. Install FreeNAS.

Boot the VM and install FreeNAS to your SATA drive (or to two of them to mirror the boot device).

After it’s finished installing, reboot.

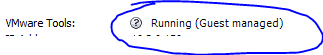

9. Install VMware Tools.

SKIP THIS STEP. As of FreeNAS 9.10.1, installing VMware Tools may no longer be necessary, so you can skip step 9 and go to 10. I’m just leaving this for historical purposes.

In VMware right-click the FreeNAS VM, Choose Guest, then Install/Upgrade VMware Tools. You’ll then choose interactive mode.

Mount the CD-ROM and copy the VMware install files to FreeNAS:

    # mkdir /mnt/cdrom
    # mount -t cd9660 /dev/iso9660/VMware\ Tools /mnt/cdrom/
    # cp /mnt/cdrom/vmware-freebsd-tools.tar.gz /root/
    # tar -zxmf vmware-freebsd-tools.tar.gz
    # cd vmware-tools-distrib/lib/modules/binary/FreeBSD9.0-amd64
    # cp vmxnet3.ko /boot/modules

Once it’s installed, navigate to the WebGUI. It starts out presenting a wizard; I usually set my language and timezone, then exit the rest of the wizard.

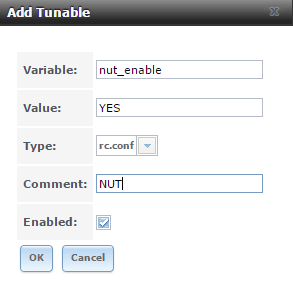

Under System, Tunables…

Add a Tunable. The Variable should be vmxnet3_load, the Type should be Loader, and the Value YES.

Reboot FreeNAS. On reboot you should notice that the VMXNET3 NICs now work (except the NIC on the storage network can’t find a DHCP server, but we’ll set it to static later), and VMware should now report that VMware Tools are installed.

If all looks well shutdown FreeNAS (you can now choose Shutdown Guest from VMware to safely power it off), remove the E1000 NIC and boot it back up (note that the IP address on the web gui will be different).

10. Update FreeNAS

Before doing anything else, let’s upgrade FreeNAS to the latest stable release under System, Update.

This is a great time to make some tea.

Once that’s done it should reboot. Then I always go back and check for updates again to make sure there’s nothing left.

11. SSL Certificate on the Management Interface (optional)

On my DHCP server I’ll give FreeNAS a static/reserved IP and set up an entry for it on my local DNS server. So for this example I’ll have a DNS entry on my internal network for stor1.b3n.org.

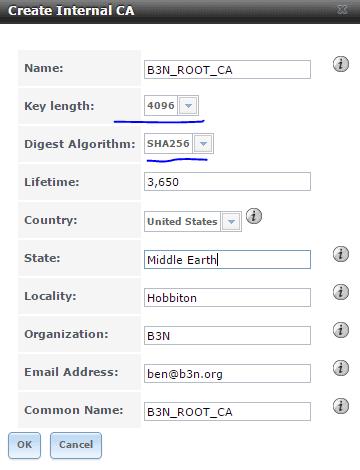

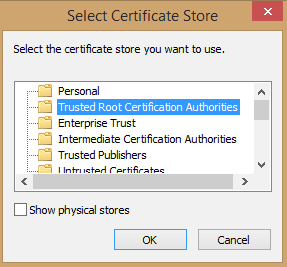

If you don’t have your own internal Certificate Authority you can create one right in FreeNAS:

System, CAs, Create internal CA. Increase the key length to 4096 and make sure the Digest Algorithm is set to SHA256.

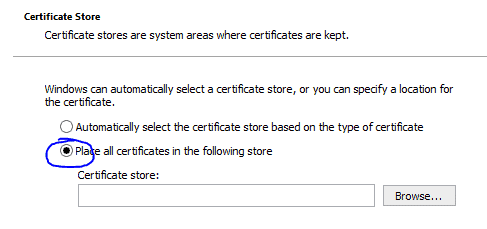

Click on the CA you just created, hit the Export Certificate button, and click on the exported file to install the Root certificate on your computer. You can install it either just for your profile or for the local machine (I usually do local machine), and you’ll want to make sure to store it in the Trusted Root Certification Authorities store.

A warning: you must keep this Root CA guarded. If a hacker were to access it, he could generate certificates to impersonate anyone (including your bank) and carry out a MITM attack.

Also Export the Private Key of the CA and store it some place safe.

Now create the certificate…

System, Certificates, Create Internal Certificate. Once again bump the key length to 4096. The important part here is the Common Name must match your DNS entry. If you are going to access FreeNAS via IP then you should put the IP address in the Common Name field.

System, Information. Set the hostname to your DNS name.

System, General. Change the protocol to HTTPS and select the certificate you created. Now you should be able to use HTTPS to access the FreeNAS WebGUI.

12. Setup Email Notifications

Account, Users, Root, Change Email, set to the email address you want to receive alerts (like if a drive fails or there’s an update available).

System, Advanced

Show console messages in the footer. Enable (I find it useful)

System Email…

Fill in your SMTP server info… and send a test email to make sure it works.

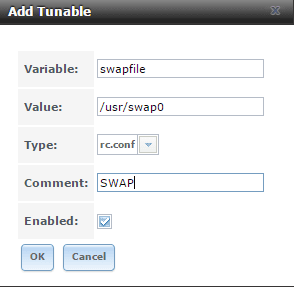

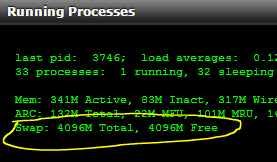

13. Setup a Proper Swap

By default, FreeNAS creates a swap partition on each drive and stripes the swap across them, which means that if any one drive fails there’s a chance your system will crash. We don’t want this.

System, Advanced…

“Swap size on each drive in GiB, affects new disks only. Setting this to 0 disables swap creation completely (STRONGLY DISCOURAGED).” Set this to 0.

Open the shell. This will create a 4GB swap file (based on https://www.freebsd.org/doc/handbook/adding-swap-space.html)

    dd if=/dev/zero of=/usr/swap0 bs=1m count=4096
    chmod 0600 /usr/swap0

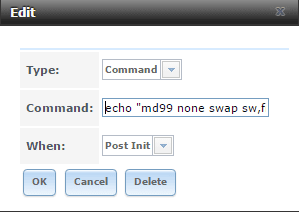

If you are on FreeNAS 9.10

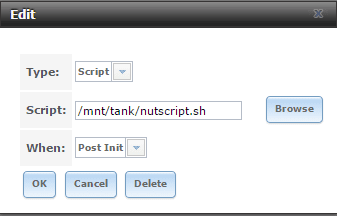

System, Tasks, Add Init/Shutdown Script, Type=Command. Command:

    echo "md99 none swap sw,file=/usr/swap0,late 0 0" >> /etc/fstab && swapon -aL

When = Post Init

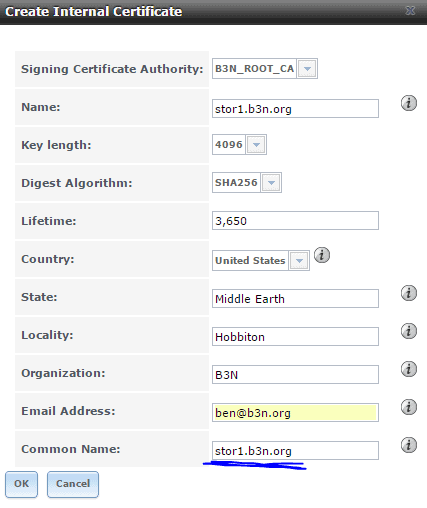

If you are on FreeNAS 9.3

System, Tunables, Add Tunable.

Variable=swapfile, Value=/usr/swap0, Type=rc.conf

Back to Both:

The next time you reboot, click Display System Processes in the left navigation pane and make sure the swap shows up. If it does, it’s working.

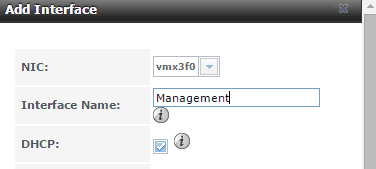
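The Post Init command above appends its fstab line unconditionally. If you would rather it not add a duplicate line when it runs more than once, here is a minimal sketch. `swap_count` and `add_swap_entry` are hypothetical helpers, and the fstab line is the same one used above.

```sh
#!/bin/sh
# Hypothetical helpers for the swap-file setup above.

# dd count for a swap file of $1 GiB when using bs=1m (1 MiB blocks).
swap_count() {
    echo $(( $1 * 1024 ))
}

# Append the md-backed swap line to fstab file $1 unless the exact line
# is already there. $2 is the swap file (default /usr/swap0).
add_swap_entry() {
    fstab=$1
    swapfile=${2:-/usr/swap0}
    line="md99 none swap sw,file=${swapfile},late 0 0"
    if ! grep -qxF "$line" "$fstab" 2>/dev/null; then
        printf '%s\n' "$line" >> "$fstab"
    fi
}
```

If you save these functions in a script (the path is up to you), the Post Init task could source it and run `add_swap_entry /etc/fstab /usr/swap0 && swapon -aL`. `swap_count 4` gives the 4096 used in the dd command.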

14. Configure FreeNAS Networking

Setup the Management Network (which you are currently using to connect to the WebGUI).

Network, Interfaces, Add Interface, choose the Management NIC, vmx3f0, and set to DHCP.

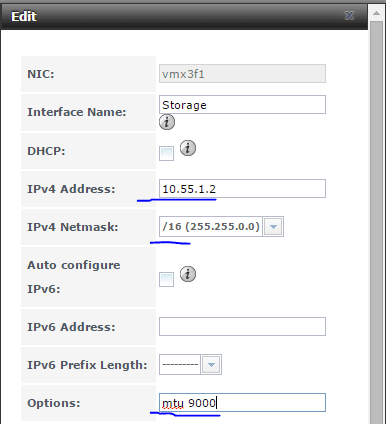

Setup the Storage Network

Add Interface, choose the Storage NIC, vmx3f1, and set it to 10.55.1.2 (I set up my VMware hosts on 10.55.0.x and ZFS servers on 10.55.1.x). Be sure to select /16 for the netmask, and set the MTU to 9000.

Open a shell and make sure you can ping the ESXi host at 10.55.0.2
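Once both ends are at MTU 9000, a plain ping doesn’t prove that jumbo frames work, because small packets fit either way. A common check is a ping with the don’t-fragment bit set and a payload that exactly fills the MTU. That payload is 9000 minus the 20-byte IPv4 header and the 8-byte ICMP header, or 8972 bytes. This sketch only prints the commands. `icmp_payload` and `jumbo_tests` are hypothetical helpers, and the addresses are the ones used in this guide.

```sh
#!/bin/sh
# ICMP payload that exactly fills an IPv4 packet of MTU $1:
# subtract the 20-byte IPv4 header and the 8-byte ICMP header.
icmp_payload() {
    echo $(( $1 - 20 - 8 ))
}

# Print don't-fragment pings for both ends of the storage network
# (addresses from this guide). Hypothetical helper; nothing is executed.
jumbo_tests() {
    size=$(icmp_payload "${1:-9000}")
    echo "ESXi shell:    vmkping -d -s $size 10.55.1.2"
    echo "FreeNAS shell: ping -D -s $size 10.55.0.2"
}
```

Suppose the 8972-byte pings fail but a `icmp_payload 1500` (1472-byte) ping succeeds. Then something in the path is still at 1500, which is where the advice above to get 1500 working first pays off.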

Reboot. Let’s make sure the networking and swap stick.

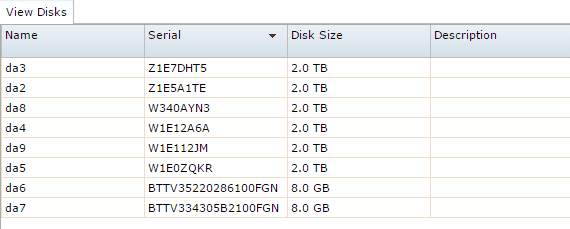

15. Hard Drive Identification Setup

Label Drives. FreeNAS is great at detecting bad drives, but it’s not so great at telling you which physical drive is having an issue. It will tell you the serial number and that’s about it. But how confident are you in knowing which drive fails? If FreeNAS tells you that disk da3 (by the way, all these da numbers can change randomly) is having an issue how do you know which drive to pull? Under Storage, View Disks, you can see the serial number, this still isn’t entirely helpful because chances are you can’t see the serial number without pulling a drive. So we need to map them to slot numbers or labels of some sort.

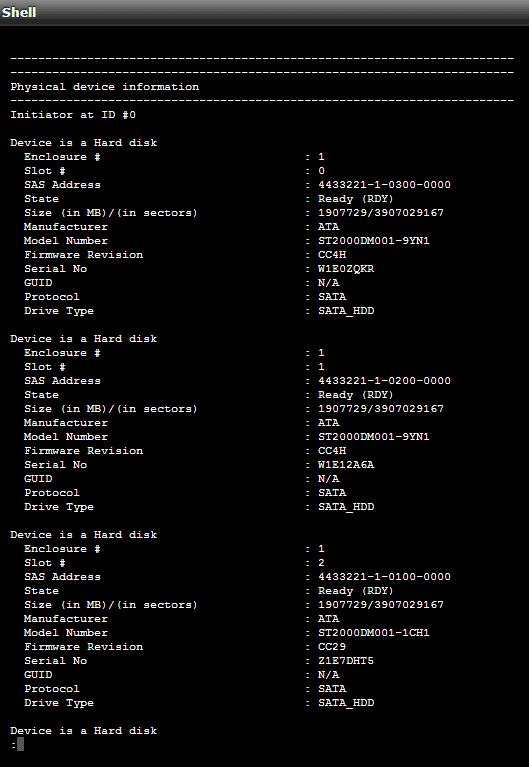

There are two ways you can deal with this. The first, and my preference, is sas2ircu. Assuming you connected the cables between the LSI 2308 and the backplane in the proper sequence, sas2ircu will tell you the slot number each drive is plugged into on the LSI controller. Also, if you’re using a backplane with an expander that supports SES2, it should tell you which slots the drives are in. Try running this command:

    # sas2ircu 0 display | less

You can see that it tells you the slot number and maps it to the serial number. If you are comfortable that you know which physical drive each slot number is in then you should be okay.

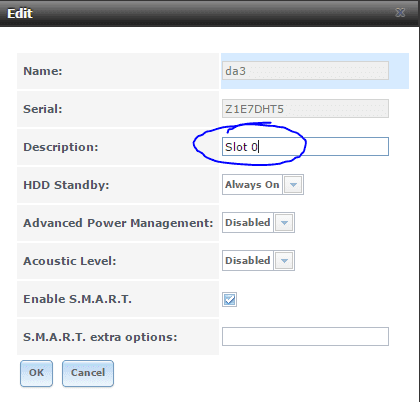

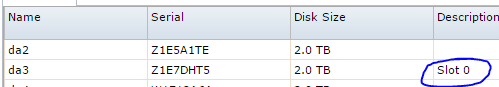
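With more than a handful of drives, it can help to boil the sas2ircu output down to one line per drive. Here is a minimal sketch. It assumes each device section contains `Enclosure #`, `Slot #`, and `Serial No` lines in `Label : value` form. `slot_serial_map` is a hypothetical helper, so check the field names against your own output.

```sh
#!/bin/sh
# Hypothetical helper: read "sas2ircu <n> display" output on stdin and
# print one "Enclosure E Slot S: SERIAL" line per drive. Assumes each
# device section has "Enclosure #", "Slot #" and "Serial No" lines in
# "Label : value" form, with the serial number coming last.
slot_serial_map() {
    awk -F: '
        /^[ \t]*Enclosure #/ { enc = $2;    gsub(/[ \t]/, "", enc) }
        /^[ \t]*Slot #/      { slot = $2;   gsub(/[ \t]/, "", slot) }
        /^[ \t]*Serial No/   { serial = $2; gsub(/[ \t]/, "", serial)
                               print "Enclosure " enc " Slot " slot ": " serial }
    '
}
```

`sas2ircu 0 display | slot_serial_map` then gives you a slot-to-serial list you can compare with Storage, View Disks.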

If not, the second method is to remove all the drives from the LSI controller, put in just the first drive, and label it Slot 0 in the GUI by clicking on the drive, Edit, and entering a Description.

Put in the next drive in Slot 1 and label it, then insert the next drive and label it Slot 2 and so on…

The Description will show up in FreeNAS and will survive reboots. It will also follow the drive even if you move it to a different slot, so it may be more appropriate to make your description match a label on the removable trays rather than the bay number.

It doesn’t matter if you label the drives or use sas2ircu, just make sure you’re confident that you can map a serial number to a physical drive before going forward.

16.1 Choose Pool Layout

For high performance, the best configuration is to maximize the number of VDEVs by creating mirrors (essentially RAID-10). That said, my 6-drive RAID-Z2 array with 2 DC S3700 SSDs for SLOG/ZIL performs very well with VMware in my environment. If you’re running heavy random I/O, mirrors are more important. But if you’re just running a handful of VMs, RAID-Z / RAID-Z2 will probably offer great performance as long as you have a good SSD for the SLOG device. I like to start double parity at 5- or 6-disk VDEVs, and triple parity at 9 disks. Here are some sample configurations:

Example zpool / vdev configurations

2 disks = 1 mirror

3 disks = RAID-Z

4 disks = RAID-Z or 2 mirrors

5 disks = RAID-Z, or RAID-Z2, or 2 mirrors with hot spare.

(Don’t configure 5 disks with 4 drives being in RAID-Z plus 1 hot spare–that’s just ridiculous. Make it a RAID-Z2 to begin with).

6 disks = RAID-Z2, or 3 mirrors

7 disks = RAID-Z2, or 3 mirrors plus hot spare

8 disks = RAID-Z2, or 4 mirrors

9 disks = RAID-Z3, or 4 mirrors plus hot spare

10 disks = RAID-Z3, 2 vdevs of 5 disk RAID-Z2 or 5 mirrors

11 disks = RAID-Z3, 2 vdevs of 5 disk RAID-Z2 plus hot spare or 5 mirrors with hot spare

12 disks = 2 vdevs of 6 disk RAID-Z2, or 5 mirrors with 2 hot spares

13 disks = 2 vdevs of 6 disk RAID-Z2 plus hot spare, or 6 mirrors with one hot spare

14 disks = 2 vdevs of 7 disk RAID-Z2 or 6 mirrors plus 2 hot spares

15 disks = 3 vdevs of 5 disk RAID-Z2 or 7 mirrors with 1 hot spare

16 disks = 3 vdevs of 5 disk RAID-Z2 plus hot spare or 7 mirrors with 2 hot spares

17 disks = 3 vdevs of 5 disk RAID-Z2 plus hot spares or 7 mirrors with 3 hot spares

18 disks = 2 vdevs of 9 disk RAID-Z3, 3 vdevs of 6 disk RAID-Z2 or 8 mirrors with 2 hot spares

19 disks = 2 vdevs of 9 disk RAID-Z3 plus hot spare, 3 vdevs of 6 disk RAID-Z2 plus hot spare, or 8 mirrors with 3 hot spares

20 disks = 2 vdevs of 10 disk RAID-Z3, 4 vdevs of 5 disk RAID-Z2, or 9 mirrors with 2 hot spares

Anyway, that gives you a rough idea. The more vdevs the better random performance. It’s always a balance between capacity, performance, and safety.
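To put rough numbers on the capacity side of that balance, here is a minimal sketch that multiplies vdevs by data disks per vdev by disk size. `data_disks` and `usable_tb` are hypothetical helpers. The result ignores ZFS metadata, RAID-Z padding, reserved space, and the TB/TiB difference, so real usable space will be lower.

```sh
#!/bin/sh
# Hypothetical helpers for rough pool capacity; ignores ZFS overhead.

# Data disks per vdev: a mirror keeps one disk's worth of data, and
# RAID-Z1/2/3 give up one, two, or three disks per vdev to parity.
data_disks() {
    layout=$1 width=$2
    case $layout in
        mirror)       echo 1 ;;
        raidz|raidz1) echo $(( width - 1 )) ;;
        raidz2)       echo $(( width - 2 )) ;;
        raidz3)       echo $(( width - 3 )) ;;
        *) echo "unknown layout: $layout" >&2; return 1 ;;
    esac
}

# usable_tb LAYOUT DISKS_PER_VDEV VDEVS DISK_TB
usable_tb() {
    d=$(data_disks "$1" "$2") || return 1
    echo $(( $3 * d * $4 ))
}
```

With 2TB drives, `usable_tb raidz2 6 1 2` gives 8 (one 6-disk RAID-Z2 vdev) and `usable_tb mirror 2 3 2` gives 6 (three mirrors). The mirrors trade 2TB of capacity for three vdevs instead of one, which is where the better random I/O comes from.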

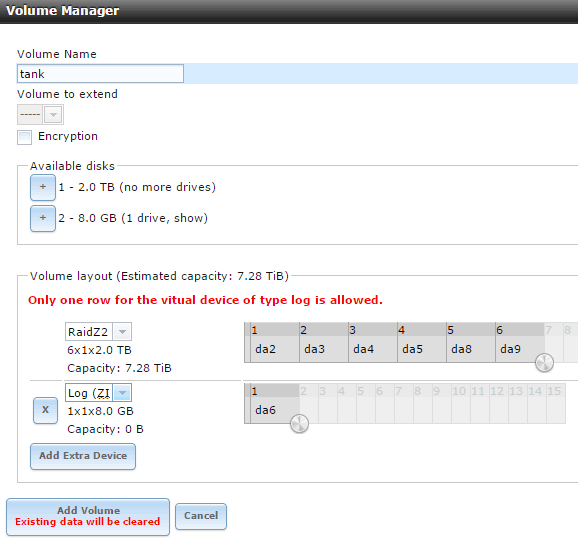

16.2 Create the Pool.

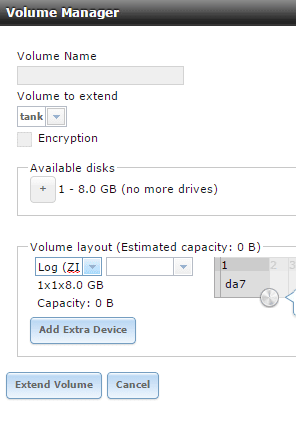

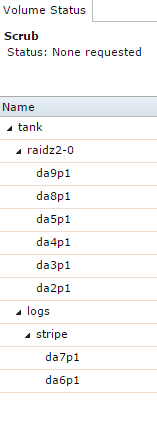

Storage, Volumes, Volume Manager.

Click the + next to your HDDs and add them to the pool as RAID-Z2.

Click the + next to the SSDs and add them to the pool. By default the SSDs will be on one row and two columns. This will create a mirror. If you want a stripe just add one Log device now and add the second one later. Make certain that you change the dropdown on the SSD to “Log (ZIL)” …it seems to lose this setting anytime you make any other changes so change that setting last. If you do not do this you will stripe the SSD with the HDDs and possibly create a situation where any one drive failure can result in data loss.

Back to Volume manager and add the second Log device…

On numerous occasions the Log has been changed to Stripe after I set it to Log, so double-check. Click on the top-level tank, then the Volume Status icon, and make sure the SSDs are listed in a separate logs section, not alongside the HDDs in the RAID-Z2 vdev.
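For reference, this is roughly the layout the Volume Manager builds, expressed as the equivalent `zpool` command. It is a sketch, not something to run on FreeNAS: the GUI also partitions each disk (including the swap partition) and uses gptid labels, and the `daN` names are hypothetical. The point is the `log` keyword. Without it, the SSDs would become data vdevs, which is exactly the stripe mistake described above. `pool_cmd` and `has_log_vdev` are hypothetical helpers, and the format of the `logs` line in `zpool status` output is an assumption.

```sh
#!/bin/sh
# Hypothetical helper: print the zpool command for one RAID-Z2 data vdev
# plus a separate log vdev. log_mode is "mirror" (GUI default) or "stripe".
pool_cmd() {
    pool=$1 data=$2 logs=$3 log_mode=${4:-mirror}
    if [ "$log_mode" = mirror ]; then
        echo "zpool create $pool raidz2 $data log mirror $logs"
    else
        echo "zpool create $pool raidz2 $data log $logs"
    fi
}

# Succeed if "zpool status" output on stdin has a "logs" section.
has_log_vdev() {
    grep -q '^[[:space:]]*logs[[:space:]]*$'
}
```

For example, `zpool status tank | has_log_vdev && echo OK` is a quick check that a logs section exists.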

17. Create an NFS Share for VMware

You can create either an NFS share or an iSCSI share (or both) for VMware. First, here’s how to set up an NFS share:

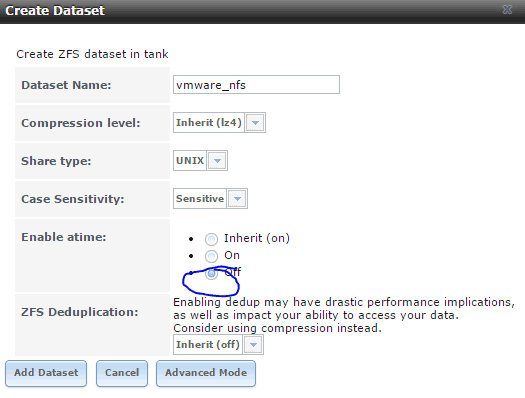

Storage, Volumes, Select the nested Tank, Create Data Set

Be sure to disable atime.

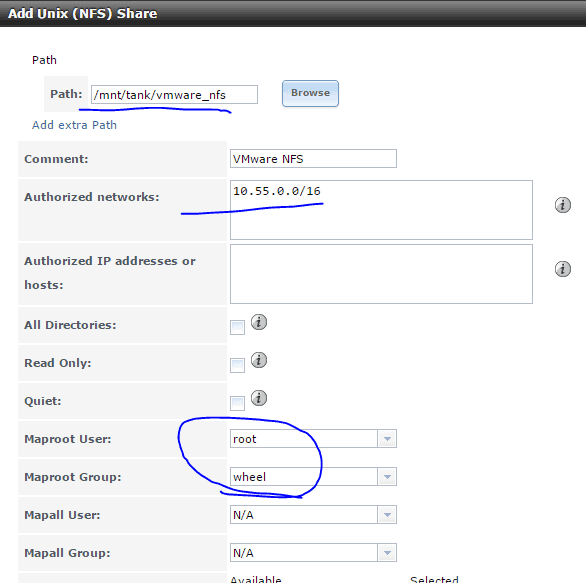

Sharing, NFS, Add Unix (NFS) Share. Add the vmware_nfs dataset, and grant access to the storage network, and map the root user to root.

Answer yes to enable the NFS service.

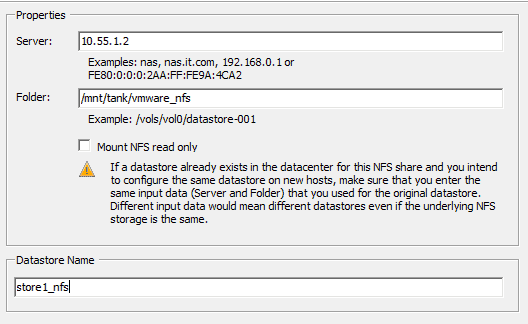

In VMware, go to Configuration, Add Storage, Network File System, and add the storage.

And there’s your storage!
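The same datastore can also be mounted from the ESXi shell with `esxcli storage nfs add`. Here is a minimal sketch that prints the command by default; set `DRY_RUN=0` to actually run it on the ESXi host. The host, the share path `/mnt/tank/vmware_nfs`, and the datastore name are assumptions based on the values used in this guide. `run` and `esxi_nfs_mount` are hypothetical helpers.

```sh
#!/bin/sh
# Hypothetical helper: print commands unless DRY_RUN=0 is set.
run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Mount the FreeNAS NFS export as an ESXi datastore (run on the ESXi host).
# Defaults: this guide's FreeNAS storage IP, an assumed dataset path,
# and an assumed datastore name.
esxi_nfs_mount() {
    host=${1:-10.55.1.2}
    share=${2:-/mnt/tank/vmware_nfs}
    name=${3:-vmware_nfs}
    run esxcli storage nfs add -H "$host" -s "$share" -v "$name"
}
```

Afterwards, `esxcli storage nfs list` should show the new datastore.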

18. Create an iSCSI share for VMware

WARNING: Note that at this time, based on some of the comments below with people having connection drop issues on iSCSI I suggest testing with heavy concurrent loads to make sure it’s stable. Watch dmesg and /var/log/messages on FreeNAS for iSCSI timeouts. Personally I use NFS. But here’s how to enable iSCSI:

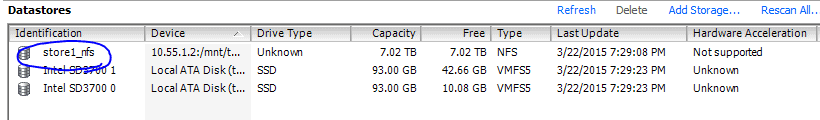

Storage, select the nested tank, Create Zvol. Be sure compression is set to lz4. Check Sparse Volume. Choose advanced mode and optionally change the default block size. I use a 64K block size based on some benchmarks I’ve done comparing 16K (the default), 64K, and 128K. 64K blocks didn’t really hurt random I/O but helped some on sequential performance, and also gave a better compression ratio. 128K blocks had the best compression ratio, but random I/O started to suffer, so I think 64K is a good middle ground. Various workloads will probably benefit from different block sizes.
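The zvol settings above map onto ordinary `zfs create` options: `-s` for a sparse volume, `-V` for its size, plus the `volblocksize` and `compression` properties. Here is a minimal sketch that prints the command by default. The 1T size and the `tank/vmware_iscsi` name are hypothetical, and on FreeNAS the GUI is still the way to create the zvol. `run` and `zvol_create` are hypothetical helpers.

```sh
#!/bin/sh
# Hypothetical helper: print commands unless DRY_RUN=0 is set.
run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Sparse zvol with the settings discussed above. Size and name are
# placeholders; volblocksize cannot be changed after creation.
zvol_create() {
    size=${1:-1T}
    name=${2:-tank/vmware_iscsi}
    run zfs create -s -V "$size" -o volblocksize=64K -o compression=lz4 "$name"
}
```

Because volblocksize can only be set when the zvol is created, it is worth settling on 64K (or whatever your own benchmarks favor) before you put VMs on it.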

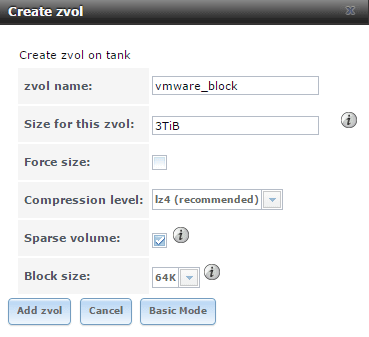

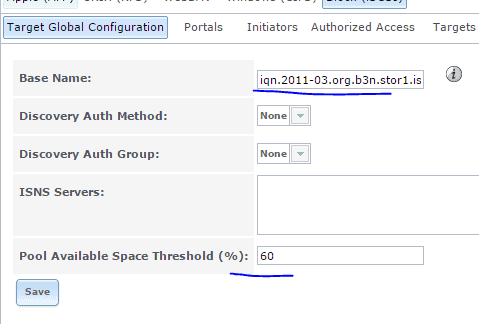

Sharing, Block (iSCSI), Target Global Configuration.

Set the base name to something sensible like iqn.2011-03.org.b3n.stor1.istgt. Set Pool Available Space Threshold to 60%.

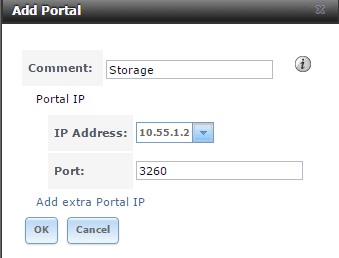

Portals tab… add a portal on the storage network.

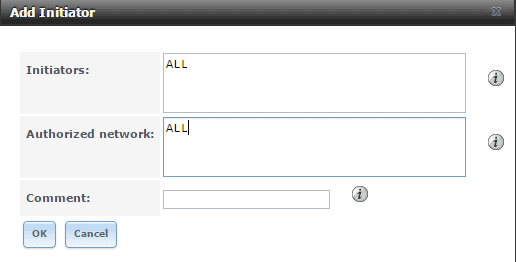

Initiator. Add Initiator.

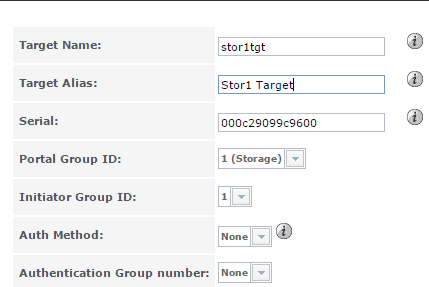

Targets. Add Target.

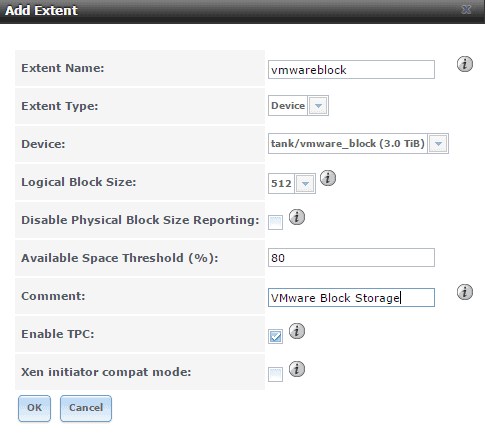

Extents. Add Extent.

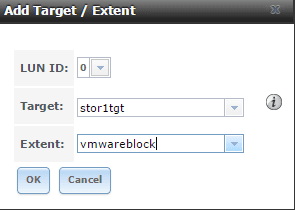

Associated Targets. Add Target / Extent.

Under Services enable iSCSI.

In VMware, Configuration, Storage Adapters, Add Adapter, iSCSI.

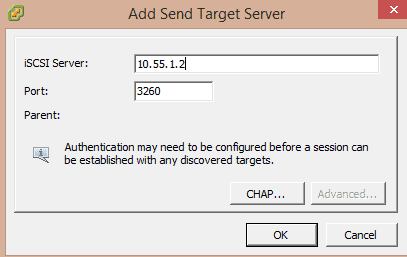

Select the iSCSI Software Adapter in the adapters list and choose properties. Dynamic discovery tab. Add…

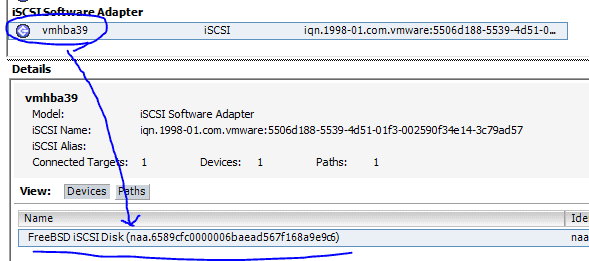

Close and re-scan the HBA / Adapter.

You should see your iSCSI block device appear…

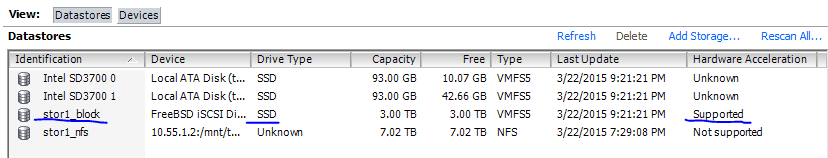

Configuration, Storage, Add Storage, Disk/LUN, and select the FreeBSD iSCSI Disk.
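If you prefer the ESXi shell, the adapter, discovery, and rescan steps above can also be done with `esxcli`. Here is a minimal sketch that prints the commands by default. `vmhba33` is only a placeholder for the software iSCSI adapter name (check `esxcli iscsi adapter list`), and 3260 is the default iSCSI port. `run` and `iscsi_setup` are hypothetical helpers.

```sh
#!/bin/sh
# Hypothetical helper: print commands unless DRY_RUN=0 is set.
run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Enable software iSCSI, add FreeNAS as a dynamic-discovery target, rescan.
iscsi_setup() {
    adapter=${1:-vmhba33}        # placeholder; see "esxcli iscsi adapter list"
    portal=${2:-10.55.1.2:3260}  # FreeNAS storage IP, default iSCSI port
    run esxcli iscsi software set --enabled=true
    run esxcli iscsi adapter discovery sendtarget add --adapter="$adapter" --address="$portal"
    run esxcli storage core adapter rescan --adapter="$adapter"
}
```

The last step is the same rescan you would otherwise do by hand in the vSphere client.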

19. Setup ZFS VMware-Snapshot coordination.

This will coordinate with VMware to take clean snapshots of the VMs whenever ZFS takes a snapshot of that dataset.

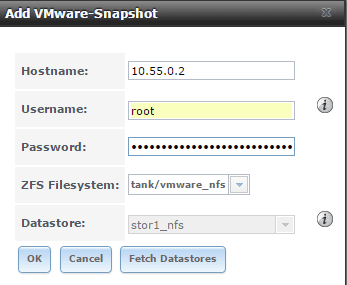

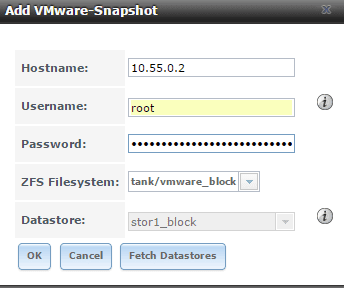

Storage, VMware-Snapshot, Add VMware-Snapshot. Map your ZFS dataset to the VMware datastore.

ZFS / VMware snapshots of NFS example.

ZFS / VMware snapshots of iSCSI example.

20. Periodic Snapshots

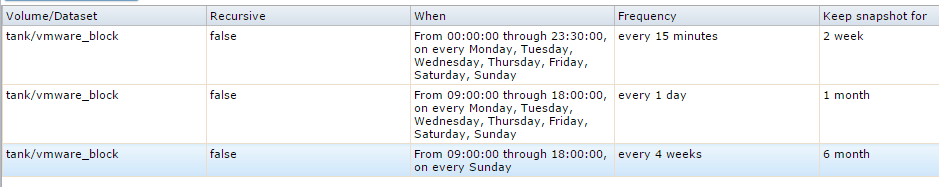

Add periodic snapshot jobs for your VMware storage under Storage, Periodic Snapshot Tasks. You can setup different snapshot jobs with different retention policies.

21. ZFS Replication

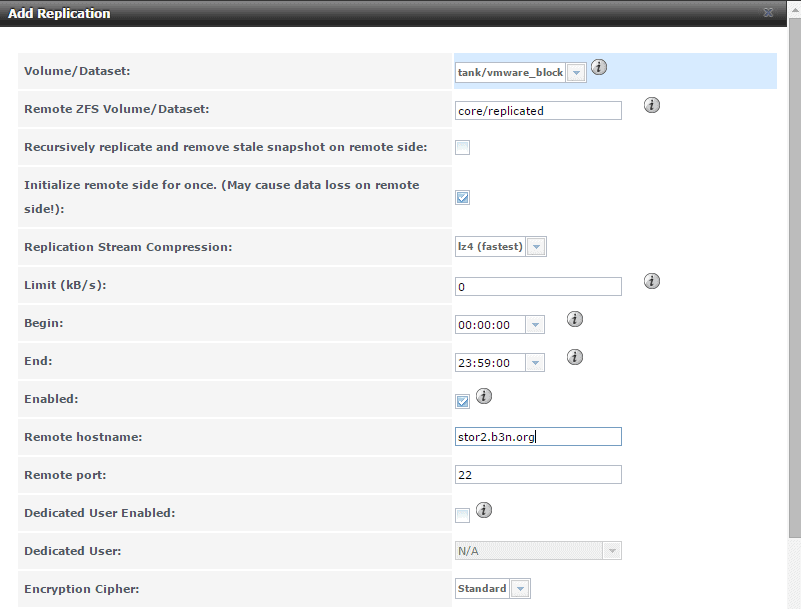

If you have a second FreeNAS server (say stor2.b3n.org), you can replicate the snapshots over to it. On stor1.b3n.org, go to Replication Tasks, View Public Key, and copy the key to the clipboard.

On the server you’re replicating to, stor2.b3n.org, go to Account, View Users, root, Modify User, and paste the public key into the SSH public Key field. Also create a dataset called “replicated”.

Back on stor1.b3n.org:

Add Replication. Do an SSH keyscan.

And repeat for any other datasets. Optionally you could also just replicate the entire pool with the recursive option.
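Under the hood, replication amounts to sending snapshots over ssh: a full stream the first time, then incremental ones. This sketch only prints a roughly equivalent pipeline, and the FreeNAS replication task handles all of this for you. The `replicate_cmd` helper, the snapshot names, and the `tank/replicated` target path on stor2 are assumptions. Note that `zfs receive -F` rolls the target back to its most recent snapshot before receiving.

```sh
#!/bin/sh
# Hypothetical helper: print a send/receive pipeline for DATASET@NEW,
# incremental from OLD if given. DEST and TARGET can be overridden.
replicate_cmd() {
    ds=$1 new=$2 old=$3
    dest=${DEST:-root@stor2.b3n.org}
    # tank/vmware_nfs -> tank/replicated/vmware_nfs on the receiving side
    target=${TARGET:-tank/replicated}/${ds#*/}
    if [ -z "$old" ]; then
        echo "zfs send $ds@$new | ssh $dest zfs receive -F $target"
    else
        echo "zfs send -i $ds@$old $ds@$new | ssh $dest zfs receive -F $target"
    fi
}
```

The first call (no OLD snapshot) sends everything. After that, passing the previous snapshot sends only the changes, which is what keeps periodic replication cheap.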

22. Automatic Shutdown on UPS Battery Failure (Work in Progress)

The goal is, on power loss, to shut down all the VMware guests, including FreeNAS, before the battery runs out. So far all I have gotten is the APC working with VMware. Edit the VM settings and add a USB controller, then add a USB device and select the UPS, in my case an APC Back-UPS ES 550G. Power FreeNAS back on.

On the shell type:

    dmesg | grep APC

    ugen0.4: <APC> at usbus0

This tells you where the APC device is. In my case it’s showing up on ugen0.4. I ended up having to grant world access to the UPS…

    chmod 777 /dev/ugen0.4

For some reason I could not get the GUI to connect to the UPS. I can select ugen0.4, but under the drivers dropdown I just have hyphens (------)… so I set it manually in /usr/local/etc/nut/ups.conf:

    [ups]
        driver = usbhid-ups
        port = /dev/ugen0.4
        desc = "APC 1"

However, this file gets overwritten on reboot, and also the rc.conf setting doesn’t seem to stick. I added this tunable to get the rc.conf setting…

And I created my ups.conf file in /mnt/tank/ups.conf. Then I created a script to stop the nut service, copy my config file and restart the nut service in /mnt/tank/nutscript.sh

    #!/bin/sh
    service nut stop
    cp /mnt/tank/ups.conf /usr/local/etc/nut/ups.conf
    service nut start

Then under Tasks, Init/Shutdown Scripts, I added a task to run the script post init.

Next step is to configure automatic shutdown of the VMware server and all guests on it… I have not done this yet.

There are a couple of approaches to take here. One is to install a NUT client on ESXi, and the other is to have FreeNAS ssh into VMware and tell it to shut down. I may update this section later if I ever get around to implementing it.

23. Backups

Before going live, make sure you have adequate backups! You can use ZFS replication with a fast link. For slow network connections, Rsync will work better (look under Tasks, Rsync Tasks), or use a cloud service like CrashPlan. Here’s a nice CrashPlan on FreeNAS Howto.

BACKUPS BEFORE PRODUCTION. I can’t stress this enough, don’t rely on ZFS’s redundancy alone, always have backups (one offsite, one onsite) in place before putting anything important on it.

Setup Complete… mostly.

Well, that’s all for now.

This is a really great post that gave me some good tips. There is great overlap between the hardware we use.

In an all-in-one like this, do you see any benefits to using vSphere 6 over 5.5? I use a combination of the desktop client and the command line to manage the VMs and thus run vmx-09 instead of 11.

Thanks, Soeren. There are a few minor advantages:

ESXi 6 allows you to create and modify the newer HW versions, however it doesn’t allow you to use the newer features of those versions. I can edit a VM I created with HW11. That’s a small step up from 5.5 at least which wouldn’t let you edit newer hardware versions at all. I pretty much do all my management from the desktop C# client, I haven’t needed any of the newer features that aren’t available there.

VMware Tools for FreeBSD work fine with FreeNAS 9.3; in ESXi 5.5 one had to compile or download the vmxnet3 driver that someone on the FreeNAS forums made.

NFS now supports 4.1; the main advantage here is if you want multi-pathing to your storage. I believe FreeBSD 10.1 has NFS 4.1, so FreeNAS 10 will likely support ESXi with NFS 4.1.

Other than that I don’t see any major changes. My critical production server I’ll keep on 5.5 for a while longer, but I’m running 6.0 on two servers without issues so far.

As I’m building a server based on the ASRock Avoton board, I figured I’d do some interesting stuff during my testing phase, so I used the ESXi RDM hack that’s floating around the internet to present the raw disks on the machine’s SATA ports to a NAS VM. Nothing important is on the machine currently, so nothing was lost and only my spare time was wasted. I also wanted to test for issues with the disk controller.

The TL;DR of this lash up was:

Debian ‘Wheezy’ wrote 2TB of /dev/zero to an ext4 formatted ‘RDM’ disk with no problem and did it 6 times, once to each of the disks I was using.

OmniOS + napp-it wrote about the same to a RAIDZ2 array made of 6x2TB ‘RDM’ disks (I decided its ACLs were too much of a pain for a 3-user home server).

FreeNAS… exploded quite spectacularly and managed to lock the host machine solid with disk errors after only 200GB of data had been passed to a recreated RAIDZ2 array.

After FreeNAS, the Debian experiment was performed again and all 6 disks passed with flying colours (as well as being given the thumbs up by both ESXi and the BIOS’s SMART check). It was also noted from inside the vSphere client that FreeNAS had consumed all but 2.5GB of the 14GB RAM allocated to it, whilst OmniOS consumed a grand total of 8GB; both VMs were given the same amount of RAM.

Conclusion: FreeNAS has some very serious and major issues. Whether these are inherent to FreeBSD or bugs in its ZFS implementation I can’t say, but of the three OSes tested it was the only one to experience issues.

Good testing, Sarah. It’s very interesting that FreeNAS crashed with RDMs while the other OSes did not. I concur with your results on FreeNAS eating up memory compared to OmniOS. OmniOS has also outperformed FreeNAS in most tests on the same environment in all my testing, which includes an HP Microserver N40L, HP Gen 8 Microserver, ASRock Avoton C2750, and X10SL7-F with Xeon E3-1245v3.

I still prefer OmniOS over FreeNAS for most deployments. FreeNAS is pretty good if you have the memory, and I would consider it reliable (except on RDMs apparently), but it isn’t robust like OmniOS is. Especially when you start to have problems like a ZIL failure, it’s much easier and faster to get things up and running again with OmniOS. I do think FreeNAS has improved a lot with the 9.3 series, and hopefully that trend will continue with 10. FreeNAS probably has a slight edge on iSCSI for VMware now with the VAAI integration, but I much prefer NFS for its simplicity.

I know exactly what you mean by the OmniOS ACLs being overkill, but the nice thing for businesses is that it integrates perfectly with Windows ACLs. What I do for my home network is set up one user account for the family to share.

Be very careful with RDM; it may work for a while, but you could run into data corruption even on OmniOS or Debian. It may be okay, but be sure to stress test it real good first. I think running on top of VMDKs is a little safer and doesn’t cost more than 1-2% performance (which the RDMs are probably costing you anyway). I’ve also run FreeNAS on the Avoton on top of VMDKs with no data corruption issues. YMMV.

I followed your instructions here for installing the VMware tools and pkg_add failed trying to load the compat6x-amd64 and perl packages. I checked manually, and it seems the directory it’s trying to download from — ftp://ftp.freebsd.org/pub/FreeBSD/ports/amd64/packages-9-stable — does not exist! Yikes! :-)

Hello,

First of all, thank you for your great article. When I install the two dependencies in FreeNAS 9.3, I get these errors:

    pkg_add -r compat6x-amd64

    Error: Unable to get ftp://ftp.freebsd.org/pub/FreeBSD/ports/amd64/packages-9-stable/Latest/compat6x-amd64.tbz: File unavailable

I found out that under the path ftp://ftp.freebsd.org/pub/FreeBSD/ports/amd64/ only the directory packages-8.4-release exists.

Do I need to run additional commands before installing with pkg_add?

Many thanks in advance

Looks like the FTP site is missing the files… did FreeBSD change where they put the packages lately?

I believe that FreeBSD did remove the archive, perhaps in response to a recent system compromise? I don’t know… At any rate, I did some snooping and ended up using the repository at ftp://ftp-archive.freebsd.org/pub/FreeBSD-Archive/ports/amd64/packages-9.2-release/Latest/ to get the two packages. It’s for the 9.2 release where FreeNAS is 9.3, but as far as I could tell the two packages in question haven’t changed in some time. Anyway, I managed to get the VMware tools installed and everything seems to be working just fine.

Good find. I wonder if this is related to their switching to the pkgng format?

Please!

How do you point pkg_add at the new FTP site to install the packages?

By the way, great info!

I’ve updated the instructions to use the binary module from VMware’s guest CD.

Open a shell and enter:

setenv PACKAGESITE ftp://ftp-archive.freebsd.org/pub/FreeBSD-Archive/ports/amd64/packages-9.2-release/Latest/

Then run pkg_add again.

Make sure to switch to the tcsh shell first if you’re running this from the web GUI shell (mine is bash):

tcsh
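If you would rather not switch shells, the same step works from sh or bash, where `export` takes the place of tcsh's `setenv`. This is a minimal sketch, assuming the legacy `pkg_add -r` reads `PACKAGESITE` as described above; the helper name `install_legacy_pkgs` is hypothetical. Note the later comments in this thread: FreeNAS 9.3 already installs the VMware tools, and packages pulled from this archive may make System -> Update -> Verify Install report inconsistencies.

```shell
#!/bin/sh
# sh/bash equivalent of the tcsh "setenv PACKAGESITE ..." step.
# install_legacy_pkgs is a hypothetical helper name, not part of FreeNAS.

install_legacy_pkgs() {
    # pkg_add -r appends "<name>.tbz" to PACKAGESITE, so keep the trailing slash
    PACKAGESITE=ftp://ftp-archive.freebsd.org/pub/FreeBSD-Archive/ports/amd64/packages-9.2-release/Latest/
    export PACKAGESITE
    for pkg in "$@"; do
        # stop at the first package that fails to install
        pkg_add -r "$pkg" || return 1
    done
}
```

For example: `install_legacy_pkgs compat6x-amd64`.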

Ben, this is a great guide and I’ve referred to it endlessly over the last couple of weeks. Kudos, sir, and many thanks!

I have a nice all-in-one running and FreeNAS 9.3 fires up just as expected. The only problem is that ESXi doesn’t see the FreeNAS VM’s iSCSI datastore until I manually rescan for datastores with the vSphere client.

Is there a way to set up ESXi to rescan datastores after the FreeNAS VM has started up?

You’re welcome, Keith. I’m just running NFS (it’s a lot easier to manage if you don’t need the performance) so I don’t run into that problem… but you can have FreeNAS tell VMware to rescan the iSCSI adapter once it boots…

First, under Configure -> Virtual Machine Startup/Shutdown configure your FreeNAS VM to boot first.

Then give FreeNAS a post-init task (like the nutscript above) to ssh into VMware and run a command like this:

ssh root@vmwarehost esxcli storage core adapter rescan --all (or you can specify the iSCSI adapter).

You’ll need to set up a public/private SSH key pair (see: http://doc.freenas.org/9.3/freenas_tasks.html#rsync-over-ssh-mode) so that FreeNAS can ssh into VMware without being prompted for a password.
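A post-init task along these lines could do the rescan and retry in case ESXi or the storage network isn't ready yet. This is a sketch, assuming key-based SSH from FreeNAS to root on the ESXi host already works; the function name, retry count, delay, and host name are hypothetical. `-o BatchMode=yes` makes ssh fail instead of waiting at a password prompt.

```shell
#!/bin/sh
# Hypothetical post-init helper: ask an ESXi host to rescan its storage
# adapters once FreeNAS is up, retrying a few times before giving up.

rescan_esxi_storage() {
    esxi_host=$1
    tries=${2:-10}   # number of attempts (assumed default)
    delay=${3:-30}   # seconds between attempts (assumed default)
    i=1
    while [ "$i" -le "$tries" ]; do
        # BatchMode=yes: never prompt for a password, just fail
        if ssh -o BatchMode=yes "root@$esxi_host" \
            esxcli storage core adapter rescan --all; then
            return 0
        fi
        sleep "$delay"
        i=$((i + 1))
    done
    return 1
}
```

Saved as a script on the pool, it could be called from a Post Init entry under Tasks -> Init/Shutdown Scripts, e.g. `rescan_esxi_storage vmwarehost`.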

Outstanding! I thought I’d found the solution with the vSphere CLI at https://developercenter.vmware.com/tool/vsphere_cli/6.0, but there may be problems installing it on FreeNAS/FreeBSD. Your approach is much simpler.

This guide was extremely helpful; thank you. I have nearly doubled the performance of my server.

I don’t understand why we added two NICs, though; I don’t ever see myself using the second. I’m not complaining, mind you: I went from 45 Mps to 80+ Mps on WD Greens, which is what I was getting on bare metal.

Hi, Randy. You’re welcome. The reason for the 2nd virtual nic is to separate Storage traffic from LAN traffic. One nice thing about VMware is virtual switches are free.

Ben – I’ve been delving pretty deeply into FreeNAS 9.3 on VMware 6.0 for the last few weeks and thought I would share my impressions.

I am running the latest version (FreeNAS 9.3-STABLE-201506042008) virtualized on VMware 6.0 with 4 vCPUs and 16GB RAM on:

Supermicro X10SL7, Intel Xeon E3-1241v3 @3.5GHz, 32GB ECC RAM

6 x 2TB HGST 7K4000 drives set up as 3 mirrored vdevs for a 6TB zpool (‘tank’)

Motherboard’s LSI 2308 controller passed through to FreeNAS VM via VT-d per best practices

I used your guide here as a roadmap to configure FreeNAS networking and shares.

It seems to work fine as a CIFS/SMB file server. It doesn’t work so well as a VMware datastore. In all of my testing below, I used two Windows VMs – one running Windows 7, the other Windows Server 2012R2 – homed on the appropriate datastore.

At first I thought iSCSI was going to be the ticket and it certainly was blazingly fast running the ATTO benchmark on a single Windows VM. But it turns out that it breaks down badly under any kind of load. When running the ATTO benchmark simultaneously on both Windows VMs I get this sequence of error messages:

WARNING: 10.0.58.1 (iqn.1998-01.com.vmware:554fa508-b47d-0416-fffc-0cc47a3419a2-2889c35f): no ping reply (NOP-Out) after 5 seconds; dropping connection

WARNING: 10.0.58.1 (iqn.1998-01.com.vmware:554fa508-b47d-0416-fffc-0cc47a3419a2-2889c35f): connection error; dropping connection

…after which the VMs either take a very long time to finish the benchmark or lock up/become unresponsive. There is an unresolved Bug report about this very problem here:

https://bugs.pcbsd.org/issues/7622

Next I tried NFS, which seemed to work well as long as you go against best practices and disable ‘sync’ on the NFS share. But, lo and behold, NFS too fails under load; just as with iSCSI, both Windows VMs lock up when running ATTO simultaneously, only in this instance there is no enlightening error message. In addition, NFS datastores sometimes fail to register with VMware after a reboot, despite my scripting a datastore rescan as we discussed earlier here.

So, after high expectations, much testing, and a great deal of disappointment, I’m now going to abandon FreeNAS for a while and give something else a try, perhaps OmniOS/NappIt…

Hi, Keith. Thanks for the update. I did not run into the iSCSI issue, but I mainly run NFS in my environment. From that bug it looks like there is an issue with iSCSI, but here are a few ideas to check on NFS performance:

1. Are you running SSDs for ZIL? VMware’s NFS performs horribly without ZIL, or you can leave sync disabled for testing.

2. Try increasing number of NFS servers in FreeNAS. See Josh’s May 22nd comment on this page: https://b3n.org/freenas-vs-omnios-napp-it/

3. You only have 4 physical cores, so if you give FreeNAS 4 vCPUs you’re forcing either your test VMs or FreeNAS to run on hyperthreaded cores. For your test I would give FreeNAS 2 vCPUs and each of your VMs one vCPU. On my setup I found it best to give FreeNAS 2 vCPUs and all other VMs 1 vCPU.

4. Any kind of vibration, from washing machines in the next room or even having more than one non-enterprise class drive in the same chassis, or even having your server near loud noise can kill performance.

5. Make sure you gave FreeNAS at least 8GB, also if you over-commit memory make sure FreeNAS has “reserve all memory (locked to VM)” checked under the resources in the VM options.

Also, I would be very curious on your OmniOS / Napp-It performance if you go that route and how it compares to FreeNAS, I have gotten much better performance out of OmniOS (especially with NFS) but I’d like to see those results validated.

Hi, Ben — thanks for your suggestions.

RE: 1> I tried using a 120GB Samsung 850 EVO for L2ARC and a 60GB Edge Boost Pro Plus (provisioned down to 4GB) for SLOG and honestly couldn’t see any improvement on my system. At the same time, I understand that these aren’t ideal SSDs for these purposes. Also, I’m only using 16GB for FreeNAS, and certain knowledgeable posters (ahem) at the FreeNAS forums claim that L2ARC and/or SLOG are useless unless you have a gazillion terabytes of RAM. :-)

At any rate, I run the NFS tests with sync disabled when there is no SLOG device.

RE: 2> I tried increasing the NFS servers from 4 to 6, 16, and 32, again with no real difference in outcome. Adding servers only seems to delay the point at which NFS breaks down and the ATTO benchmarks stall.

RE: 3> I was running FreeNAS with 2 sockets and 2 cores per socket for a total of 4 CPUs. I thought by doing this I was allocating half the system’s CPU to FreeNAS. Perhaps a naive assumption on my part? I have since tried other settings: 1 socket w/ 4 cores, 4 sockets with 1 core, etc., including combinations giving the lower vCPU count of 2 which you suggested. Again, this doesn’t seem to make any discernible difference in the outcome.

RE: 4> Hmmmm… Interesting that ambient noise can have such a profound effect… and there IS a window AC near the FreeNAS system. I will try running the benchmarks again with the AC turned off!

RE: 5> For all of these tests I’ve run FreeNAS with 16GB and the Windows VMs with 1 vCPU and 1GB (WS2012R2) or 2GB (Windows 7) of RAM.

Interestingly, the FreeNAS NFS service seems to work fine across the LAN. I’ve configured another ESXi server on a SuperMicro X8SIE-LN4F system and clones of the Windows test VMs successfully complete the ATTO tests simultaneously, albeit only at gigabit speeds.

I’m a developer, and my intended use for a VMware+FreeNAS all-in-one is 1> as a reliable file server and 2> as a platform to run a very few VMs: an Oracle database, various versions of Windows and other OSes as the need arises, etc.

I’m tempted to ignore these oddball ATTO benchmark stalls and just go ahead with my plans to use this all-in-one I’ve built. In all other respects it works great; I love FreeBSD, FreeNAS, and ZFS; I like the fact that there is an active support community; and, like you, I have developed a certain respect for iXsystems and the people who work there.

Hi, Keith. If I get a chance (no promises) I’m going to try to deploy a couple of Windows VMs and run ATTO on my setup to see what happens.

I am running the latest version (FreeNAS 9.3-STABLE-201506042008) virtualized on VMware 6.0 but can’t add vmxnet3 adapters in FreeNAS. I followed your guide and even tried compiling the drivers. Any thoughts?

In addition, I created my FreeNAS VM using version 8 instead of 11. Not sure that version would matter. I did run into issues with the pkg_add compat6x-amd64 and perl, but changed the PACKAGESITE to ftp://ftp-archive.freebsd.org/pub/FreeBSD-Archive/ports/amd64/packages-9.2-release/Latest/

I created the VM with 3 NICs (2 x vmxnet3, 1 x E1000), but in FreeNAS I only see em0 (even when adding interfaces).

Never mind, I started from scratch again using a version 11 VM, and I now get vmx3f0 & vmx3f1 interfaces as expected… Thanks for the great blog…

Hi, Peter. Great to hear. Good to know that you need to use hardware version 11 for the guest tools to work.

Thanks for the great blog, Ben, really useful. Another thing I figured out yesterday is that the Plex plugin in FreeNAS needs ESXi promiscuous mode enabled… http://kb.vmware.com/selfservice/microsites/search.do?cmd=displayKC&docType=kc&docTypeID=DT_KB_1_1&externalId=1004099

Without it, you can’t access the web interface for the Plex plugin… Might be useful info for someone.

You’re welcome. I meant to mention that in the article, so I’m glad you brought up the reminder about promiscuous mode. How do you like Plex? I was looking at purchasing it, but $150 is a little steep, so I’m using Emby at the moment.

Great tip. I wasted hours on FreeNAS networking and multiple NICs trying to get the FreeNAS Plex web interface working.

Hi Ben. First off, great blog and great article, very informative and helpful. I’m in Peter’s boat: I’m running the latest FreeNAS (FreeNAS-9.3-STABLE-201512121950) on VMware 6.0, and I also can’t add vmxnet3 adapters in FreeNAS. I’ve tried numerous times, using both the FTP site and the binaries, and both ways it states that VMware Tools are running. I’ve set up the Tunables correctly as well. This is also the fifth time starting from scratch. The only thing that is different is that I’m running PH19-IT for the LSI, but that should not affect anything. Any suggestions on what I should look for would be greatly appreciated.

Hi, TJ. The LSI version won’t make a difference. I’m assuming you created your FreeNAS VM with HW version 11?

One thing you might try is going to an older version of VMware 6 or an older version of FreeNAS (not to run in production obviously, just to troubleshoot). Also, see if the vmxnet3 drivers work with a normal FreeBSD 9.3 install.

Apologies, I forgot to reply back on here to let you know I got it working. Oddly enough, I ended up starting from scratch a 6th time, not changing how I was installing everything from the previous attempts and for whatever reason it worked this 6th time. Not sure why it decided to work this time, but I’ll take it. Also, I want to mention that I ended up flashing version 20 for the LSI and so far it’s working wonderfully with excellent read/write throughput.

So, everything is working with VMware 6.0 using version 11, FreeNAS-9.3-STABLE-201512121950, PH20-IT for the LSI, and vmxnet3.ko with both interfaces showing up as vmx3f0 & vmx3f1.

On a side note, have you tried adding an additional physical NIC to your system and running a lagg for storage in FreeNAS? In my old FreeNAS box I had one NIC for management and two in a lagg for storage, and was hoping to do the same with this setup.

Glad to hear you got it working. I have not setup lagg with FreeNAS under VMware–I haven’t had the need to in my home environment. But I see no reason why it wouldn’t work. Thanks for the report on version 20. I might remove my warning note and flash that myself soon.

No issues with VMXNET3 on OmniOS.

Been running OmniOS for months, works like a champ. My primary SSD which has ESXi + OmniOS failed and the system kept going because of the OmniOS mirror.

System runs like a champ. I don’t think I would switch (hard to find a reason). Happy Holidays, Ben!

Hi, Ben — I have discovered that using an MTU of 9000 is what caused the time-out problems I mentioned upthread. Dropping back to the default value fixed the problem of NFS/iSCSI datastores ‘dropping out’! I posted some of the gory details at the FreeNAS forum:

https://forums.freenas.org/index.php?threads/freenas-9-3-vm-datastore-on-vmware-esxi-6-vmxnet3-adapter-unreliable-under-heavy-load-with-mtu-9000.35014/

Hi, Keith. Excellent troubleshooting! Thanks for posting that, hopefully someone from FreeNAS can respond there to give some insight into the issue. I’m actually not surprised it was the MTU as I’ve seen that hurt performance before, but it slipped my mind. The good thing is on modern hardware running the default MTU is probably not going to hurt your performance that much–I think I measured around 2% improvement with 9000 on even lesser hardware. I am very curious now–once I get some Windows VMs setup I’ll try to run that ATTO test on both MTU settings to see if I can reproduce your issue.
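If you do want to try MTU 9000 again, it is worth confirming that every hop actually passes jumbo frames before relying on them. A minimal sketch from the FreeNAS side, assuming FreeBSD's `ping`, where `-D` sets the Don't Fragment bit; the function name is hypothetical. From the ESXi side the equivalent check is `vmkping -d -s 8972 <FreeNAS storage IP>`. A passing check only shows the path accepts 9000-byte frames; it would not catch the under-load failures described in this thread.

```shell
#!/bin/sh
# Sketch: verify a 9000-byte MTU end to end before trusting it.
# Payload 8972 = 9000 - 20 (IPv4 header) - 8 (ICMP header).
# -D (FreeBSD) sets Don't Fragment, so a hop with a smaller MTU makes
# the ping fail instead of silently fragmenting.

check_jumbo_path() {
    target=$1
    if ping -D -c 3 -s 8972 "$target" >/dev/null 2>&1; then
        echo "jumbo OK: $target"
    else
        echo "jumbo FAILED: $target (stay at MTU 1500)"
        return 1
    fi
}
```

For example, `check_jumbo_path 10.55.0.2` against the ESXi storage vmkernel address.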

Ahem… well, I was only half right. This afternoon I confirmed that reverting to the standard MTU fixes the problem w/ NFS datastores, but not iSCSI. So, for now, I have to conclude that iSCSI is basically unreliable, and therefore unusable, on VMware 6 + FreeNAS 9.3.

I wonder if reverting to VMware 5.x would make any difference?

Does the problem with iSCSI occur on both VMXNET3 and E1000 or just VMXNET3?

Hi, Keith. I was able to duplicate your problem. I deployed two Win 10 VMs with ATTO. On NFS very slow I/O with multiple VMs hitting the storage. And on iSCSI multiple instances of this:

WARNING: 10.55.0.2 (iqn.1998-01.com.vmware:5506d188-5539-4d51-01f3-002590f34e14-3c79ad57): no ping reply (NOP-Out) after 5 seconds; dropping connection

WARNING: 10.55.0.2 (iqn.1998-01.com.vmware:5506d188-5539-4d51-01f3-002590f34e14-3c79ad57): connection error; dropping connection

WARNING: 10.55.0.2 (iqn.1998-01.com.vmware:5506d188-5539-4d51-01f3-002590f34e14-3c79ad57): connection error; dropping connection

And pretty awful performance.

Disabling segmentation offloading appears to have fixed the issue for me. You can turn it off with:

# ifconfig vmx3f0 -tso

# ifconfig vmx3f1 -tso

Can you test in your environment?

Note that this setting does not survive a reboot, so if it does help add it to the post init scripts or network init options under FreeNAS.
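A sketch of what that post-init command could look like, looping over the storage-facing interfaces. The interface names are the VMXNET3 ones from this thread; substitute your own, and treat the function name as hypothetical. Later in this thread the -tso flag had to be set on each member NIC rather than on a lagg interface, which is why the loop works on individual interfaces.

```shell
#!/bin/sh
# Sketch: turn off TCP segmentation offload on each listed interface.
# ifconfig flags are not persistent, so run this at every boot
# (e.g. as a Post Init command in FreeNAS).

disable_tso() {
    rc=0
    for ifc in "$@"; do
        # "-tso" clears the TSO capability on this interface only
        ifconfig "$ifc" -tso || rc=1
    done
    return $rc
}
```

For example: `disable_tso vmx3f0 vmx3f1`.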

Just for the record, I am also having the same “no ping reply (NOP-Out) after 5 seconds; dropping connection” issue as Keith. I’ve taken the MTU back to 1500, but this has made no difference. I am on a current FreeNAS patch level and also running VMware 6.0 2715440. I am also running good-quality hardware with Intel/Broadcom NICs.

Two of my 3 hosts had Delayed ACK enabled, and they went down with the above issue. The one that did not stayed up, although it was under the least load. I’m going to disable Delayed ACK again on my VMware iSCSI initiators, though it does seem to seriously kill performance. From what I can see, the iSCSI target in FreeNAS does fall over under heavy load, such as when I have backups running. My environment is used 24 hours a day.

Basically for me everything was stable on FreeNAS 9.2. I suspect the new performance features in the 9.3 iSCSI target have issues when put under load.

Thanks for the confirmation of the issue, Richard. I remembered that at work we had to disable segmentation offloading because of a bug in the 10Gb Intel drivers (which had not been fixed by Intel as of February, at least), and this may be the same issue with the VMXNET3 driver. See my comment above responding to Keith and let me know if that helps your situation at all.

Ben (and Richard) — Sorry I’m just now seeing your questions and updates.

To answer your question Ben: the problem doesn’t happen with the E1000 (see below).

Over the last few days I’ve learned that the VMware tools are automatically installed by FreeNAS 9.3, but NOT the VMXNET3 NIC driver! And according to Dru Lavigne (FreeNAS Core Team member), the VMXNET3 driver will not be supported by FreeNAS until version 10 (see this thread):

https://forums.freenas.org/index.php?threads/vmware-tools-install-in-freenas-9-3.35041/

So, since reliability is more important to me than performance, I have reverted to the somewhat slower E1000 NIC drivers. For now, E1000-based NFS datastores w/ sync disabled are suitable for my needs. I’m a developer and pretty much the only user of all of the systems here at my home, with the exception of backing up the family’s iPads’n’gizmos. I will probably round up an Intel DC S3700 and install it as a ZIL SLOG at some point. This would let me restore synchronous writes on the VMware datastores without sacrificing too much performance. Both NFS and iSCSI are unusably slow w/ synchronous writes enabled, at least on my system.

Regarding the VMware tools installation… Not knowing they were already being installed by FreeNAS, I originally used Ben’s instructions above, fighting through the missing archive issues and so forth. But I eventually ran the ‘System->Update->Verify Install’ tool and FreeNAS told me that quite a few of my modules were out-of-whack. I suspect this was because I’d installed Perl and such from the slightly out-of-date archive during the course of installing the tools. I re-installed FreeNAS just a few days ago and this problem ‘went away’.

FreeNAS 9.3 seems to be going through a rough patch recently; I think they may have ‘picked it a little green’, as they say here in the South. There has been a slew of updates and bug fixes since I started testing it in early May. I like it and want to use it, but I’m hoping to see the team get it a little more stabilized before I put it into ‘production’ use. For now, I’m still relying on my rock-steady and utterly reliable Synology DS411+II NAS.

Hi Ben,

Just for everyone’s benefit and clarity, I am running FreeNAS on an HP xw4600 workstation with a quad-port Intel card using the igb driver. I also have link aggregation configured; however, I am only using fault-tolerant teams, so this should be safe.

I’ve not made any additional changes since my post the other day, but here is a sample of today’s issues from the syslog output. Our system has not actually gone down again, but this may be down to disabling the Delayed ACK option in the iSCSI initiator in VMware.

Jun 20 17:53:41 xw4600 WARNING: 10.2.254.31 (iqn.1998-01.com.vmware:esxi-01-610a1caf): no ping reply (NOP-Out) after 5 seconds; dropping connection

Jun 20 17:53:41 xw4600 WARNING: 10.2.253.31 (iqn.1998-01.com.vmware:esxi-01-610a1caf): no ping reply (NOP-Out) after 5 seconds; dropping connection

Jun 20 18:26:54 xw4600 WARNING: 10.2.253.33 (iqn.1998-01.com.vmware:esxi03-239ceb4c): no ping reply (NOP-Out) after 5 seconds; dropping connection

Jun 20 18:26:55 xw4600 WARNING: 10.2.254.33 (iqn.1998-01.com.vmware:esxi03-239ceb4c): no ping reply (NOP-Out) after 5 seconds; dropping connection

Jun 21 00:00:01 xw4600 syslog-ng[4232]: Configuration reload request received, reloading configuration;

Jun 21 17:36:12 xw4600 WARNING: 10.2.253.30 (iqn.1991-05.com.microsoft:veeam.london.local): no ping reply (NOP-Out) after 5 seconds; dropping connection

Jun 22 00:00:01 xw4600 syslog-ng[4232]: Configuration reload request received, reloading configuration;

Jun 23 00:00:01 xw4600 syslog-ng[4232]: Configuration reload request received, reloading configuration;

Jun 23 15:01:41 xw4600 WARNING: 10.2.253.30 (iqn.1991-05.com.microsoft:veeam.london.local): no ping reply (NOP-Out) after 5 seconds; dropping connection

Jun 24 00:00:01 xw4600 syslog-ng[4232]: Configuration reload request received, reloading configuration;

Jun 24 11:31:28 xw4600 ctl_datamove: tag 0x1799cf6 on (3:4:0:1) aborted

Jun 24 11:31:33 xw4600 ctl_datamove: tag 0x1798fe4 on (3:4:0:1) aborted

Jun 24 11:31:33 xw4600 ctl_datamove: tag 0x1798cd7 on (3:4:0:1) aborted

Jun 24 11:31:34 xw4600 ctl_datamove: tag 0x17a50bf on (2:4:0:1) aborted

Jun 24 11:31:34 xw4600 ctl_datamove: tag 0x1799b84 on (3:4:0:1) aborted

Jun 24 11:32:34 xw4600 ctl_datamove: tag 0x179bbf3 on (3:4:0:1) aborted

Jun 24 11:32:52 xw4600 ctl_datamove: tag 0x17a5b70 on (2:4:0:1) aborted

Jun 24 11:32:52 xw4600 (3:4:1/0): READ(10). CDB: 28 00 1f 31 34 a0 00 01 00 00

Jun 24 11:32:52 xw4600 (3:4:1/0): Tag: 0x1798872, type 1

Jun 24 11:32:52 xw4600 (3:4:1/0): ctl_datamove: 150 seconds

Jun 24 11:32:52 xw4600 ctl_datamove: tag 0x1798872 on (3:4:0:1) aborted

Jun 24 11:32:52 xw4600 (3:4:1/0): READ(10). CDB: 28 00 1f 31 34 a0 00 01 00 00

Jun 24 11:32:52 xw4600 (3:4:1/0): Tag: 0x1798872, type 1

Jun 24 11:32:52 xw4600 (3:4:1/0): ctl_process_done: 150 seconds

Jun 24 11:32:52 xw4600 (2:4:1/0): READ(10). CDB: 28 00 1f 31 35 a0 00 00 80 00

Jun 24 11:32:52 xw4600 (2:4:1/0): Tag: 0x17a528b, type 1

Jun 24 11:32:52 xw4600 (2:4:1/0): ctl_process_done: 149 seconds

Just to clarify, you are suggesting I disable TSO/LRO on the FreeNAS box, not on the VMware hosts? I’ve issued “ifconfig lagg0 -tso -vlanhwtso”, which appears to have disabled the TSO options on all lagg and member igb adapters in the system.

Will see how things progress and if they work out then I will add them to the nic options for all nics.

Thanks,

Rich.
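When comparing runs like this, it can help to tally how often each initiator hits the NOP-Out timeout instead of reading the log by eye. A minimal sketch, assuming the syslog message format shown in the excerpts above (on FreeNAS these normally land in /var/log/messages); the function name is hypothetical.

```shell
#!/bin/sh
# Sketch: count iSCSI "no ping reply (NOP-Out)" drops per initiator
# address in a syslog file. Assumes the format of the log lines above.

count_nop_out_drops() {
    awk '
        index($0, "no ping reply (NOP-Out)") > 0 {
            # the initiator address is the field right after "WARNING:"
            for (i = 1; i < NF; i++)
                if ($i == "WARNING:") { drops[$(i + 1)]++; break }
        }
        END { for (ip in drops) printf "%s %d\n", ip, drops[ip] }
    ' "$1" | LC_ALL=C sort
}
```

For example, `count_nop_out_drops /var/log/messages` prints one "address count" line per initiator.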

Hi, Richard. Just curious… are you running FreeNAS in a VM? Or on the bare metal?

My money is on bare metal.

Hi, Rich. Correct, I have not had to disable TSO/LRO on VMware, just on FreeNAS. In our case we had two Intel 10Gb NICs configured in a LAGG and were getting great write performance, but reads were pretty bad. We traced it to a bug in the Intel FreeBSD drivers. I don’t think we saw the connection drop, but we definitely saw poor read performance from FreeNAS. I do recall we had to set the -tso switch on the individual interfaces for some reason; it didn’t seem to take effect when set on the lagg interface. I think in the FreeNAS post-init script we have “ifconfig ixgbe0 -tso; ifconfig ixgbe1 -tso”, which does the trick. Another thing we had to do was lower the MTU to 1500 on the storage network, but I’m thinking that may have been because of a limitation on the switch, or another device that we needed on the storage network. Either way, 1500 MTU will hardly hurt your performance, so I’d start there and make sure you get good performance before trying to go higher. This may not be the issue in your environment, but it’s worth a shot.

Hi Ben and Keith,

Ben’s assumption is right: bare metal in my case, and I would only use a virtualised SAN for testing, as I’m sure you are, Keith. The systems I am having issues with are our non-critical production and testing systems.

We are a Cisco (Catalyst) house for the network but I went back to 1500 MTU fairly early on as there was a suggestion that frames of 9000 bytes might not be equal in all systems and drivers.

I’ve checked the syslogs today and so far so good but I think I need a good week first before I feel safe.

I do wish FreeNAS would chill out on the regular updates a bit and work on the stability aspect. 9.2 was a good release for me. As Ben already said you don’t want to bother messing with updating a SAN that often and when you do you want the stable release you are installing to be rock solid.

It almost feels like I’m on the nightly dev build release tree but I double checked and I’m not. :-)

An impressive little system anyway and I do appreciate all the hard work that goes into it, plus the community that comes with it.

Thanks for your time chaps with the suggestions and thanks Devs!

Hi, Keith. Thanks for the confirmation on the E1000. FreeBSD 9 is officially supported with the VMXNET3 driver from ESXi 6 so I wonder if there’s something specific to FreeNAS that’s giving them trouble? I just tried a verify install on mine and it found no inconsistencies so the older FTP source probably did it. But I think the E1000 driver is fine, on OmniOS last year I did a test between E1000 and VMXNET3 and the VMXNET3 was about 5% faster and this was on an Avoton so probably even narrower on an E3.

I’ve got four DC S3700s and they work very well in both FreeNAS and OmniOS so I think you’ll do well there. There’s also the new NVMe drives that are coming out which should be faster.

Regarding stability I agree: it doesn’t seem to be as robust as the OmniOS LTS releases, and the update cycle is a lot faster, and storage is really something you want to turn on and rarely, if ever, update. On the other hand, FreeNAS is pretty quick at fixing issues found by a very wide and diverse user base. I would guess that a lot of updates are fixes for edge cases that aren’t even considered on most storage systems. I would like to see a FreeNAS LTS train on a slower, more tested update cycle, but that is probably the role TrueNAS fills. I stay a little behind on FreeNAS updates to be on the safe side.

Morning Chaps,

Well, this morning at around 3am FreeNAS started causing issues with VMware, which once again caused our VI to slow to a crawl. No errors on the syslog console, but as soon as we powered off FreeNAS everything else sprang into life. We powered it back on and started the iSCSI service, but I’ve had a few of the errors below.

Jun 29 09:40:37 xw4600 ctl_datamove: tag 0x26f99fe on (3:4:0:1) aborted

Jun 29 09:40:37 xw4600 ctl_datamove: tag 0x26eaf0c on (5:4:0:1) aborted

Jun 29 09:40:37 xw4600 ctl_datamove: tag 0x26f99ff on (3:4:0:1) aborted

Jun 29 09:40:37 xw4600 ctl_datamove: tag 0x26f9a00 on (3:4:0:1) aborted

Jun 29 09:40:37 xw4600 ctl_datamove: tag 0x26f9a01 on (3:4:0:1) aborted

Jun 29 09:40:37 xw4600 ctl_datamove: tag 0x26eaf33 on (5:4:0:1) aborted

Jun 29 09:40:37 xw4600 ctl_datamove: tag 0x26f9a02 on (3:4:0:1) aborted

Jun 29 09:40:37 xw4600 ctl_datamove: tag 0x26f9a03 on (3:4:0:1) aborted

Jun 29 09:40:37 xw4600 ctl_datamove: tag 0x26eaf36 on (5:4:0:1) aborted

Jun 29 09:40:37 xw4600 ctl_datamove: tag 0x4f701 on (4:4:0:1) aborted

Jun 29 09:40:51 xw4600 ctl_datamove: tag 0x26eaec1 on (5:4:0:1) aborted

Jun 29 09:40:51 xw4600 ctl_datamove: tag 0x26eaf37 on (5:4:0:1) aborted

Jun 29 09:40:51 xw4600 ctl_datamove: tag 0x26eaf39 on (5:4:0:1) aborted

Jun 29 09:40:51 xw4600 ctl_datamove: tag 0x26eaf3a on (5:4:0:1) aborted

Jun 29 09:40:51 xw4600 ctl_datamove: tag 0x26eaf3b on (5:4:0:1) aborted

Jun 29 09:40:51 xw4600 ctl_datamove: tag 0x4f702 on (4:4:0:1) aborted

Jun 29 09:40:51 xw4600 ctl_datamove: tag 0x4f6ed on (6:4:0:1) aborted

Jun 29 09:40:51 xw4600 ctl_datamove: tag 0x26eaf3c on (5:4:0:1) aborted

Jun 29 09:40:51 xw4600 ctl_datamove: tag 0x26eaf3e on (5:4:0:1) aborted

Jun 29 09:40:51 xw4600 ctl_datamove: tag 0x26eaf38 on (5:4:0:1) aborted

Jun 29 09:40:51 xw4600 ctl_datamove: tag 0x4f6f8 on (6:4:0:1) aborted

Jun 29 09:40:51 xw4600 ctl_datamove: tag 0x4f708 on (4:4:0:1) aborted

Jun 29 09:40:51 xw4600 ctl_datamove: tag 0x4f70d on (4:4:0:1) aborted

Jun 29 09:40:51 xw4600 ctl_datamove: tag 0x4f70b on (4:4:0:1) aborted

Jun 29 09:40:51 xw4600 ctl_datamove: tag 0x548e9 on (2:4:0:1) aborted

Jun 29 09:40:51 xw4600 ctl_datamove: tag 0x548ea on (2:4:0:1) aborted

Jun 29 09:42:09 xw4600 ctl_datamove: tag 0x4f73d on (4:4:0:1) aborted

I’ve been doing some diagnostics on my hosts with esxtop, pressing u to show my storage by LUN. I’ve observed that DAVG (average device latency per command, in ms) is often between 17 (best) and 550 (worst). I had the opportunity to speak to a senior VMware engineer about this last week, who advised me that anything over 30 indicates an issue. Our main Starwind iSCSI SAN, which is heavily loaded and processes around 400 CMDS/s, has a DAVG of about 4 to 6.

I’m ditching FreeNAS 9.3. I may go back to 9.2 or try OmniOS LTS, but whatever I do, I can’t leave things like this and hope it gets fixed quickly. I still think it’s a great little product, but the release updates seem to come a little thick and fast for me. As Ben said before, storage is something you don’t really want to be messing with once you have it commissioned, and I need something stable.

Thanks for your help, everyone; it’s been a fun and informative ride!

Rich.

Thanks for the update, Rich. I’ve been running several FreeNAS 9.3 servers and haven’t run into these problems, but it seems your issue isn’t entirely uncommon either. If you had unlimited time (which I’m sure you don’t), it would be interesting to see whether you ran into the same issue on FreeBSD 10 (which uses the kernel-based iSCSI target that was implemented in FreeNAS 9.3).

Outstanding Guide!

First, excuse my English. It’s not my first language.

I read it with great enthusiasm as I am building a similar setup.

HP ml310e

IBM 1015 in IT mode with LSI firmware (firmware matches the FreeNAS driver)

ESXi 6.0, with the 1015 passed through to the FreeNAS VM

FreeNAS VM located on an SSD datastore

Around 18 TB of data disks with ZFS attached to the 1015. No ZIL SLOG

Using the VMware tools that came with the FreeNAS install, so E1000 NICs

I ran out of SSD datastore space and wanted to use the FreeNAS-exported NFS or iSCSI, handed back to ESXi as a datastore, for less important/demanding VMs.

Horrible performance :) So I started reading your comments and all the discussion on the FreeNAS forums you linked to. I got a bit confused as to what your actual conclusions were. Am I right in assuming that the following is what you came up with?

Use E1000 driver (slower but stable)

Use an SSD ZIL SLOG if you want sync enabled; otherwise, disable sync.

Keep MTU on 1500

Use NFS, don’t use iSCSI

Disable tso offloads (or was that only seen on bare metal?)

Or are you still having issues in spite of these corrections?

Best regards

Kenneth

Hi, Kenneth.

The problem with ESXi on NFS is that it forces an O_SYNC (cache flush) after every write. ESXi does not do this with iSCSI by default (but that means you will lose the last few seconds of writes if power is lost, unless you set sync=always, which then gives iSCSI the same disadvantage as NFS).
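The sync behaviour described here is controlled by the ZFS `sync` property on the dataset (or zvol) behind the datastore. A minimal sketch for switching it, assuming a hypothetical dataset named `tank/vmware` and a hypothetical helper name; `disabled` is for testing only, since it trades the last few seconds of writes on power loss for speed.

```shell
#!/bin/sh
# Sketch: set the ZFS sync property on the dataset backing a datastore.
#   standard - default: honor sync requests (ESXi over NFS syncs every write)
#   always   - treat every write as sync (gives iSCSI NFS-like safety)
#   disabled - ignore sync requests; fast, but the last few seconds of
#              writes are lost on power failure. Testing only.

set_vm_sync() {
    dataset=$1
    mode=$2
    case "$mode" in
        standard|always|disabled) ;;
        *) echo "unknown sync mode: $mode" >&2; return 2 ;;
    esac
    # apply the setting, then print the value ZFS now reports
    zfs set sync="$mode" "$dataset" && zfs get -H -o value sync "$dataset"
}
```

For example, `set_vm_sync tank/vmware disabled` for a quick before/after comparison, then `set_vm_sync tank/vmware standard` once a SLOG is in place.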

For performance with ESXi and ZFS here’s what you’ll want to consider:

– Get a fast SSD for SLOG such as the HGST ZeusRAM or S840z, or if on a budget the Intel DC S3700 or DC S3500 for the log. There are other SSDs but I’ve found they lack power loss protection or performance. Before buying a log device you can also try running with sync=disabled (which will result in data loss of the last few seconds if you lose power), if you see a large amount of improvement in write performance then this will help. My guess is this will make the largest difference.

– Consider your ZFS layout. For ZFS each vdev has the IOPS performance of the slowest single disk in that vdev. So maximize the number of vdevs. Mirrors will get you the most performance. If you have 18 disks you could also consider 3 vdevs of 6 disks in RAID-Z2, but mirrors would be far better.

– For read performance the more ram the better, try to get your working set to fit into ARC. You can look at your ARC cache hit ratio on the graphs section in FreeNAS, on the ZFS tab. If your arc cache hit ratio is consistently less than 90 or 95% you will benefit from more RAM.

– If you have a lot of RAM, say 64GB and are still low on your ARC cache hit ratio you may consider getting an SSD for an L2ARC.

– Make sure you disabled atime on the dataset with the VMs.

– iSCSI should have better performance than NFS, but it seems to be causing problems for some people and resulting in worse performance. I’ve always run NFS as it’s easier to manage and not as bad on fragmenting.

– I wouldn’t worry about MTU 9000, it makes very little difference with modern hardware and has the risk of degradation if everything isn’t set just right.

– The VMXNET3 driver works fine for me, but others seem to be having issues with it. The main difference is VMXNET3 has less CPU overhead so if you have a fast CPU this won’t make a large difference.
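For the ARC hit ratio point above, the same number can be read from the shell on FreeBSD via the ZFS kstat sysctls. A sketch, assuming the `kstat.zfs.misc.arcstats.hits` and `.misses` counters; the function name is hypothetical. These counters are cumulative since boot, so the result is a long-run average and may not match the FreeNAS graph, which plots the ratio over time.

```shell
#!/bin/sh
# Sketch: ARC hit ratio since boot from FreeBSD's ZFS kstat sysctls.
# Prints an integer percentage (rounded down).

arc_hit_ratio() {
    hits=$(sysctl -n kstat.zfs.misc.arcstats.hits)
    misses=$(sysctl -n kstat.zfs.misc.arcstats.misses)
    total=$((hits + misses))
    if [ "$total" -eq 0 ]; then
        echo "no ARC activity yet" >&2
        return 1
    fi
    echo $((hits * 100 / total))
}
```

A result that stays below roughly 90 to 95 under your normal workload is the cue, per the advice above, to look at more RAM first and L2ARC second.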

Also, make sure you're not over-committing memory or CPU. If FreeNAS is contending with the VMs for resources, it can cause serious performance issues. One common mistake: with a hyper-threaded CPU, it appears as though you have twice as many cores as you actually do. It's probably wise to reserve (lock) all of the FreeNAS guest's memory and also give it the highest CPU priority.
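To avoid the hyper-threading trap, compare the vCPUs you've assigned against physical cores, not logical threads; `esxcli hardware cpu global get` on the ESXi host reports both "CPU Cores" and "CPU Threads". The helper below is a hypothetical sketch of the arithmetic only, and the example numbers are illustrative.

```shell
#!/bin/sh
# vcpu_ratio TOTAL_VCPUS PHYSICAL_CORES -- hypothetical helper.
# Prints assigned vCPUs per *physical* core (hyper-threads are not counted).
vcpu_ratio() {
    if [ "$2" -le 0 ] 2>/dev/null || [ -z "$2" ]; then
        echo "vcpu_ratio: physical core count must be > 0" >&2
        return 2
    fi
    awk -v v="$1" -v c="$2" 'BEGIN { printf "%.2f\n", v / c }'
}
```

For example, 12 vCPUs across all VMs on a 4-core/8-thread Xeon E3-1240v3 is `vcpu_ratio 12 4` = 3.00 per physical core; counting the 8 threads by mistake would make it look like 1.50.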

Hope that helps.

Hi, I found your blog through a Google search and have been reading a few posts. It happens that your All-in-One system is quite similar to mine, except I use an all-SSD environment (I also set up VMXNET3 using the binary driver, so we are on pretty much the same road toward an optimized setup). I don't want to be locked into any kind of hardware RAID, so ZFS is my choice.

The connection dropouts are a problem with the new FreeBSD kernel iSCSI target (they don't happen if you use FreeNAS 9.2). Anyone facing this problem with FreeNAS 9.3 can check my thread on the FreeNAS forum, open since 2014, here:

https://forums.freenas.org/index.php?threads/freenas-9-3-stable-upgrading-issue-from-9-2-1-9.25462/page-2

Setting the sysctl kern.icl.coalesce=0 can help reduce the connection drops (but latency sometimes rises very high).
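For reference, a minimal sketch of applying that tweak from the FreeNAS shell. The `icl_coalesce` helper name is hypothetical and only wraps `sysctl -n` and `sysctl name=value`; treating the value as 0 or 1 is an assumption. A runtime change like this is lost on reboot, so to keep it you'd add it as a Sysctl-type entry under System > Tunables in the FreeNAS GUI.

```shell
#!/bin/sh
# icl_coalesce [0|1] -- hypothetical helper around the kern.icl.coalesce sysctl.
# With no argument, print the current value; with 0 or 1, set it (runtime only).
icl_coalesce() {
    if [ $# -eq 0 ]; then
        sysctl -n kern.icl.coalesce
        return
    fi
    case "$1" in
        0|1) sysctl kern.icl.coalesce="$1" ;;
        *) echo "icl_coalesce: expected 0 or 1" >&2; return 2 ;;
    esac
}
```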

Basically, I think FreeNAS 9.3 is not good for production, as it has a lot of trouble with the kernel target. We might need to wait until FreeNAS 10. In the first few updates, FreeNAS 9.3 was really buggy. I still don't know why my FreeNAS has fairly low write speed: if I fire up another SAN VM and hand the SAS controller and my pool over to it, it writes faster (all with the same settings: 2 vCPUs, 8GB RAM, VT-d LSI SAS controller, ZFS mirror with 4 SSDs).

Hi, abcslayer, sounds like you like ZFS for the same reason I do. Thanks for the info on coalesce. I can also confirm the issue doesn't occur on 9.2 or on OmniOS, so I think you're right that it's an issue with the FreeNAS 9.3 kernel iSCSI target. Hopefully iX can track it down.

So far it seems the best platform for robustness and performance on ZFS is OmniOS with NFS. FreeNAS 9.3 recently fixed some NFS write performance issues; it's not quite as fast as OmniOS yet, but close enough that it doesn't matter unless you're doing some pretty intensive write I/O.

Ben, do you have any standard test that one can run to validate performance with OmniOS? I'm hoping to do an apples-to-apples comparison.

Also, which driver are you using with OmniOS, VMXNET3 or E1000? Thanks again for the great blog!

Hi, Dave. I posted the sysbench script that I use in the comments here: https://b3n.org/vmware-vs-bhyve-performance-comparison/#comments

Another test I use is CrystalDiskMark: https://b3n.org/freenas-vs-omnios-napp-it/

Keep in mind that with CrystalDiskMark I'm measuring IOPS, but it displays MBps; to get the IOPS after a test, go to File, Copy, then paste into Notepad. Also, my test is run entirely within VMware: I have OmniOS and FreeNAS installed inside VMware, use VT-d to pass the HBA through to the ZFS server, and from the ZFS server I make an NFS share and mount it in VMware. The guest OS where I perform the test is a Windows or Linux system running on a vmdk file on that NFS share. Hope that helps; let me know if I can give more details on the test. I'd love to see how your results compare.
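If you only have CrystalDiskMark's MBps figures, you can also convert the 4K results to IOPS by hand. A sketch, assuming CrystalDiskMark's MB means 1,000,000 bytes and a 4K transfer is 4,096 bytes; `mbps_to_iops` is a hypothetical helper name.

```shell
#!/bin/sh
# mbps_to_iops MBPS [BLOCK_BYTES] -- hypothetical helper.
# IOPS = (MB/s * 1,000,000 bytes) / block size; block size defaults to 4096 (4K).
mbps_to_iops() {
    awk -v mb="$1" -v bs="${2:-4096}" 'BEGIN { printf "%.0f\n", mb * 1000000 / bs }'
}
```

For example, `mbps_to_iops 40.96` prints 10000.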

For OmniOS I used VMXNET3, following Napp-It's instructions. I tested the E1000 and noticed about a 1-2% performance degradation, not enough to make a big difference.

I did not find a guide where Napp-It details how to set up their server for VMXNET3, but between your guide (which is great) and the OmniOS documentation I was able to figure it out.

I have no L2ARC, but I do have an S3700 provisioned to 8GB as you suggested. Also, I have passed through a Supermicro 2308 running P20 firmware.

Here are some of the test results, with a setup similar to the one you describe: OmniOS installed on a SATADOM, and Windows Server 2012 R2 running from a datastore mounted via NFS.

CrystalDiskMark, 5 passes, 1GiB (IOPS):

Random Read 4K Q1T1: 10722.7

Random Write 4K Q1T1: 1667.0

Random Read 4K Q32T1: 79347.9

Random Write 4K Q32T1: 19895

My Q32 IOPS are in line with yours, but the Q1 numbers are not; they are closer to the FreeNAS numbers. Any thoughts?

Hi, Dave.

Thanks for posting the results, it’s always good to see other people validate or invalidate my results on similar setups.

One difference is you are on firmware P20 where I was on P19. You may want to consider downgrading. See: http://hardforum.com/showthread.php?t=1573272&page=320 and https://forums.servethehome.com/index.php?threads/performance-tuning-three-monster-zfs-systems.3921/ and http://napp-it.org/faq/disk-controller_en.html

I'm not sure that's the difference, but it's the first place I'd look. A million other things could explain it, but the fact that Q1 is slower while Q32 is not points toward a latency or clock-frequency difference: perhaps I had a slightly faster CPU or lower-latency RAM. It could also be any number of other things: slightly faster-seeking HDDs, a 9000 MTU setting missed somewhere so packets get fragmented on the VMware network, a different motherboard firmware version, a different motherboard with a different bus speed, or the fact that you were using Windows 2012 while I was using a beta Windows 10 build. Also, I was using 2 x DC S3700s striped; if you're only using one, that could be the difference (although if that's the bottleneck, setting sync=disabled should get you results as fast as or faster than mine).
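The sync=disabled experiment is easy to get wrong by forgetting to switch it back afterward. A minimal sketch: the hypothetical `with_sync_disabled` helper records the dataset's current sync value and where it came from, disables sync, runs your benchmark command, then restores the original setting even if the benchmark fails. The dataset name and benchmark command are placeholders.

```shell
#!/bin/sh
# with_sync_disabled DATASET CMD [ARGS...] -- hypothetical helper.
# Temporarily sets sync=disabled on DATASET while CMD runs, then restores it.
# Only for testing: with sync disabled, a power loss can cost recent writes.
with_sync_disabled() {
    ds="$1"
    shift
    prev=$(zfs get -H -o value sync "$ds") || return 1
    src=$(zfs get -H -o source sync "$ds") || return 1
    zfs set sync=disabled "$ds" || return 1
    rc=0
    "$@" || rc=$?
    # Put things back: re-set a locally set value, otherwise drop our
    # override so the dataset inherits (or uses the default) again.
    if [ "$src" = "local" ]; then
        zfs set sync="$prev" "$ds"
    else
        zfs inherit sync "$ds"
    fi
    return "$rc"
}
```

Usage, with placeholders: `with_sync_disabled tank/vm ./run-benchmark.sh`, then compare against a run without the wrapper.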

I forgot to mention it, but did you disable atime on the ZFS dataset with the NFS share in Napp-It?

Ben

Thanks for pointing out the firmware! I was reading the FreeNAS forums, and it seems they recommend P20, so I installed that. I've since removed it and flashed P19. I compared my sysbench results to the ones you published and the numbers all look very similar! So perhaps it's something with the OS or version, who knows?

May I ask why you chose to stripe your SLOG? Have you found a big difference in doing so? Also, have you tried NVMe drives, or do you think that's overkill?

Thanks again!

Glad to hear P19 solved it for you! Also thanks for confirming my results.

I get a little more performance out of a striped log, so I run it that way on my home storage.

Striping does help with throughput a little, since NFS is essentially sync=always with VMware, but to be honest, 99% of the time I don't notice the difference between a mirrored and a striped ZIL on my home setup. For mission-critical storage I always mirror (or do a stripe of mirrors). I've been thinking about NVMe but haven't gotten to it yet; it's hard to justify for my home lab since I already get more than enough performance out of an S3700.
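For reference, the difference between the two log layouts comes down to one keyword in the underlying `zpool add` syntax. On FreeNAS the GUI (Volume Manager) is the supported way to add a log device, since it handles partitioning and labels for you, so treat this as a sketch of what happens underneath. The pool and device names (tank, da6, da7) and the `add_slog` helper are hypothetical.

```shell
#!/bin/sh
# add_slog POOL mirror|stripe DEV1 DEV2 -- hypothetical helper.
#   mirror: both SSDs hold identical copies of the ZIL (redundant)
#   stripe: log writes are spread across both SSDs (a bit more throughput,
#           no redundancy)
add_slog() {
    pool="$1"; layout="$2"; dev1="$3"; dev2="$4"
    case "$layout" in
        mirror) zpool add "$pool" log mirror "$dev1" "$dev2" ;;
        stripe) zpool add "$pool" log "$dev1" "$dev2" ;;
        *) echo "add_slog: layout must be 'mirror' or 'stripe'" >&2; return 2 ;;
    esac
}
```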

I was a bit confused when you said FreeNAS was recommending P20, since they have been on P16 for as long as I can remember. It looks like FreeNAS recommends P20 with the latest 9.3.1 update. P20 is very new, and I'm not quite sure I'd consider it stable yet. I've updated the post to address this.

I had the opportunity to try out an S3710; it seems to have a bit of an advantage over the S3700, which could also be due to its size. The numbers aren't bad at all: scores are now much more in line with yours, and sometimes higher. So your SLOG setup definitely had an impact on your benchmarks.

Hi, Dave. The size will make a big difference, especially on sequential writes. What size did you end up getting?

I ended up getting the 200GB S3710. The 200GB S3700 is rated at 365 MB/s sequential write vs. 300 MB/s for the S3710, but the S3710 does higher random writes.

Both are faster than the 100GB S3700, which is rated at 200 MB/s sequential write and much lower random write.

I don't know why, but the 200GB S3710 is almost $100 less than the 200GB S3700, so it seemed like a reasonable deal.

Hi, Dave. Yeah, for that $100 price difference the S3710 is the way to go. Sometimes supply and demand get kind of odd after merchants have lowered their inventories of old hardware, which is what appears to be happening here.

Nice write-up!

I have been running an all-in-one FreeNAS/VMware box for 2 years now, but I recently ran into an issue after upgrading to the latest FreeNAS and VMware 6.0.

After rebooting, 75% of the time the NFS storage never shows up, so I can't boot VMs automatically. The issue is resolved by turning the NFS service in FreeNAS off and back on; the datastore then shows up right away. Based on some FreeNAS forum posts, I switched to iSCSI, and now I hit the NOP issue as well, which is how I found this post. Do you have the latest FreeNAS and VMware 6, and is your all-in-one booting up okay?

Hi, Reqlez,

Are you talking about rebooting just the FreeNAS VM or rebooting the ESXi 6 server? I have restarted FreeNAS quite a few times after installing an update, and it has always shown back up in ESXi 6. It has been a long time since I've rebooted ESXi, so my memory is a little vague, but I had to power it on from a cold boot after moving to a new house back in May, and I don't recall having any trouble with it. I do have my boot priority set to boot FreeNAS first.

I updated FreeNAS 6 days ago so I’m on FreeNAS-9.3-STABLE-201509022158, and ESXi 6.0.0 2494585 (haven’t updated it since the initial install).

If an NFS restart fixes it, one thing you could do is add a "service nfsd restart" post-init script in FreeNAS.
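As a sketch of that workaround: in the FreeNAS 9.3 GUI the entry goes under Tasks > Init/Shutdown Scripts, with Type set to Command and When set to Post Init (menu names assumed from that release). The command can simply be `service nfsd restart`. The variant below, with a hypothetical function name, also writes a line to syslog via `logger` so you can confirm after boot that it ran.

```shell
#!/bin/sh
# restart_nfs_post_init -- hypothetical wrapper for a FreeNAS post-init task.
# Restarts the NFS server and records the outcome in syslog.
restart_nfs_post_init() {
    if service nfsd restart; then
        logger -t post-init "nfsd restarted"
    else
        logger -t post-init "nfsd restart failed"
        return 1
    fi
}
```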

Yeah, it's when restarting the ESXi host. I'm just testing to make sure that when my APC Smart UPS turns power back on, the server actually comes back up, because I will be running some VoIP on it as well.

Running "service nfsd restart" on init in FreeNAS is not a bad idea; I will try it. I'm assuming those init scripts run after all the services start? I'm running ESXi 6 build 2809209, but I'm pretty sure it also happened with 2494585.

I don't know if it's FreeNAS-related or whether the new ESXi NFS service has issues when it boots up with the NFS server down. Maybe it "locks up" until the connection is restarted, but that doesn't really make sense. I'll try the init script in FreeNAS and report back.

A suggestion: I have found that if I make some modifications while ESXi is running, the NFS mount sometimes moves into an inaccessible state.

To get out of this, I either reboot or connect to the ESXi host's shell and issue the following commands:

# List NFS datastores
esxcli storage nfs list

# Unmount the share that shows as mounted but not accessible
esxcli storage filesystem unmount -l datastore2

# Remount the datastore
esxcli storage filesystem mount -l datastore2

This brings things back to life for me. I have never experienced what you describe on boot, but perhaps something is going on in the background: your mount comes online, disconnects, and then comes back online again, causing ESXi to put the share into an inaccessible state.

Ben, you are a true geek! The "service nfsd restart" post-init script in FreeNAS worked like a charm. I'm going to make it my default config from now on. By the way, I'm upgrading my ESXi lab to a D-1540 soon; this 16GB RAM limit is killing me. I'm running a D3700 as "host cache", but it's not optimal.

Sorry, I meant Intel S3700.

Glad that worked, Reqlez. If you get the D-1540, be sure to let me know how it works for you. Being able to go to 128GB, or even 64GB, really eases memory constraints. I only got 32GB, but there's plenty of room for growth. Also, having those 4 extra cores really helps with CPU contention between VMs if your server is loaded up, especially for these all-in-one setups.

That gives me all the more reason to use OmniOS, as I've read not a single good thing about P20. Also, it seems there are a few revisions of the firmware, and they give no indication of which version or revision is considered safe. My OmniOS + ESXi 6.0 combo on a Xeon D-1540 has been rock solid. I couldn't be happier, and I probably would never have figured out how to set it up if it weren't for your great guide!

Apparently there are several versions of the P20 firmware, and the one with 04 in it is considered safe; the one from last year had some complications. I'm running the 04 version and nothing has gone wrong so far, and I've put the drives through stress.
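LSI/Avago SAS2 firmware versions are reported as a dotted string such as 20.00.04.00: the first field is the phase (P20), and the third is what people mean by "the 04 version". On FreeNAS, `sas2flash -listall` (if the utility is present on your build) or the mps driver lines in `dmesg` show the string. The hypothetical helper below only splits it apart; it makes no judgment about which release is safe.

```shell
#!/bin/sh
# fw_phase VERSION -- hypothetical helper.
# Splits an LSI/Avago firmware version like 20.00.04.00 into phase and build,
# printing e.g. "P20 build 04".
fw_phase() {
    ver="$1"
    case "$ver" in
        *.*.*.*) ;;
        *) echo "fw_phase: expected a version like 20.00.04.00" >&2; return 2 ;;
    esac
    phase=${ver%%.*}   # 20
    rest=${ver#*.}     # 00.04.00
    rest=${rest#*.}    # 04.00
    build=${rest%%.*}  # 04
    printf 'P%s build %s\n' "$phase" "$build"
}
```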

My card has an LSI 2308 chip. I don't believe there is an 04 version for that one. Where did you download it?

I'm pretty sure I downloaded the latest from the LSI site. I cross-flashed to LSI firmware, but I don't have a 2308; I have an older model, a 9211, which I'm pretty sure has the 2008 chip.

For your model, you can download it here, but you might have to cross-flash somehow: http://docs.avagotech.com/docs/12350493

I don't know how to cross-flash from Supermicro to LSI firmware; I did IBM to LSI. Also, whenever I cross-flash (or flash in general), I skip flashing the BIOS file; that way there is no RAID screen every time you boot (quicker boot).

Thanks for the info, and for confirming that 04 is working for you. I will stick with P16 firmware until Supermicro releases the 04 version of P20. I've cross-flashed a few IBM M1015s to LSI 9211s, but I'm not so sure you'd want to do that with the Supermicro LSI 2308, especially since it's built into the motherboard.

Does anybody know if there is a way to store the swap file on the pool? I just got hit by the swap error because a drive failed, but I also don't want to run out of swap due to a memory leak or something. I see you posted that swap can be disabled and created under /usr, but isn't that also non-redundant?

/usr is on the boot drive where FreeNAS is installed, so if you lose /usr you're going down anyway. That said, I mirror my boot drives. I mentioned it briefly in step 7 but didn't go into detail: I have two local drives, so I put one VMDK on each, and during the FreeNAS install you can set them up as a mirror so it's redundant. Even with my method, if the drive housing the VM configuration files (e.g. the .vmx) dies, you will go down and have to rebuild the VM configuration on the other drive (which isn't hard to do). The only way around that is hardware RAID-1, which I personally don't use. I just have two DC S3700s, which are extremely reliable; the VM config (.vmx file) is on one, and the vmdks are mirrored.