I ran some benchmarks comparing the Intel DC S3500 vs the Intel DC S3700 when being used as a SLOG/ZIL (ZFS Intent Log). Both SSDs are what Intel considers datacenter class and they are both very reasonably priced compared to some of the other enterprise class offerings.

Update: 2014-09-14 — I’ve done newer benchmarks on faster hardware here: https://b3n.org/ssd-zfs-zil-slog-benchmarks-intel-dc-s3700-intel-dc-s3500-seagate-600-pro-crucial-mx100-comparison/

SLOG Requirements

Since flushing the cache to spindles is slow, ZFS uses the ZIL to safely commit random writes. The ZIL is never read from except when power is lost before “flushed” writes in memory have been written to the pool. So to make a decent SLOG/ZIL, the SSD must be able to survive a power loss in the middle of a write without losing or corrupting data. The ZIL translates random writes into sequential writes, so the device must also sustain fairly high throughput. I don’t think random write IOPS is as important, but I’m sure it helps some. Larger SSDs are generally better because they tend to offer more throughput. I don’t have an unlimited budget so I got the 80GB S3500 and the 100GB S3700, but if you’re planning for some serious performance you may want to use a larger model, maybe around 200GB or even 400GB.

Specifications of SSDs Tested

Intel DC S3500 80GB

- Seq Read/Write: 340 MB/s / 100 MB/s

- 70,000 / 7,000 4K Read/Write IOPS

- Endurance Rating: 45TB written

- Price: $113 at Amazon

Intel DC S3700 100GB

- Seq Read/Write: 500 MB/s / 200 MB/s

- 75,000 / 19,000 4K Read/Write IOPS

- Endurance Rating: 1.83PB written (that is a lot of endurance).

- Price: $203 at Amazon
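To put the two endurance ratings in perspective, here is a rough sketch that converts a rated endurance into drive lifetime at a constant write rate. The 10 MB/s average write rate is purely an assumption for illustration (it is not something I measured), and decimal units (1 TB = 10^12 bytes) are assumed.

```python
SECONDS_PER_DAY = 86_400


def days_until_rated_endurance(endurance_bytes: float, write_mb_per_s: float) -> float:
    """Days of continuous writing before the rated endurance is reached."""
    bytes_per_day = write_mb_per_s * 1e6 * SECONDS_PER_DAY
    return endurance_bytes / bytes_per_day


# Endurance ratings from the spec lists above (decimal units assumed).
S3500_80GB = 45e12     # 45 TB written
S3700_100GB = 1.83e15  # 1.83 PB written

# Hypothetical sustained average write rate, not a measured value.
ASSUMED_WRITE_MB_S = 10

for name, rating in (("DC S3500 80GB", S3500_80GB), ("DC S3700 100GB", S3700_100GB)):
    days = days_until_rated_endurance(rating, ASSUMED_WRITE_MB_S)
    print(f"{name}: {days:,.0f} days ({days / 365:.1f} years)")
```

At that assumed rate the S3500 would reach its rating in under two months while the S3700 would last several years; the S3700 is rated for roughly 40 times as many bytes written.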

Build Specifications

- Avoton C2750 (see build information here)

- 16GB ECC Memory

- VMware ESXi 5.5.0 build-1892794 (includes patch to fix NFS issues).

Virtual NAS Configuration

- FreeNAS 9.2.1.7 VM with 6GB memory and 2 cores. Using VMXNET3 network driver.

- RAID-Z is built from VMDKs on 3 × 7200 RPM Seagate drives.

- SLOG/ZIL device is a 16GB vmdk on the tested SSD.

- NFS dataset on pool is shared back to VMware. For more information on this concept see Napp-in-one.

- LZ4 Compression enabled on the pool.

- Encryption On

- Deduplication Off

- Atime=off

- Sync=Standard (VMware requests a cache flush after every write so this is a very safe configuration).

Don’t try this at home: I should note that FreeNAS is not supported running as a VM guest, and as a general rule running ZFS off of VMDKs is discouraged. OmniOS would be better supported as a VM guest as long as the HBA is passed to the guest using VMDirectPath I/O. The Avoton processor doesn’t support VT-d, which is why I didn’t try to benchmark in that configuration.

Benchmarked Guest VM Configuration

- Benchmark vmdk is installed on the NFS datastore. A VM is installed on that vmdk running Ubuntu 14.04 LTS, 2 cores, 1GB memory. Para-virtual storage.

- OLTP tests run against MariaDB Server 5.5.37 (fork from MySQL).

I wiped out the zpool after every configuration change, ran each test three times for each configuration, and took the average. In almost all cases subsequent tests ran faster because the ZFS ARC was caching reads in memory, so I was very careful to run the tests in the same order on each configuration; if I made a mistake I rebooted to clear the ARC. I am mostly concerned with random write performance, so these benchmarks focus on write IOPS rather than throughput.

Benchmark Commands

Random Read/Write:

# sysbench --test=fileio --file-total-size=6G --file-test-mode=rndrw --max-time=300 run

Random Write:

# sysbench --test=fileio --file-total-size=6G --file-test-mode=rndwr --max-time=300 run

OLTP 2 threads:

# sysbench --test=oltp --oltp-table-size=1000000 --mysql-db=sbtest --mysql-user=root --mysql-password=test --num-threads=2 --max-time=60 run

OLTP 4 threads:

# sysbench --test=oltp --oltp-table-size=1000000 --mysql-db=sbtest --mysql-user=root --mysql-password=test --num-threads=4 --max-time=60 run
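The "run three times and average" procedure could be scripted along these lines. This is only a sketch: I ran the commands by hand, the helper names are made up for illustration, and `run_once` stands in for whatever actually launches sysbench and extracts the per-second figure from its output (that parsing step is deliberately left out).

```python
import statistics
from typing import Callable, List


def sysbench_fileio_argv(mode: str, total_size: str = "6G", max_time: int = 300) -> List[str]:
    """Build the fileio command line used above (mode is 'rndrw' or 'rndwr')."""
    return [
        "sysbench",
        "--test=fileio",
        f"--file-total-size={total_size}",
        f"--file-test-mode={mode}",
        f"--max-time={max_time}",
        "run",
    ]


def average_of_runs(run_once: Callable[[], float], runs: int = 3) -> float:
    """Call run_once `runs` times and return the mean of the results."""
    return statistics.mean([run_once() for _ in range(runs)])


# Example with placeholder numbers (not real benchmark output).
placeholder_results = iter([186.0, 188.0, 190.0])
print(" ".join(sysbench_fileio_argv("rndwr")))
print(average_of_runs(lambda: next(placeholder_results)))
```

Passing the runner in as a callable keeps the averaging logic independent of how the benchmark is launched, so the same helper works for the fileio and OLTP tests.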

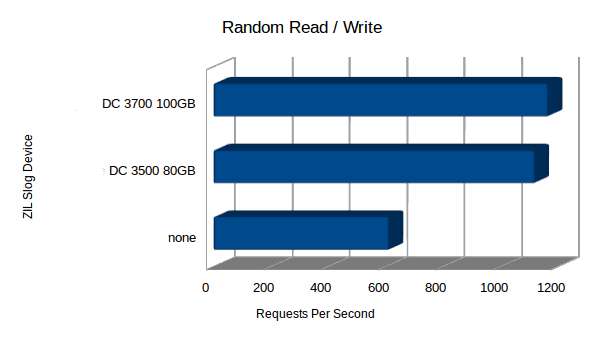

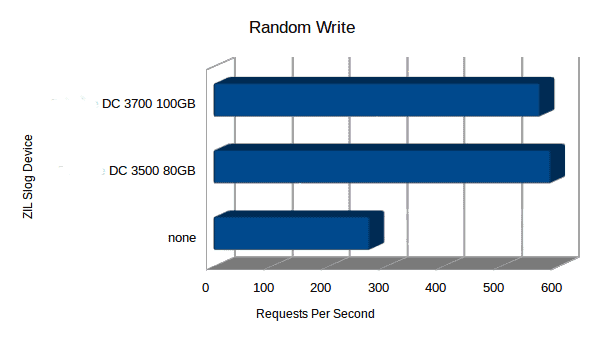

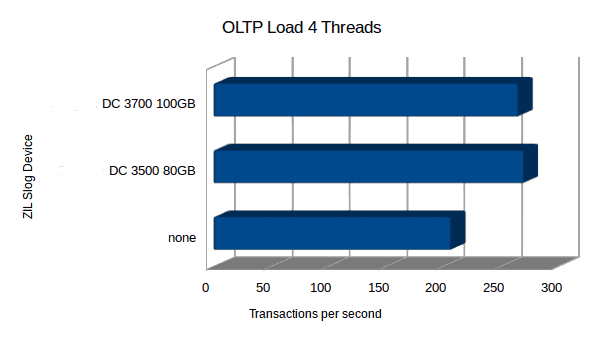

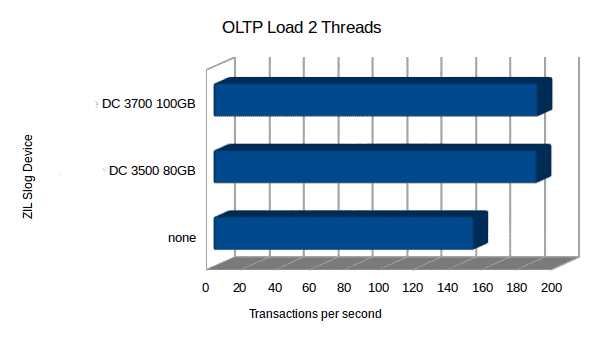

Test Results

| SLOG | Test | TPS Avg |
|---|---|---|
| none | OLTP 2 Threads | 151 |
| Intel DC S3500 80GB | OLTP 2 Threads | 188 |
| Intel DC S3700 100GB | OLTP 2 Threads | 189 |
| none | OLTP 4 Threads | 207 |
| Intel DC S3500 80GB | OLTP 4 Threads | 271 |
| Intel DC S3700 100GB | OLTP 4 Threads | 266 |
| none | RNDRW | 613 |
| Intel DC S3500 80GB | RNDRW | 1120 |
| Intel DC S3700 100GB | RNDRW | 1166 |
| none | RNDWR | 273 |
| Intel DC S3500 80GB | RNDWR | 588 |
| Intel DC S3700 100GB | RNDWR | 569 |
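For a quick read of how much each SLOG helps, the gain over the no-SLOG baseline can be worked out from the table. A small sketch using only the averages above (the dictionary layout and function name are just for illustration):

```python
# TPS averages from the results table above.
RESULTS = {
    "OLTP 2 Threads": {"none": 151, "S3500": 188, "S3700": 189},
    "OLTP 4 Threads": {"none": 207, "S3500": 271, "S3700": 266},
    "RNDRW": {"none": 613, "S3500": 1120, "S3700": 1166},
    "RNDWR": {"none": 273, "S3500": 588, "S3700": 569},
}


def percent_gain(baseline: float, value: float) -> float:
    """Percentage improvement of value over baseline."""
    return (value - baseline) / baseline * 100.0


for test, row in RESULTS.items():
    gains = ", ".join(
        f"{slog} {percent_gain(row['none'], row[slog]):+.1f}%"
        for slog in ("S3500", "S3700")
    )
    print(f"{test}: {gains}")
```

On these numbers either SLOG roughly doubles random-write throughput, while the OLTP gain is roughly 25 to 31 percent.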

Surprisingly the Intel DC S3700 didn’t offer much of an advantage over the DC S3500. Real life workload results may vary but the Intel DC S3500 is probably the best performance per dollar for a SLOG device unless you’re concerned about write endurance in which case you’ll want to use the DC S3700.
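The performance-per-dollar claim can be checked with simple arithmetic. This sketch divides the measured averages by the prices quoted earlier in this post (prices will obviously drift over time, and the names used here are only illustrative):

```python
# Prices as listed in the spec section of this post.
PRICE_USD = {"S3500 80GB": 113, "S3700 100GB": 203}

# TPS averages from the results table.
TPS = {
    "OLTP 2 Threads": {"S3500 80GB": 188, "S3700 100GB": 189},
    "OLTP 4 Threads": {"S3500 80GB": 271, "S3700 100GB": 266},
    "RNDRW": {"S3500 80GB": 1120, "S3700 100GB": 1166},
    "RNDWR": {"S3500 80GB": 588, "S3700 100GB": 569},
}


def tps_per_dollar(tps: float, price_usd: float) -> float:
    """Benchmark result divided by purchase price."""
    return tps / price_usd


for test, row in TPS.items():
    for drive, tps in row.items():
        value = tps_per_dollar(tps, PRICE_USD[drive])
        print(f"{test:15} {drive:12} {value:.2f} TPS per dollar")
```

On the random-write test the S3500 comes out at about 5.2 TPS per dollar versus about 2.8 for the S3700, simply because the results are nearly equal and the prices are not.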

Other Observations

There are a few other SSDs with power loss protection that would also work: the Seagate 600 Pro SSD and, for light workloads, consumer SSDs like the Crucial M500 and the Crucial MX100 would be decent candidates and still provide an advantage over running without a SLOG.

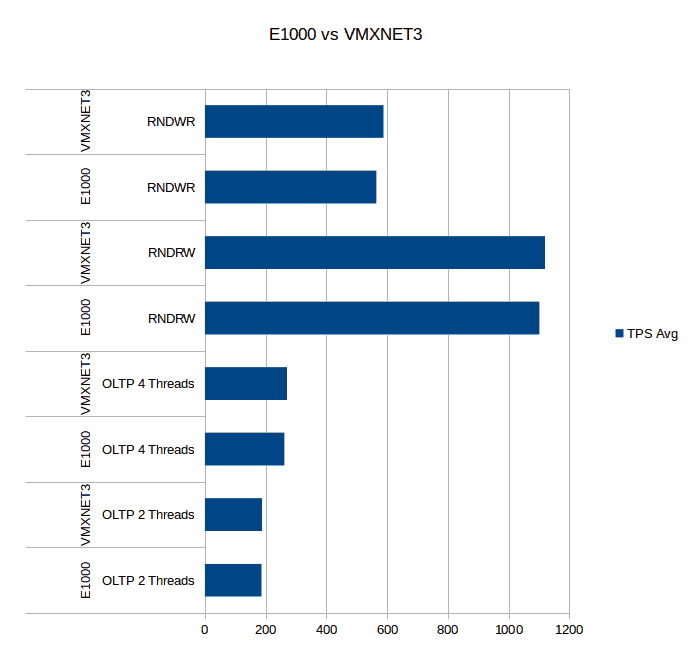

I ran a few tests comparing the VMXNET3 and E1000 network adapters and there is a small performance penalty for the E1000. This test was against the DC S3500.

| Network | Test | TPS Avg |
|---|---|---|
| E1000g | OLTP 2 Threads | 187 |
| VMXNET3 | OLTP 2 Threads | 188 |
| E1000g | OLTP 4 Threads | 262 |
| VMXNET3 | OLTP 4 Threads | 271 |
| E1000g | RNDRW | 1101 |
| VMXNET3 | RNDRW | 1120 |
| E1000g | RNDWR | 564 |
| VMXNET3 | RNDWR | 588 |

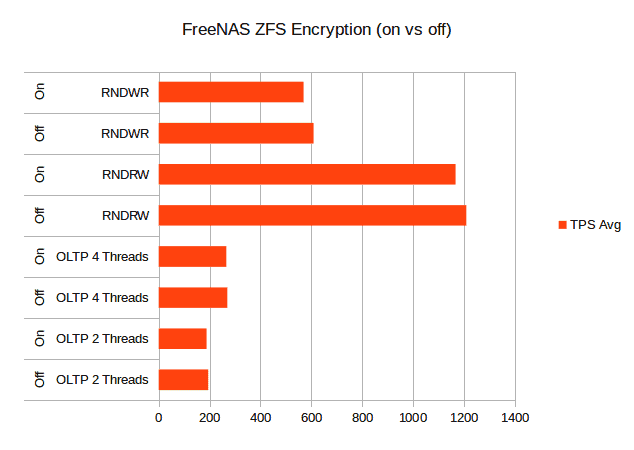

I ran a few tests with Encryption on and off and found a small performance penalty for encryption. This test was against the DC S3700.

| Encryption | Test | TPS Avg |
|---|---|---|
| Off | OLTP 2 Threads | 195 |
| On | OLTP 2 Threads | 189 |
| Off | OLTP 4 Threads | 270 |
| On | OLTP 4 Threads | 266 |
| Off | RNDRW | 1209 |
| On | RNDRW | 1166 |
| Off | RNDWR | 609 |
| On | RNDWR | 569 |
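To size the two penalties, here is a short sketch that expresses each slower configuration as a percentage drop from the faster one, using the averages from the network and encryption tables above. With only three runs per configuration I can't say how much of a difference this small is noise, so treat the output as indicative only.

```python
# (faster, slower) TPS averages per test, taken from the two tables above.
NETWORK = {  # VMXNET3 vs E1000g, on the DC S3500
    "OLTP 2 Threads": (188, 187),
    "OLTP 4 Threads": (271, 262),
    "RNDRW": (1120, 1101),
    "RNDWR": (588, 564),
}
ENCRYPTION = {  # encryption off vs on, on the DC S3700
    "OLTP 2 Threads": (195, 189),
    "OLTP 4 Threads": (270, 266),
    "RNDRW": (1209, 1166),
    "RNDWR": (609, 569),
}


def percent_penalty(faster: float, slower: float) -> float:
    """Percentage drop of slower relative to faster."""
    return (faster - slower) / faster * 100.0


for label, table in (("E1000g vs VMXNET3", NETWORK), ("Encryption on vs off", ENCRYPTION)):
    for test, (faster, slower) in table.items():
        print(f"{label:22} {test:15} -{percent_penalty(faster, slower):.1f}%")
```

Both penalties are largest on the random-write test (about 4 percent for the E1000 and about 6.6 percent for encryption) and smaller on the rest.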

Great, now I know which SSD I should buy. Unfortunately, the Intel DC series is not available in my country, so I need to ship from the US.

What about the Crucial M500 or Crucial MX100? Those seem to be good options if you don’t need the endurance of the DC 3700.

I mistakenly wrote Seagate instead of Intel in a few places (like the charts)… I’ll fix it later but just want to clarify it’s the Intel DC S3500 and S3700.

Fixed.

I might avoid Crucial for ZIL as their power loss data protection does not protect all data in the cache. The S3500 and S3700 sound good for ZIL though. http://lkcl.net/reports/ssd_analysis.html

The Crucial M500 and MX100 should be okay. The Crucial that failed in that test you linked to is the Crucial M4 which doesn’t claim to have power loss protection. That said the DC S3500 and S3700 are widely used and aren’t that expensive so they would be my top choice (I run the DC S3700 on my server).

What do you think about mirroring the ZIL with a second S3700? With respect to write endurance, is there a need for a second S3700 to mirror the ZIL?

Hi, John. The failure rate on these is quite low: http://www.tomsitpro.com/articles/intel-dc-s3710-enterprise-ssd,2-915-8.html I’ve seen arguments that you don’t need to mirror because the ZIL is already mirrored in RAM, so a device failure would only mean data loss in the event of a power failure. However, I have been able to produce kernel panics on occasion by pulling a SLOG device in the middle of writes. So it seems there is a risk of a kernel panic caused by a SLOG failure (granted, there is a pretty small chance that would happen with a DC S3700), which could lead to a few seconds of data loss without a mirror. Also, ignoring the possibility of data loss, you would need to consider availability: pool performance would degrade significantly, perhaps to the point of being unusable under load, until you could replace the device. So you’d want to have a spare on hand, and if you have a spare, why not put it in as a mirror to begin with?

For storage where a day of downtime wouldn’t be catastrophic (like at home) I just use a single DC S3700 or stripe two of them to get more throughput. For enterprise storage where up-time is critical I always recommend a mirror to be on the safe side. The ZeusRAM has lower latency than the DC S3700 but you’ll pay for it.

Thanks Ben, you convinced me to mirror the ZIL.

Btw, the reason for not mirroring the ZIL and keeping a spare S3700 in case of failure is that mirroring the ZIL uses all the 6Gb/s slots, so there is no slot left for L2ARC in case that is needed.

Well, then. You need more slots!

I wanted to wait with expanding the slots until I need to expand outside the box with a SAS expander. I only need one slot now, and internally there is room left for one, maybe two SSDs with some squeezing. For the L2ARC I was thinking about something like the 4 Channel, 2 mSATA + 2 SATA 6Gb/s PCIe RAID Card from Vantec and up to four Samsung SSD 840 EVO mSATA. Not as fast as the Samsung Pro but the Pro is not available as mSATA.

http://www.vantecusa.com/en/product/view_detail/650

I am wondering how the Samsung 950 and 960 NVMe products compare to the Intel Enterprise products. NVMe should have a LOT less latency compared to SATA. It is too bad the ONLY company making a 2 port NVMe PCI-e 3.0 x8 card is IBM. I bet the card is like $5,000, where the single slot PCI-e 3.0 x4 card on Newegg is $23. LOL