My Proxmox server was at 85% capacity. The daily email from Proxmox reminded me of two drives throwing SMART errors. It was time to replace the array. I like to think hard drives can last 5-years so it’s best to proactively replace drives around that age before the failure rate starts to increase.

I ask myself, what’s the most I will need 5-years from now.

The answer is always lots of Terrabytes.

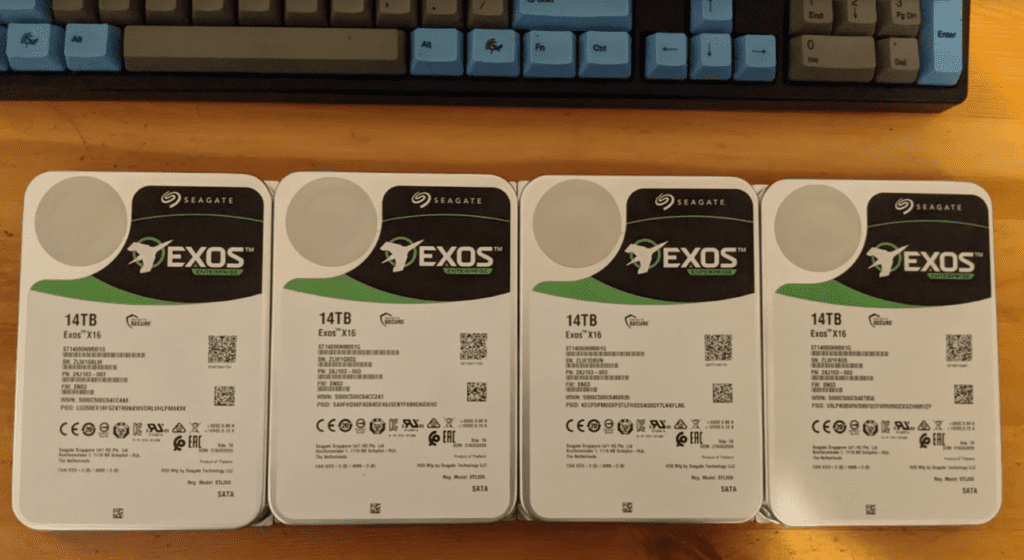

Here’s what 56TB looks like. I am amazed how much data you can store in a 4-bay NAS these days. At RAID-Z (like RAID-5 which uses one drive for parity) this will be 42TB.

I referenced my own ZFS hard drive guide (I eat my own dogfood) and looking at prices decided on the 14TB Seagate Exos X16. They’re 7200RPM Enterprise-class, and starting at 14TB they get a 256GB cache. These are CMR (not SMR) which is what you want for ZFS or RAID systems.

Upgrading a ZFS Zpool by replacing disks with larger drives

RAID-Z VDEVs can be expanded in size by replacing and resilvering all of the drives in the array, one at a time.

The first step, is to make sure backups are good. I would not proceed without good backups.

Next, for safety, run a zpool scrub. This makes ZFS read every block of data on every drive and verifies it against a checksum to make sure all of the data is readable and there is no data corruption. Having bad data during a rebuild would be bad because there wouldn’t be enough parity to reconstruct it and then I’d have to recover from backup.

I should note that at the cost of another drive dedicated to parity, a raid level like RAID-Z2 (RAID-6) results in double-parity so has a lesser risk of failure during resilvering. But I didn’t want to lose half my drives to parity.

zpool scrub pvepool1This is a great time to go to bed and check on things in the morning.

In the morning, check the status.

zpool status pvepool1If the scrub had found a drive with any errors, I would replace that disk first. But in my case zpool status showed no errors so I’ll just replace the first disk (number 0). To do this, no need to offline anything, just physically pull the first tray and wait for the drive to spin down.

For those wondering, this is my AMD 8-Core EPYC Home Server Build.

Swap out the drive for a new one (don’t torque the screws, just tighten them).

Re-insert the tray. Run zpool status to see which drive needs to be replaced. You can also run dmesg to see the device name of the device you just inserted. In my case it was /dev/sda

zpool status pvepool1

dmesg

zpool replace pvepool1 sdaRun the status command to see how it’s progressing. In my case, I was rebuilding a nearly full four 2TB RAID-Z array and it took a bit less than 5 hours per drive. It’s also a good idea to run an extra scrub after replacing each drive to verify that all the data can be read back before moving on to the next drive.

zpool scrub pvepool1

zpool status pvepool1I repeated the process for sdb, sdc, and sdd to replace all 4 drives.

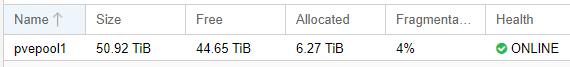

The whole process took a few days. Finally, after replacing and resilvering the last drive I turn on auto-expand for the pool, and expand all of the devices.

zpool set autoexpand=on pvepool1

zpool online -e pvepool1 sda

zpool online -e pvepool1 sdb

zpool online -e pvepool1 sdc

zpool online -e pvepool1 sddAnd now, Proxmox can see the additional space.

The new Seagate Exos X16 drives are not silent, but fairly quiet for Enterprise-class drives. I can hear them thumping during data writes but it’s not loud. It kind of reminds me of my first IBM computer.

I’d much rather have a little noise and character from my computers than the soulless silence of an SSD. As far as noise level they’re fine in an office, basement, or closet. But I wouldn’t run these drives in a bedroom.

Happy Halloween, and Happy Reformation Day!

Love the concept of ZFS, absolutely HATE the community. If you aren’t running server-class hardware with ECC RAM, they look down their noses at you and any assistance you ask for will simply be them “shaming you” for not running enterprise-class products on a home network.

On the topic of SSD’s – My personal experience is that SSD’s are quite variable in terms of the quality of drive you can and you have to be somewhat careful about which ones you buy if you want them to last. To get quality ones that are large in size, it will be quite a bit more than the mechanical drives. I do have a couple that I use, but their contents never change.

One question – for a home NAS, why not 10k drives instead of 7200? I actually notice a very significant improvement in write and read across the network whether wired (gigabit) or WiFi, although server-to-server (wired) is definitely the biggest improvement.

I have found the people at HardForum pretty helpful in regards to ZFS, they were also little more tolerant of my experimenting.

Good question, well, honestly I haven’t considered 10K drives. I’m not sure you could get them this large. I’m happy enough with the performance of 7200RPM. My four-bay TrueNAS with RAID-Z (RAID-5) saturates gigabit ethernet on both read and write. My local VMware and Proxmox VMs feel a lot snappier than anything I’ve ever had on AWS or DigitalOcean. ZFS has a lot of features that reduce the load on spinning disks: SSD Write Cache (I use an Intel DC S3700), ZFS is a Copy-on-Write filesystem so modified blocks are written to a new area of the disk instead of updating existing blocks. This results in most random writes being converted to sequential writes by the time it hits spinning disks–and you know how that helps with the slower seek times of 7.2K vs 10K. Data is compressed on the fly with LZ4 before it is written which can result in lower latency for read/writes since it takes up fewer (or smaller) blocks. ZFS is also a filesystem, and if you choose to use it, it is aware of the underlying data… this helps because it can have variable block sizes from 4K to 1M depending on how large each file is.

Probably the biggest benefit of ZFS is the ARC (Adaptive Replacement Cache) which prefetches the most frequently accessed data along with anticipating which data it thinks will be needed next, and stores those in memory. That frees up a lot of disk I/O. I just checked my Proxmox server and it looks like 98% of my read requests are being served out of ARC, a few times an hour I see that briefly drop down to 90%. My spinning disks are hardly being touched so I don’t think 10K would improve it much unless my workload was a little heavier.

Hadn’t explored HardForum, maybe I’ll give it a go to see what it’s like. I’m getting to a point where I want to re-do what I have for NAS in the house anyhow, so maybe it’s time to look at something like ZFS again instead of just using NFS and straight Linux EXT4 filesystems.

10k on large drives – yeah, you’re probably right that it’s not out there in quantity. So, cost would be a huge factor. I want to revisit my previous comment here, too. Yes, I see a big improvement in read/write on my NAS with faster drives, but mine are 7200 (my previous comment is at least implying that I am running 10k and I’m not). I wasn’t sure if you had thought about pushing the boundaries a bit more with 10k. I can appreciate the moving of data files to keep the bits sequential, too. As long as your drive doesn’t exceed about 90% full, this should always be feasible and keeps things arranged nicely.

Is there any guidance provided for the kind of SSD to use and how often to replace it for the write cache? Using SSD to store all data before committing to physical disk sort of ‘scares me’ because of the higher potential for SSD to wear out and fail. SSD is an excellent medium for content that’s frequently read but seldom written…

WRT compression of data before writing to disk… Do you actually get any speed difference there? What about deltas in storage capacity? File content like MP3’s, which are already very highly compressed, will often take up MORE space if you try and compress them. And the trade-off with speed may also not be worth it.

I’m curious, too, about whether pre-fetch actually has any true value. When you’re talking about 10TB of data on disk compared with 128G of RAM (maybe 100GB of which could be stacked as a RAM Drive for pre-fetch), you’re talking orders of magnitude difference. How much data can the system actually fetch in a predictive manner that speeds anything up? Acting as a file cache to store recently retrieved data that is requested again in short order will definitely be of benefit… I genuinely wonder about the true benefit of this in a home network type of setup where the number of users is extremely small and the number of data files is extremely large. It would seem that you’d need a lot more users and/or a lot less data files in play to truly start seeing benefits around cache or pre-fetch. Have you done any measurements? For me, I don’t think it would help since I’m storing so much content that’s very static (movies) and content being retrieved is by only a couple of users (my Plex server and possibly a Plex client) infrequently. I’d be curious to know about real-world measurements, though.

Well Mark, I just remembered that once I did get a few 10K drives in a server I bought off eBay but they were way too loud! I don’t have a basement so all of my things must be quiet enough to live in my spare bedroom/home office. |:-)

I know the right SSDs are reliable. At work, some of our most demanding database workloads run on all SSD TrueNAS Enterprise units.

With SSDs it’s just a matter of getting the right ones. This is old, but I did an experiment and was surprised at how badly some SSDs performed as a ZFS write cache compared to others: https://b3n.org/ssd-zfs-zil-slog-benchmarks-intel-dc-s3700-intel-dc-s3500-seagate-600-pro-crucial-mx100-comparison/ In general, I’ve found Intel (Intel just sold their SSD business to SK Hynix so I’m not sure how long this will hold up) makes the best SSDs that can handle a power loss and have high write endurance. For my main Proxmox server, I forgot I’m not using a DC S3700, I’m just using a 16GB Intel Optane M.2 SSD: https://amzn.to/3efH108 which has a write endurance of 365TB: https://www.intel.com/content/www/us/en/products/memory-storage/optane-memory/optane-memory-m10-series/optane-memory-m10-16gb-m-2-80mm.html I’ve been running this for 8-months with a light workload and SMART says I’ve got a 2% wear on the SSD. My understanding is depending on a lot of things, not every write goes to SSD. If you’ve got a large sequential write and the OS isn’t requesting a flush to disk, ZFS may bypass the SSD altogether.

One thing that isn’t commonly known is that ZFS always stores the in-flight writes to the ZIL (ZFS Intent Log) which is in RAM and then flushes to spinning disks from there. If the OS requests a sync, ZFS flushes the ZIL to disk immediately. The only thing an SSD write-cache does is mirror the ZIL so that the data is flushed to SSD instead of spinning disks when a sync is requested. But ZFS will still keep the data in RAM until it’s flushed to spinning disks. The only time the SSD is ever read from is in case of a crash or power loss.

With that said, one option that most people would frown upon, but should work, without even using SSDs is you can run ZFS with sync=disabled. With sync disabled ZFS will just ignore sync requests keeping the writes in memory until it gets around to flushing them to disk–this greatly reduces disk I/O. The downside is if you had a kernel panic or lose power you’d lose the last 30 seconds (I’m not sure if it’s exactly 30 seconds but around there) or so of writes. Because ZFS is CoW there will not be any data corruption. When power was restored you’d just be 30 seconds back in time–ZFS might refuse to bring the pool online if it knows it came up in an incosistent state in which case you’d just run a command to roll back a few seconds. I wouldn’t run that configuration myself, but maybe a home NAS could tolerate that, and then you’d get the write performance of RAM.

You are correct in that the read cache benefit really depends on your workload size. The larger it is the more you’ll be hitting those spinners.

If ZFS doesn’t gain at least 12% compression improvement it stores the uncompressed data instead. The only cost to compression is higher CPU load but LZ4 is so fast that unless you’re running old Hardware it shouldn’t be an issue. Here’s some tests that showed CPU utilization/latency with compression on and off: https://www.servethehome.com/the-case-for-using-zfs-compression/ You can also turn compresson off and on per dataset so you could always turn if off in places you know you’re only storing non-compressible data like MP3s. Where I find the most compression benefit is VMs, I see a 1.5 compression ratio there.

I haven’t done a test on prefetch, my guess is it is indirectly helping with larger reads to a small degree. E.g. If you’re running VMs and Plex storage on the same array, the more predictable and frequent VM accesses may be cached in RAM which means when you stream a large file off Ples the spinners have more I/O available for that process since they’re not also having to service your VMs reads. Like I say, I haven’t tested this.

True story about the 10k’s being louder, and I can totally appreciate wanting / needing to be considerate of just how loud systems are. I am fortunate that I can store my server rack in the basement where the noise is heavily deadened from the living space AND where it’s cooler than in the house. Still, with three HP Z800’s and a couple of desktops, all with lots of spinning drives in them, it can get a bit noisy in that area.

“Write endurance of 365TB” – that’s a new and interesting way to rate longevity. SSD’s are normally rated in P/E (Program/Erase) cycles which tie directly to writes on disk. If you assume the 16G drive you mentioned is made up of 2k blocks, that would yield 8,000,000 blocks on the disk. 365TB would require writing to each of those blocks 20,000 times each. That would put that device squarely into the DLC arena for SSD’s which is a great place to be. SLC is enterprise-class (like the stuff you have at work) and can be rated for up to 100k write cycles. But, it’s expensive. MLC is cheap, but doesn’t hold up well (what many thumb drives contain – not intended to be written to extensively).

A 1.5 compression ratio is pretty good. As I mentioned, I’m not at all seasoned with ZFS because my initial attempts to leverage it were met with pure hatred from the community because I wasn’t willing to build an enterprise-class NAS for my home media needs. But, it’s good to know that there is a lot of intelligence built into the setup to do things like skip compression if the savings on disk isn’t actually useful. I’m definitely going to have to look more closely at it to see if putting it in place would make sense.

Do you run your VM’s on a machine separate from your NAS? I’m expecting that you do, but wanted to be sure. If I build a ZFS-based NAS, I would use one of my Z800’s with four large drives and carve out a portion for my XenServer host to use for VM disk storage instead of keeping it local to the host.

I’m pretty sure the Intel SSDs are overprovisioned… I wouldn’t be surprised if the 16GB SSD had 128GB under the hood.

At home, I’m doing VMs and ZFS on the same box. My lab is always changing around, but currently, I have two hyper-converged Proxmox servers and then I have a FreeNAS server that’s just a NAS and also acts as a backup target for Proxmox and my Windows desktops.

TrueNAS is coming out with a product called TrueNAS Scale (currently in alpha) based on Debian which is going to be more of a hyper-converged platform https://www.truenas.com/truenas-scale/ I think it would be like combining Proxmox and TrueNAS into one product.

You’ll have to let me know how it goes!

First off I love your blog! You have really good articles that I refer to quite frequently about ZFS and ESXi. Long time lurker first time poster. It’s been my understanding that making a Raidz1 array with disks larger than 2-3 TB is a bad idea, can you explain the rationale for why you did with 14TB disks?

Thanks Aidan! That means a lot to me. I just checked out your blog, looks like you just got Gigabit fiber as well! Congratulations!

I agree with your understanding, it probably is a bad idea. The argument against this setup is that the rebuild time for a RAID-Z1 14TB is so long (a week?) that there’s a high risk of another drive failure during a rebuild, or perhaps a bad sector on one of the drives means it can’t be rebuilt.

However, my rationale is that I’m thrifty and I’d rather just lose 1/4 than 1/2 of my drives to parity. I back up all of the important data to a separate ZFS server, so in reality, I have the data on two RAID-Z servers which I think is slightly safer than one server with RAID-Z2. Plus the most essential data is backed up to two offsite locations. So if I lose my array during a rebuild I still have 3 copies of my essential data. I also have SMART monitoring and do a monthly zpool scrub with email alerts on both so in all probability, I’m going to know ahead of time if one of the drives is corrupting data or is near failure and can react proactively.

Downtime also isn’t a large financial cost to me. If I have downtime for a few weeks maybe it means my family can’t watch Emby movies–not the end of the world, this blog is hosted from these drives as well but I could boot the VM running b3n.org from the daily backup on my spare Proxmox server within a matter of minutes. (ultimately I would like to do Ceph or GlusterFS so this can failover automatically but that’s a project for another day).

That said, if the cost wasn’t a concern, I would be doing RAID-Z2 or mirrors (RAID-10).

You’re welcome. And thank you, I ordered the upgrade (from 100/100) on Thursday and it took effect this morning, pretty sweet!

It’s also a space consideration isn’t it? The server you upgraded only has 4 bays? If so and if cost wasn’t a concern I’d say go RAID10 as mirrors beat RAIDZ all day long in terms of performance so rebuilds don’t take nearly as long. With that being said however there are many that would argue RAID10 isn’t as safe as RAIDZ2 because in the latter you can lose any 2 disks whereas in the former you can only lose 2 disks from each mirrored vdev.